AI coding assistants are powerful but can silently introduce critical errors; learning how to harness their strengths while systematically avoiding pitfalls can dramatically boost productivity, especially in small teams.

My cofounder (and brother), Yitz, and I ship fast at yeshaya.dev. As a two-person team, we feel every minute. That’s why we loved the efficiency of AI-driven autocomplete, until it quietly broke our app.

Our whole stack went down because of a single `return null`, and AI wrote it for us. One night, while we were working late on a Next.js API route, Cursor’s autocomplete dropped in placeholder logic:

if (!record) {
  // TODO: Improve error handling later
  return null;
}

It looked harmless enough, so we quickly deployed to Vercel. Seconds later, the production app was down and our users were greeted by a blank screen. It turned out that React server-side rendering had stumbled on that innocent-looking `null`, triggering a fatal runtime error.

The takeaway was clear: AI-generated code needs to be heavily monitored.
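As a minimal sketch of the safer pattern, assuming the App Router (where route handlers can return a standard Web `Response`), every branch below returns an explicit response instead of a bare `null`. The `RecordRow` type, the in-memory `records` map, `findRecord`, `json`, and `handleGetRecord` are hypothetical stand-ins for illustration, not our production code.

```typescript
// Hypothetical record shape and in-memory store standing in for a real database.
type RecordRow = { id: string; title: string };
const records = new Map<string, RecordRow>([["42", { id: "42", title: "Launch post" }]]);

function findRecord(id: string): RecordRow | undefined {
  return records.get(id);
}

// Builds a JSON Response with an explicit status code.
function json(body: unknown, status: number): Response {
  return new Response(JSON.stringify(body), {
    status,
    headers: { "content-type": "application/json" },
  });
}

// Every branch returns a Response; nothing falls through as null.
export function handleGetRecord(id: string | null): Response {
  // Validate input before touching the data layer.
  if (!id || !/^\d+$/.test(id)) {
    return json({ error: "Invalid id" }, 400);
  }
  const record = findRecord(id);
  if (!record) {
    // An explicit 404 instead of `return null`.
    return json({ error: "Record not found" }, 404);
  }
  return json(record, 200);
}
```

In a `route.ts` file, an exported `GET(request: Request)` could pass `new URL(request.url).searchParams.get("id")` to `handleGetRecord` and return its result. The design point is that a missing record becomes a visible 404 rather than an unexpected value further down the stack.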

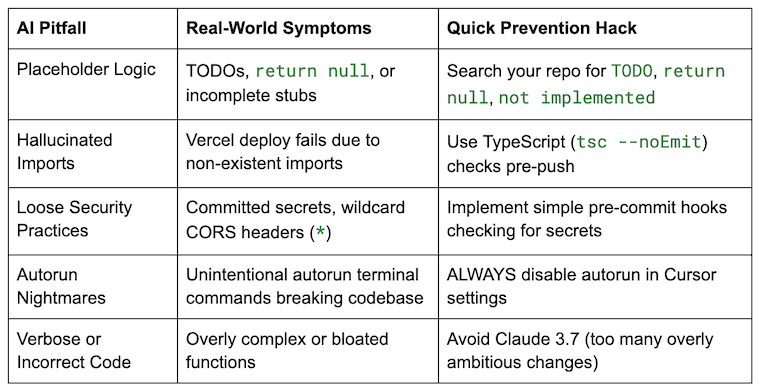

5 Common AI Coding Assistant Problems

Hallucinated imports.

Loose security practices.

Autorun nightmares.

Verbose/incorrect code.
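Hallucinated imports are the easiest of these to catch mechanically. `tsc --noEmit` already fails on a module that doesn't resolve; a lightweight complement is to compare every bare import in AI-generated code against the dependencies declared in `package.json`. The sketch below assumes the file's source is available as a string and the declared dependency names have already been read from `package.json`. `findUnknownImports` and the partial built-in list are hypothetical, and the regex only covers static `import` and `require` forms.

```typescript
// Node built-ins that never appear in package.json (a partial list, for illustration).
const BUILTINS = new Set(["fs", "path", "crypto", "url", "os", "child_process"]);

// Captures the specifier in `import x from "pkg"`, `import "pkg"`, and `require("pkg")`.
const IMPORT_RE = /(?:import\s+(?:[^'"]*?\s+from\s+)?|require\s*\(\s*)["']([^"']+)["']/g;

// Reduces a specifier to its package name: "lodash/merge" -> "lodash", "@scope/pkg/x" -> "@scope/pkg".
function packageName(spec: string): string {
  const parts = spec.split("/");
  return spec.startsWith("@") ? parts.slice(0, 2).join("/") : parts[0];
}

// Returns imported package names that are not declared dependencies.
export function findUnknownImports(source: string, declared: string[]): string[] {
  const known = new Set(declared);
  const unknown = new Set<string>();
  for (const match of Array.from(source.matchAll(IMPORT_RE))) {
    const spec = match[1];
    // Skip relative paths, absolute paths, "@/..." path aliases, and "node:" built-ins.
    if (/^(\.|\/|@\/|node:)/.test(spec)) continue;
    const name = packageName(spec);
    if (!BUILTINS.has(name) && !known.has(name)) unknown.add(name);
  }
  return Array.from(unknown);
}
```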

Common AI Assistant Pitfalls and How to Spot Them

When you’re running lean, these subtle traps are especially hazardous. We’ve learned the hard way to identify and preempt them.

For small teams, emergencies are costly. Catching these early saves hours of stress later.

Streamlined, Prompt-Driven Workflows Using XML Tags

Rather than relying on generic scaffolding tools like Yeoman or Plop.js, we maintain explicit prompts, structured with clear XML tags, in a markdown file (`scaffolding-prompt.md`). This file serves as both our spec and our documentation:

We are yeshaya.dev, a boutique digital marketing agency, tasked with building a sleek, modern marketing site for a client in the “ space.

You are tasked with creating a responsive, high-performance website that showcases the client’s portfolio with smooth animations and strong typographic hierarchy. The website must have a type-safe, secure architecture with sub-200ms response times and be optimized for SEO and maintainability.

– Use Next.js (App Router) with TypeScript

– Use `pnpm` as the package manager

– Use `tailwindcss` for styling

– Use `framer-motion` for animations and page transitions

– SSR critical routes, lazy-load non-essential components

– Keep all API routes behind proper validation & sanitization layers

This format works well for three reasons: humans can understand and review it at a glance, it is structured clearly enough for AI tools to generate accurate results, and it makes iterating on or A/B testing prompt variants easy.
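To make the structure concrete, here is a minimal sketch that assembles the sections of a prompt like the one above into XML-tagged text. The tag names (`context`, `task`, `requirements`, `requirement`) and the `buildPrompt` helper are hypothetical illustrations, not the exact tags in our file.

```typescript
// Hypothetical shape of a scaffolding prompt; tag names are illustrative only.
interface PromptSpec {
  context: string;
  task: string;
  requirements: string[];
}

// Escapes characters that would break the XML-style structure.
function escapeXml(text: string): string {
  return text.replace(/&/g, "&amp;").replace(/</g, "&lt;").replace(/>/g, "&gt;");
}

// Wraps each section in its own tag so humans and models can find it at a glance.
export function buildPrompt(spec: PromptSpec): string {
  const reqs = spec.requirements
    .map((r) => `  <requirement>${escapeXml(r)}</requirement>`)
    .join("\n");
  return [
    `<context>${escapeXml(spec.context)}</context>`,
    `<task>${escapeXml(spec.task)}</task>`,
    `<requirements>\n${reqs}\n</requirements>`,
  ].join("\n\n");
}
```

Keeping each section in its own tag is what makes the prompt easy to diff, review, and swap piece by piece when A/B testing.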

Recommended Workflow

Write or refine prompts first.

Generate the initial scaffold using Cursor or Windsurf (autorun off!).

Incrementally refine with manual edits.

Run a quick, five-minute peer review of the prompt and the generated code.

Keeping CI Lightweight Yet Effective

We don’t have the luxury of multi-stage PR processes or extensive QA resources. Instead, we use a minimalistic but strict setup:

Pre-Push Check (Husky Pre-Push)

pnpm lint && pnpm test && tsc --noEmit && depcheck
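The one-liner above stops at the first failing command, which is exactly what a pre-push gate should do. If you'd rather have the hook report which check failed, a small Node script can run the same commands in order. This is a sketch under our own assumptions: `CHECKS`, `runChecks`, and the injectable `Runner` type are hypothetical, and the script relies only on Node's built-in `spawnSync`.

```typescript
import { spawnSync } from "node:child_process";

// The same checks as the pre-push line, in the same order.
export const CHECKS = ["pnpm lint", "pnpm test", "tsc --noEmit", "depcheck"];

// Runs one shell command and returns its exit status (0 means success).
export type Runner = (cmd: string) => number;

const shellRunner: Runner = (cmd) => {
  const result = spawnSync(cmd, { shell: true, stdio: "inherit" });
  // A null status means the process was killed by a signal; treat it as a failure.
  return result.status ?? 1;
};

// Runs checks sequentially and returns the first failing command, or null if all pass.
export function runChecks(checks: string[], run: Runner = shellRunner): string | null {
  for (const cmd of checks) {
    if (run(cmd) !== 0) return cmd;
  }
  return null;
}
```

A hook could call `runChecks(CHECKS)` and exit with a non-zero code when it returns a command name. Injecting the runner keeps the fail-fast logic testable without actually invoking `pnpm`.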

Vercel Preview Build

This automatically checks that the app runs in a realistic, production-like environment. A broken preview blocks the deploy.

Quick Teammate Review

A developer other than the author briefly scans the diff and the XML prompt before merging. There are no long PR waits, just a quick sanity check. This structure strikes a practical balance between caution and agility.

Essential Cursor and Windsurf Best Practices

After extensive trial and error, here are our go-to settings.

Autorun

Always disabled.

Preferred Models

o4-mini

Our pick for sensitive or critical code. In our experience it is more accurate and reliable, significantly reducing the risk of subtle bugs or security vulnerabilities.

GPT-4.1

Our pick for rapid iteration. It’s ideal when speed matters more than perfect accuracy, because it allows for quicker feedback loops.

Not Claude 3.7

We avoid it because it often produces overly ambitious refactors or needlessly complex solutions, which increases debugging time and cognitive overhead.

Incremental Coding With Edit-Test Loops

One powerful trick we adopted is forcing Cursor to write code in small increments, using a quick edit-test loop:

Define the smallest meaningful increment.

Write (or AI-generate) a failing test case first.

Use Cursor or Windsurf (in agent mode) to write just enough code to pass the test.

Run tests immediately after code generation.

If a test fails, let the AI diagnose and fix it. Repeat until the tests pass.

The rationale is straightforward: smaller increments allow rapid diagnosis and resolution of issues. Keeping each iteration minimal makes debugging simpler and faster, keeps generated code closely aligned with the requirements, and prevents runaway complexity from accumulating silently.
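Here is what one such increment might look like, using a hypothetical `slugify` helper and a plain throwing test rather than any particular test framework. In a real project, the test would live in your test suite and the implementation would be the AI-generated step.

```typescript
// Step 2: the failing test, written before any implementation exists.
function testSlugify(): void {
  const cases: Array<[string, string]> = [
    ["Hello World", "hello-world"],
    ["  Next.js & React  ", "next-js-react"],
  ];
  for (const [input, expected] of cases) {
    const actual = slugify(input);
    if (actual !== expected) {
      throw new Error(`slugify(${JSON.stringify(input)}) = ${actual}, expected ${expected}`);
    }
  }
}

// Step 3: just enough code to make that test pass, and nothing more.
function slugify(input: string): string {
  return input
    .trim()
    .toLowerCase()
    .replace(/[^a-z0-9]+/g, "-") // collapse each run of non-alphanumerics into one dash
    .replace(/^-+|-+$/g, ""); // drop leading and trailing dashes
}

// Step 4: run the test immediately after the code is generated.
testSlugify();
```

The next increment (say, handling accented characters) would start with a new failing case added to `cases`, not with a broader rewrite of `slugify`.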

Tips and Tricks for Maximum Productivity

Here are some additional practical suggestions we’ve found indispensable.

Commit Early, Commit Often

I can’t stress this enough. If something works, save it with a git commit. It’s your safety net. We’ve lost hours to AI agents making sweeping changes across an uncommitted codebase. Undoing that mess is a nightmare. Frequent commits let you roll back to a known-good state instantly, which is essential when iterating with tools that can go rogue fast.

Keep AI Context Sharp

Reference files explicitly (using `@`), refresh the context regularly, and use `.cursorignore` to exclude irrelevant noise.

Reference Open Editors

Quickly add open editors to context for fast iterations.

Store Frequently Used Prompts

Keep reusable, proven prompts in Drive or a private repo for faster scaffold setup.

Rules for AI System Prompts in Cursor

Set clear, concise and effective AI prompt guidelines.

Keep Answers Concise

Ask for direct, actionable language. Concise answers lead straight to solutions, reduce cognitive load, and keep critical details from being buried in verbose or distracting prose.

Suggest Alternative Solutions

Have the AI proactively offer several viable approaches. This matters because it lets developers quickly weigh the options, assess trade-offs, and pick the most contextually appropriate solution, rather than being locked into the AI’s first, and potentially suboptimal, recommendation.

Prioritize Technical Detail

Avoid generic advice. Always favor specificity.

Avoid Unnecessary Explanations

Less fluff, more substance. For instance, rather than explaining basic JavaScript syntax or well-known React principles every time it suggests code, the agent should skip straight to the specific advice that matters in context. That is far more helpful and efficient.

Effective Troubleshooting Practices

When encountering stubborn problems, try this:

Ask Cursor to summarize all involved files and pinpoint where things break.

Use tools like gitingest.com to consolidate scripts and configs into a single, prompt-friendly text digest for easier debugging.

Pull in up-to-date library documentation via https://context7.com/, which serves it through an MCP server so the AI works from current practices.

Security Without the Overhead

For security checks, we use straightforward bash scripts to catch secrets before commits:

if grep -RE --exclude-dir=node_modules --exclude-dir=.git --exclude-dir=.husky "(AWS|AIza|sk_live|SECRET_KEY)" .; then echo "Potential secret exposed!"; exit 1; fi

This simple pre-commit hook has saved us countless times. It blocks accidental commits of sensitive data such as API keys and secret tokens, which, if exposed publicly, could lead to serious security vulnerabilities, downtime, or costly breaches.
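One possible refinement, sketched here as an assumption rather than what our hook does: scan only the lines being added in the staged diff, so files that were never going to be committed can’t trigger false alarms. `findSecrets` is a hypothetical helper that takes unified diff text, such as the output of `git diff --cached`, and reuses the patterns from the grep command.

```typescript
// The same patterns as the grep hook; extend the list for the providers you use.
const SECRET_PATTERNS: RegExp[] = [/AWS/, /AIza/, /sk_live/, /SECRET_KEY/];

export interface SecretHit {
  line: number; // 1-based line number within the diff text
  pattern: string; // source text of the pattern that matched
}

// Scans unified diff text, checking only added lines.
export function findSecrets(diff: string): SecretHit[] {
  const hits: SecretHit[] = [];
  diff.split("\n").forEach((content, index) => {
    // Added lines start with "+"; "+++" marks a file header, not content.
    if (!content.startsWith("+") || content.startsWith("+++")) return;
    for (const pattern of SECRET_PATTERNS) {
      if (pattern.test(content)) hits.push({ line: index + 1, pattern: pattern.source });
    }
  });
  return hits;
}
```

Removed lines and unchanged context are ignored on purpose: deleting a leaked key from the working tree shouldn’t block the commit that removes it.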

Measuring What Actually Matters

Our improved workflow delivered measurable benefits that matter to small teams.

Idea-to-production time dropped from about six hours to about four.

Deploy frequency rose from about five deployments per week to roughly nine.

Post-deploy hotfixes dropped from seven per month to just one.

Late-night emergencies fell from three or four per month to zero or one, making them rare.
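Expressed as relative change against the “before” value, those figures work out to roughly a 33% cut in idea-to-production time, about 80% more deploys, and about 86% fewer hotfixes. A quick sketch of that arithmetic, using only the numbers reported above (the late-night emergency figures are ranges, so they are left out):

```typescript
// Before/after figures as reported above (approximate values).
const metrics = [
  { name: "Idea to production (hours)", before: 6, after: 4 },
  { name: "Deploys per week", before: 5, after: 9 },
  { name: "Post-deploy hotfixes per month", before: 7, after: 1 },
];

// Relative change as a percentage of the "before" value.
export function percentChange(before: number, after: number): number {
  return ((after - before) / before) * 100;
}

for (const m of metrics) {
  console.log(`${m.name}: ${percentChange(m.before, m.after).toFixed(1)}%`);
}
```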

Turn AI Tools Into Reliable Allies

The lesson for small teams is that AI coding assistants aren’t foolproof, but they’re incredibly powerful when wielded responsibly. By clearly structuring prompts, disabling risky automation features, maintaining tight CI, and keeping vigilant human oversight, we turned Cursor and Windsurf from unpredictable disruptors into dependable coding allies.

AI won’t replace you. But if you don’t learn how to wield it precisely, it will waste your time. For lean teams, discipline isn’t optional. It’s how you sleep at night.