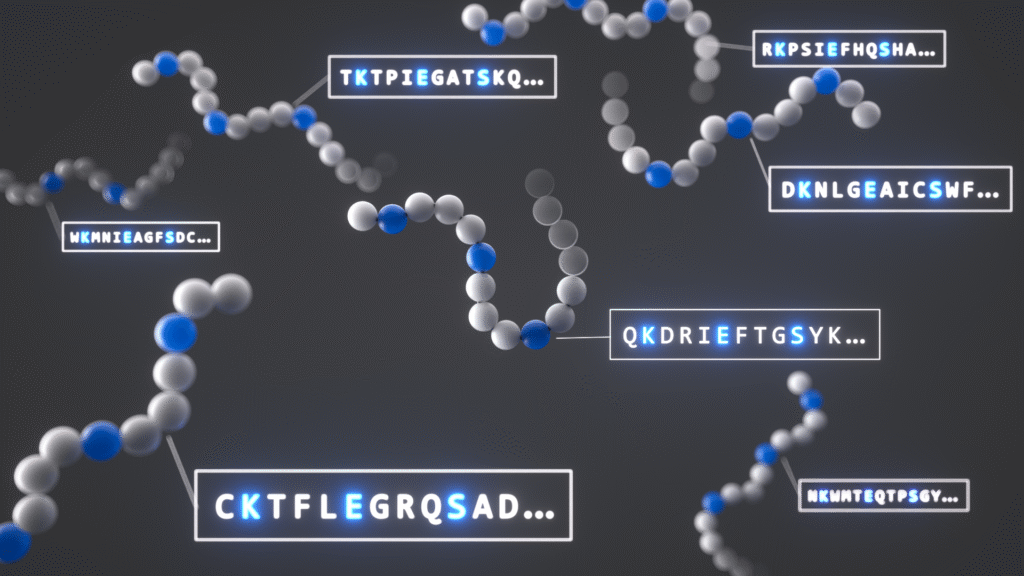

Advances in AI are opening extraordinary frontiers in biology. AI-assisted protein engineering holds the promise of new medicines, materials, and breakthroughs in scientific understanding. Yet these same technologies also introduce biosecurity risks and may lower barriers to designing harmful toxins or pathogens. This “dual-use” potential, where the same knowledge can be harnessed for good or misused to cause harm, poses a critical dilemma for modern science.

Great Promise—and Potential Threat

I’m excited about the potential for AI-assisted protein design to drive breakthroughs in biology and medicine. At the same time, I’ve also studied how these tools could be misused. In computer-based studies, we found that AI protein design (AIPD) tools could generate modified versions of proteins of concern, such as ricin. Alarmingly, these reformulated proteins were able to evade the biosecurity screening systems used by DNA synthesis companies, which scientists rely on to synthesize AI-generated sequences for experimental use.

In our paper published in Science on October 2, “Strengthening nucleic acid biosecurity screening against generative protein design tools,” we describe a two-year confidential project we began in late 2023 while preparing a case study for a workshop on AI and biosecurity.

We worked confidentially with partners across organizations and sectors for 10 months to develop AI biosecurity “red-teaming” methods that allowed us to better understand vulnerabilities and craft practical solutions: “patches” that have now been adopted globally, making screening systems significantly more AI-resilient.

![An illustration of the AI Protein Design red-teaming workflow. [starting at the left] an icon of a database with the heading above that reads: Database of Wild-Type Proteins of Concern. [arrow moves right] Above the arrow the text reads: Generate Synthetic Homologs (x) Conditioned on Wild Types (y). P(x|y) appears below the arrow. [continuing to the right] a computer monitor icon with protein sequences on the screen appears in brackets with N appearing outside the bottom of the right bracket. The text above the computer screen reads: “N” Synthetic Homologs per Wild-Type. [arrows move to the right and fork to an upper arrow and a lower arrow] The text above the upper arrow reads Reverse Translate and the arrow points to a computer monitor icon with a DNA icon on the screen. [upper arrow continues to the right] The arrow points to a computer monitor icon with the text Hazard Screening appearing above and a biohazard icon and a question mark appearing on the screen. [lower arrow moves to the right] A computer monitor icon includes a paraphrased toxin sequence versus a protein sequence on the computer screen. Above the monitor the text reads: Score in silico. [lower arrow continues to the right] An illustration provides an example of the evaluation results (see also table S1 in the paper) tracking the number of flagged sequences (y-axis) and hazardous sequences (x-axis). [the lower arrow moves up to the Hazard Screening step (from the upper arrow process) and another arrow moves from the Hazard Screening to the evaluation results illustration.] There is a dotted line with the words Repeat Process moving from the Evaluation illustration to the left and back to the database.](https://www.microsoft.com/en-us/research/wp-content/uploads/2025/10/Fig1_Biohazard.png)

For the structure, methods, and process of our study, we took inspiration from the cybersecurity community, where “zero-day” vulnerabilities are kept confidential until a protective patch is developed and deployed. After a small group of workshop attendees acknowledged a zero-day for AI in biology, we worked closely with stakeholders, including synthesis companies, biosecurity organizations, and policymakers, to rapidly create and distribute patches that improved detection of AI-redesigned protein sequences. We delayed public disclosure until protective measures were in place and widely adopted.

Dilemma of Disclosure

The dual-use dilemma also complicates how we share information about vulnerabilities and safeguards. Across AI and other fields, researchers face a core question:

How can scientists share potentially risk-revealing methods and results in ways that enable progress without offering a roadmap for misuse?

We recognized that our work itself—detailing methods and failure modes—could be exploited by malicious actors if published openly. To guide decisions about what to share, we held a multi-stakeholder deliberation involving government agencies, international biosecurity organizations, and policy experts. Opinions varied: some urged full transparency to maximize reproducibility—and to help others to build on our work; others stressed restraint to minimize risk. It was clear that a new model of scientific communication was needed, one that could balance openness and security.

The Novel Framework

The risk of sharing dangerous information through biological research has become a growing concern. We have participated in community-wide discussions of these challenges, including a recent National Academies of Sciences, Engineering, and Medicine workshop and study.

In preparing our manuscript for publication, we designed a process to limit the spread of dangerous information while still enabling scientific progress.

To address these dual challenges, we devised a tiered access system for data and methods, implemented in partnership with the International Biosecurity and Biosafety Initiative for Science (IBBIS), a nonprofit dedicated to advancing science while reducing catastrophic risks. The system works as follows:

Controlled access: Researchers can request access through IBBIS, providing their identity, affiliation, and intended use. Requests are reviewed by an expert biosecurity committee, ensuring that only legitimate scientists conducting relevant research gain access.

Stratified tiers of information: Data and code are classified into several tiers according to their potential hazard, from low-risk summaries through sensitive technical data to critical software pipelines.

Safeguards and agreements: Approved users sign tailored usage agreements, including non-disclosure terms, before receiving data.

Resilience and longevity: Provisions are built in for declassification when risks subside, and for succession of stewardship to trusted organizations should IBBIS be unable to continue its operation.

This framework allows replication and extension of our work while guarding against misuse. Rather than relying on secrecy, it provides a durable system of responsible access.
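
The steps above can be sketched in code. The following Python is a minimal illustration only, not the IBBIS process or any real system: the tier names, the `AccessRequest` fields, and the `review_request` function are all hypothetical, and the committee's judgment is modeled simply as an input (the highest tier the committee approved, or `None` if the request was declined).

```python
from dataclasses import dataclass
from enum import IntEnum
from typing import Optional


class Tier(IntEnum):
    """Hypothetical hazard tiers, ordered from least to most sensitive."""
    SUMMARY = 1         # low-risk summaries
    TECHNICAL_DATA = 2  # sensitive technical data
    PIPELINE = 3        # critical software pipelines


@dataclass(frozen=True)
class AccessRequest:
    """What a requester provides: identity, affiliation, and intended use."""
    name: str
    affiliation: str
    intended_use: str
    requested_tier: Tier


@dataclass(frozen=True)
class Decision:
    approved: bool
    reason: str


def review_request(request: AccessRequest,
                   committee_approved_tier: Optional[Tier],
                   agreement_signed: bool) -> Decision:
    """Combine the checks described in the text into one release decision."""
    # Controlled access: identity, affiliation, and intended use are required.
    fields = (request.name, request.affiliation, request.intended_use)
    if not all(field.strip() for field in fields):
        return Decision(False, "incomplete request")
    # Expert review is a human judgment; this code only records its outcome.
    if committee_approved_tier is None:
        return Decision(False, "not approved by committee")
    # Stratified tiers: access never exceeds the tier the committee approved.
    if request.requested_tier > committee_approved_tier:
        return Decision(False, "requested tier exceeds approved tier")
    # Safeguards: a signed usage agreement is required before release.
    if not agreement_signed:
        return Decision(False, "usage agreement not signed")
    return Decision(True, "release permitted")
```

Ordering the tiers as integers keeps the comparison between requested and approved tiers to a single line. A real system would also need provisions the sketch omits, such as declassification and succession of stewardship.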

To ensure continued funding for the storage and responsible distribution of sensitive data and software, and for the operation of the sharing program, we provided an endowment to IBBIS to support the program in perpetuity. This approach was modeled after the One Hundred Year Study on AI at Stanford, which is endowed to continue for the life of the university.

An Important Step in Scientific Publishing

We are pleased that the leadership at Science accepted our approach to handling information hazards. To our knowledge, this is the first time a leading scientific journal has formally endorsed a tiered-access approach to manage an information hazard. This recognition validates the idea that rigorous science and responsible risk management can coexist, and that journals, too, can play a role in shaping how sensitive knowledge is shared. We acknowledge the visionary leadership at Science, including editors Michael Funk and Valda Vinson and Editor-in-Chief Holden Thorp.

Beyond Biology: A Model for Sensitive Research

While developed for AI-powered protein design, our approach offers a generalizable model for dual-use research of concern (DURC) across disciplines. Whether in biology, chemistry, or emerging technologies, scientists will increasingly confront situations where openness and security pull in opposite directions. Our experience shows that these values can be balanced: with creativity, coordination, and new institutional mechanisms, science can uphold both reproducibility and responsibility.

We hope this framework becomes a template for future projects, offering a way forward for researchers who wish to share their insights without amplifying risks. By embedding resilience into how knowledge is communicated—not just what is communicated—we can ensure that scientific progress continues to serve humanity safely.

The responsible management of information hazards is no longer a peripheral concern: it is central to how science will advance in the age of powerful technologies like AI. This approach to managing information hazards demonstrates a path forward, where novel frameworks for access and stewardship allow sensitive but vital research to be shared, scrutinized, and extended responsibly. Approaches like this will be critical to ensuring that scientific openness and societal safety advance hand in hand.

Additional reading

Strengthening nucleic acid biosecurity screening against generative protein design tools, Science, 2025.

The Age of AI in the Life Sciences: Benefits and Biosecurity Considerations, National Academies of Sciences, Engineering, and Medicine, 2025.

Disseminating In Silico and Computational Biological Research: Navigating Benefits and Risks: Proceedings of a Workshop, National Academies of Sciences, Engineering, and Medicine, 2025.

Protecting scientific integrity in an age of generative AI, Proceedings of the National Academy of Sciences, 2024.