We dig into the implications of China-based DeepSeek’s rapid ascent for the AI infrastructure landscape, the private sector, and enterprise AI strategies.

China’s DeepSeek has upended assumptions about what it takes to develop powerful AI models.

The AI company, which emerged from Liang Wenfeng’s hedge fund High-Flyer, released an open-source reasoning model (named R1) in January 2025 that rivals the performance of OpenAI’s o1 reasoning model.

DeepSeek says it trained its base model with limited chips and about $5.6M worth of computing power, a fraction of the $100M+ that US rivals have spent training similar models, thanks to several clever techniques.

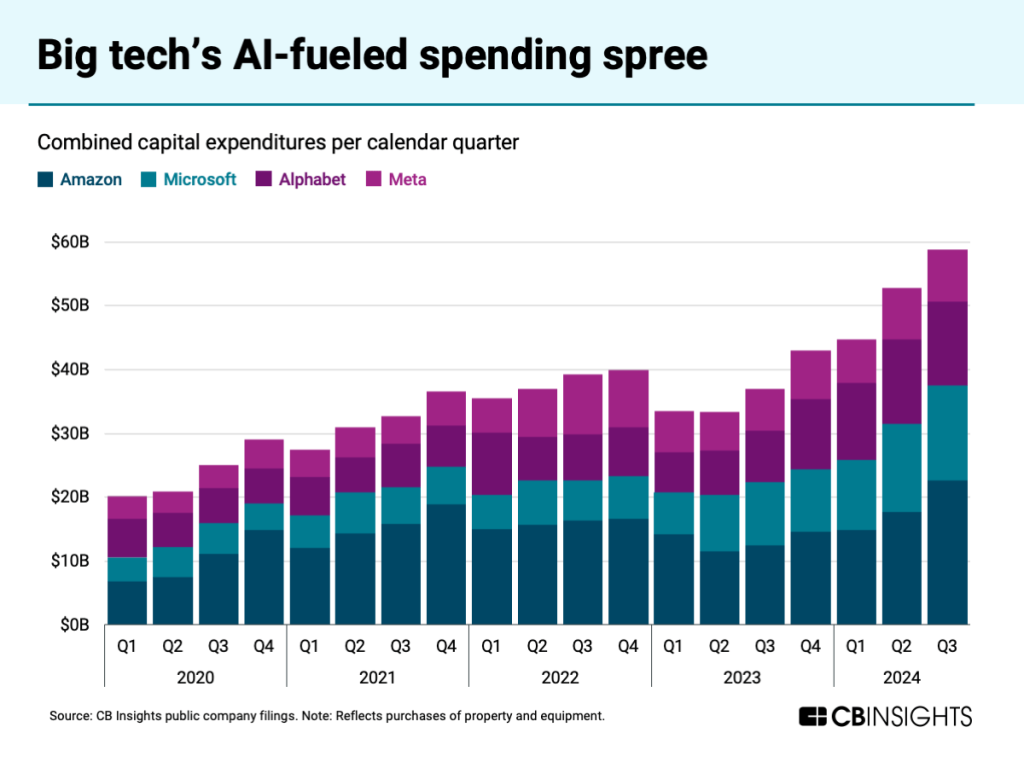

That efficiency has raised questions about the scale of US companies’ AI investments. Increased spend on AI infrastructure has driven big tech’s combined capex past $50B in recent quarters, while venture investors poured $76.3B into US AI startups in 2024 alone, per CB Insights data.

Below, we dive into 5 key trends highlighted by DeepSeek’s rise:

AI infrastructure costs come under scrutiny

VCs and private AI sector face recalibration

Amid funding gap in China, restrictions force innovative development

Open-source ecosystem gains steam, with China making strides

Enterprises rethink AI strategies for open models

1. AI infrastructure costs come under scrutiny

In the US, genAI development has raced ahead thanks to billions of dollars in funding.

Big tech companies have justified their AI infrastructure spend by pointing to the need for more hardware (like chips) and energy to train bigger, more performant models.

DeepSeek’s reported ability to develop similarly powerful models much more efficiently (though questions remain about the true cost of its development) is upsetting these assumptions.

It’s also sending shockwaves through the public markets. On Monday, January 27, Nvidia’s stock fell more than 15% as investors reacted to R1. Other stocks linked to the AI value chain, including infrastructure suppliers like Oracle and power producers like Constellation Energy, also saw steep declines.

But in the long run, as AI infrastructure and operational costs decline, major tech companies stand to benefit from the expanding market. The decreasing cost barriers will accelerate enterprise AI adoption, allowing cloud providers like Microsoft and Amazon to capture growing demand through more competitively priced AI services.

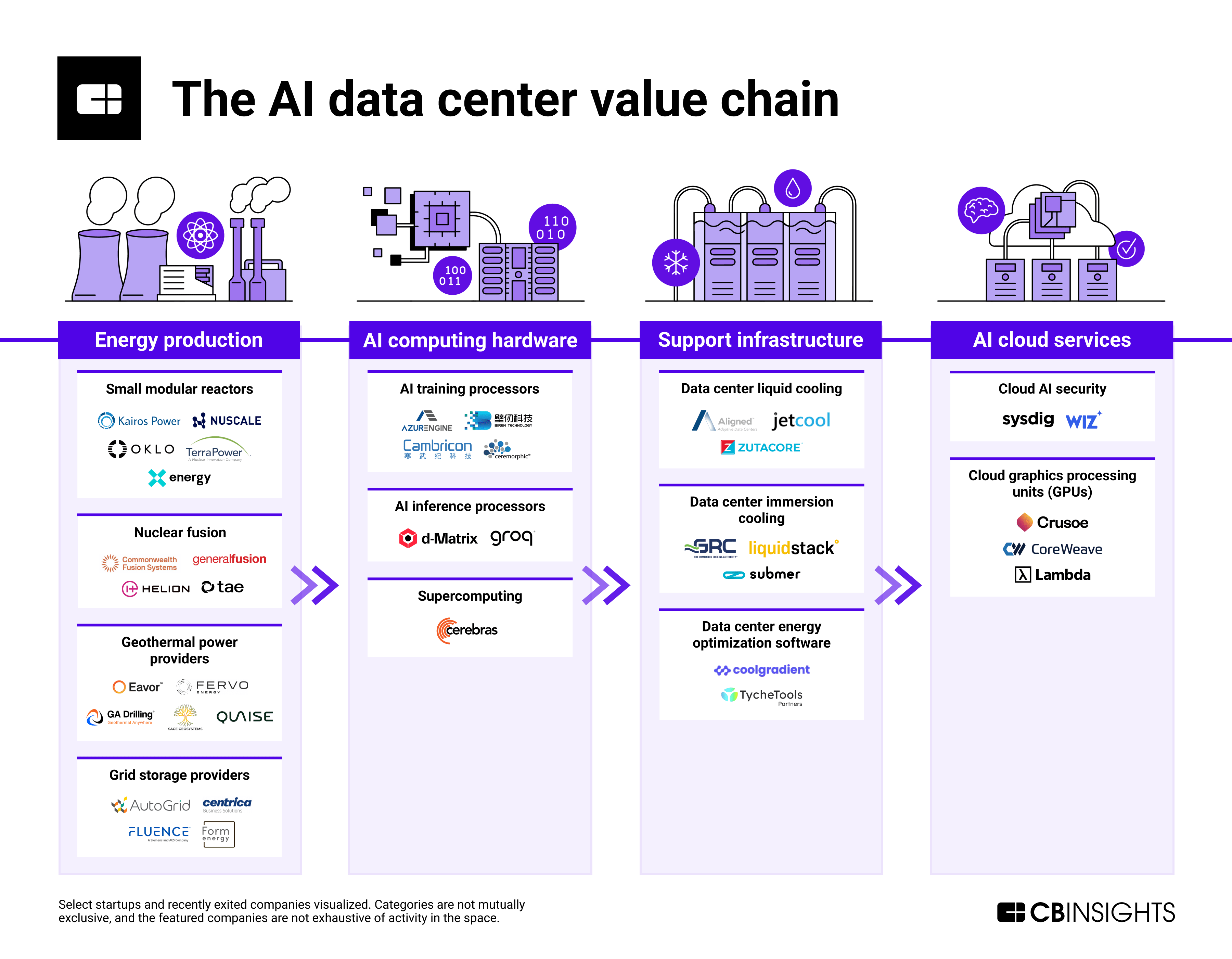

Increasing AI usage would also benefit companies at the inference layer. While Nvidia, AMD, and Intel dominate the market for AI inference processors (chips that run already-trained models), startups like d-Matrix and Groq are making progress, particularly in power efficiency. In July 2024, Groq raised a $640M Series D.

2. VCs and private AI sector face recalibration

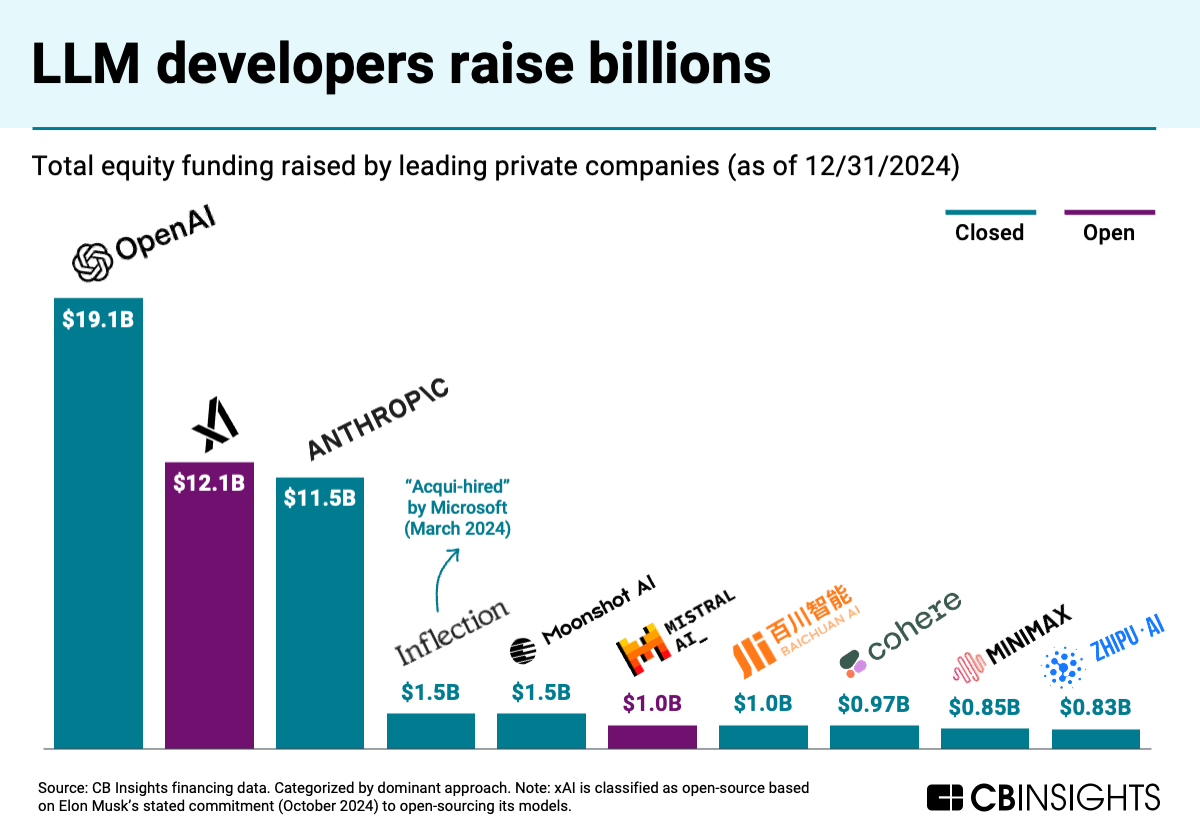

DeepSeek’s advances could undercut the case for the vast sums of money that have gone to foundation model developers. OpenAI and Anthropic alone have raised over $30B in funding.

Going forward, it may be harder for AI startups to justify raising huge funding rounds to support their infrastructure buildouts.

At the same time, US developers maintain an advantage in compute and data, and they will likely adopt DeepSeek’s architecture changes. This may widen their performance lead if they can combine their superior resources with new efficiency gains.

DeepSeek also appears to have relied on outputs from OpenAI models to train its models, which would violate OpenAI’s terms of service — DeepSeek’s chatbot even self-identifies as an OpenAI product. Without OpenAI’s releases (including its o1 reasoning model), DeepSeek’s R1 model likely wouldn’t have emerged.

3. Amid funding gap in China, restrictions force innovative development

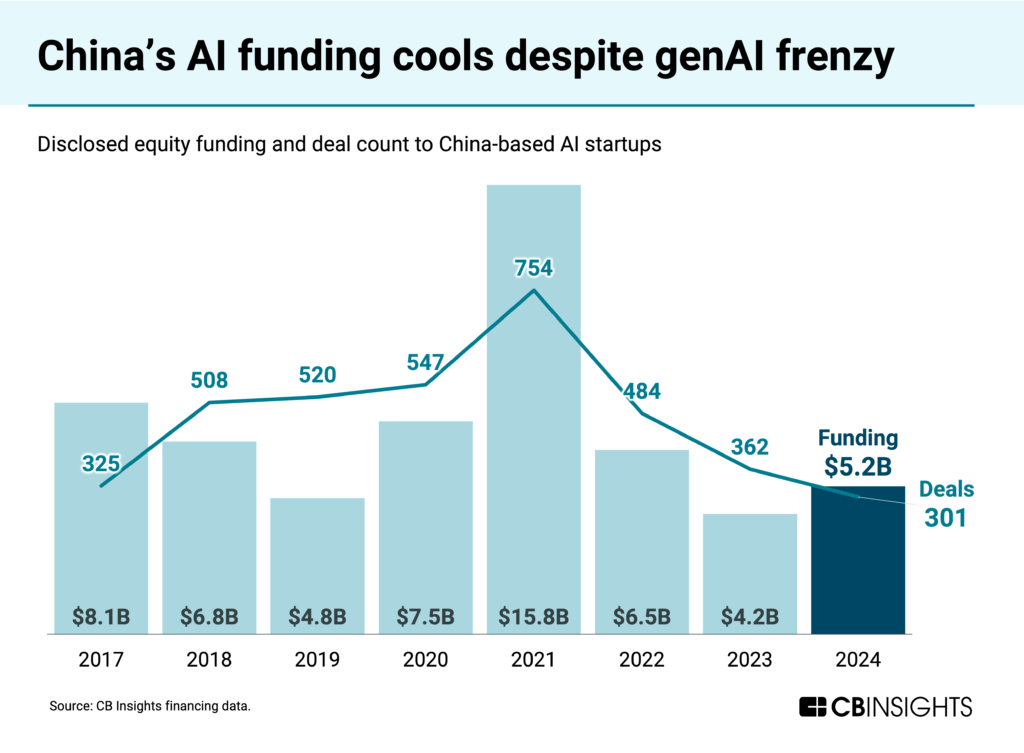

AI startup funding in China is a fraction of what US AI startups raise: China saw $5.2B in AI funding in 2024, or 7% of the US’ $76.3B.

That funding has also cooled as the Chinese government has cracked down on the country’s private sector in recent years.

The US has also put caps on exports of advanced AI chips, like those from Nvidia, which are key to model development.

The crunch has forced companies like DeepSeek to get creative. (Note: High-Flyer is estimated to have acquired anywhere from 10K to 50K Nvidia A100 chips before the export restrictions took effect, and those chips helped launch DeepSeek.)

Rather than relying on more powerful hardware (like H100 chips), DeepSeek focused on making extremely efficient use of more constrained hardware when developing its 671B-parameter V3 model, optimizing at multiple levels, from model architecture to low-level GPU programming. This demonstrated that superior results could be achieved through better engineering, not just more powerful hardware.

Meanwhile, its R1 model (which uses V3 as the base model) showcased how reinforcement learning without extensive labeled data could achieve high-quality reasoning capabilities, challenging the notion that expensive training with human feedback was necessary for strong performance.
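To make the idea of reinforcement learning without extensive labeled data more concrete, here is a minimal Python sketch of one way it can work: the model samples several answers to the same question, a simple rule-based check scores each answer automatically, and each answer is then judged relative to the rest of its group. It is loosely modeled on the group-relative approach (GRPO) described in DeepSeek’s published research, but the function names, the `<think>`/`<answer>` format, and the reward values are illustrative assumptions rather than DeepSeek’s actual code, and the step that updates the model’s weights is omitted entirely.

```python
import re
import statistics


def rule_based_reward(completion: str, reference_answer: str) -> float:
    """Hypothetical reward: 1.0 if the text inside <answer>...</answer> matches
    the known-correct answer, plus a 0.1 bonus for using the expected format.
    Scoring is automatic, so no human labeling of each response is needed."""
    match = re.search(r"<answer>(.*?)</answer>", completion, re.DOTALL)
    format_bonus = 0.1 if match else 0.0
    correct = 1.0 if match and match.group(1).strip() == reference_answer else 0.0
    return correct + format_bonus


def group_relative_advantages(rewards: list[float]) -> list[float]:
    """Normalize each reward against its group's mean and standard deviation.
    Above-average answers get positive scores (behavior to reinforce);
    below-average answers get negative scores (behavior to discourage)."""
    mean = statistics.mean(rewards)
    std = statistics.pstdev(rewards)
    if std == 0:  # every answer scored the same, so there is no learning signal
        return [0.0 for _ in rewards]
    return [(r - mean) / std for r in rewards]


# Illustrative group of four sampled completions for "What is 17 * 3?"
completions = [
    "<think>17 * 3 = 51</think><answer>51</answer>",
    "<think>17 * 3 = 41</think><answer>41</answer>",
    "The answer is 51",  # right number, but not in the expected format
    "<think>17 * 3 = 51</think><answer>51</answer>",
]
rewards = [rule_based_reward(c, "51") for c in completions]
advantages = group_relative_advantages(rewards)
print(rewards)
print([round(a, 2) for a in advantages])
```

The design choice worth noting is that the comparison happens within each group of answers, so the sketch needs no separately trained judge model and no human-written preference labels; a correct, well-formatted answer simply scores above its siblings.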

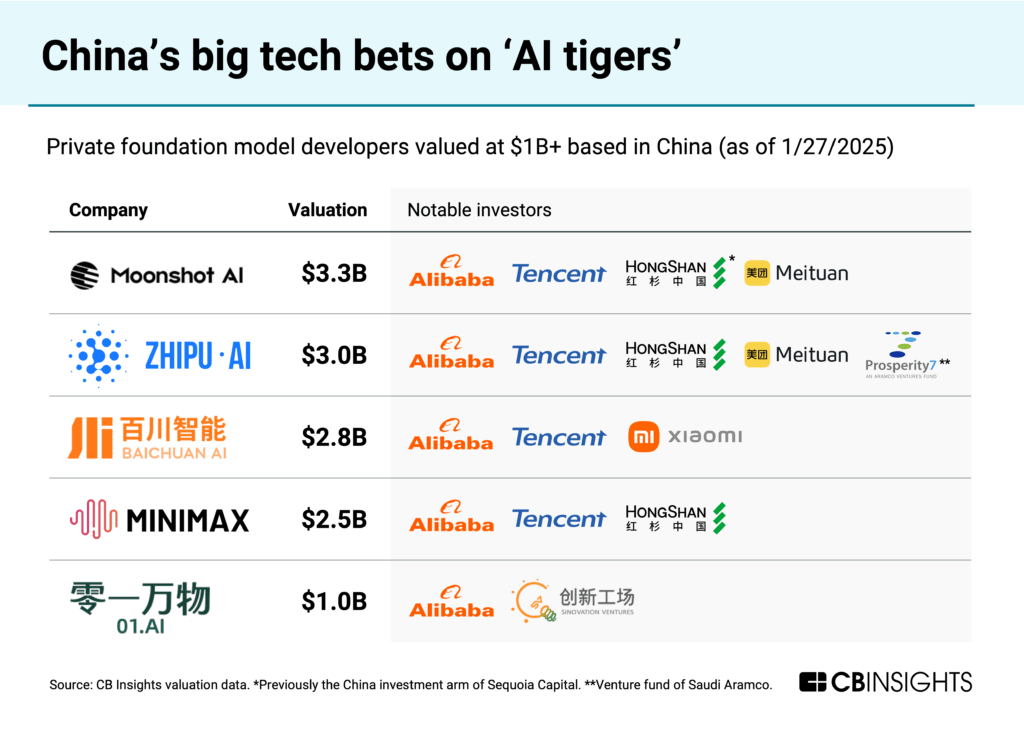

Other companies to watch in China include the “AI tigers” Moonshot AI, Zhipu AI, Baichuan AI, MiniMax, and 01.AI, all of which have reached $1B+ valuations with backing from China’s big tech companies.

China’s AI ecosystem is also worth watching for the applications that eventually take root, especially any that become “killer” consumer apps.

For example, MiniMax has released AI apps in the US featuring avatar chatbots and video generation tools (though its app Talkie was removed from Apple’s App Store in December). In another sign that Chinese AI startups are pushing into the US consumer market, DeepSeek’s mobile app is currently the top free app on Apple’s US App Store.

4. Open-source ecosystem gains steam, with China making strides

DeepSeek’s advances also give more fuel to the open-source movement — highlighting that open, frontier models can be developed with more modest resources and computing infrastructure.

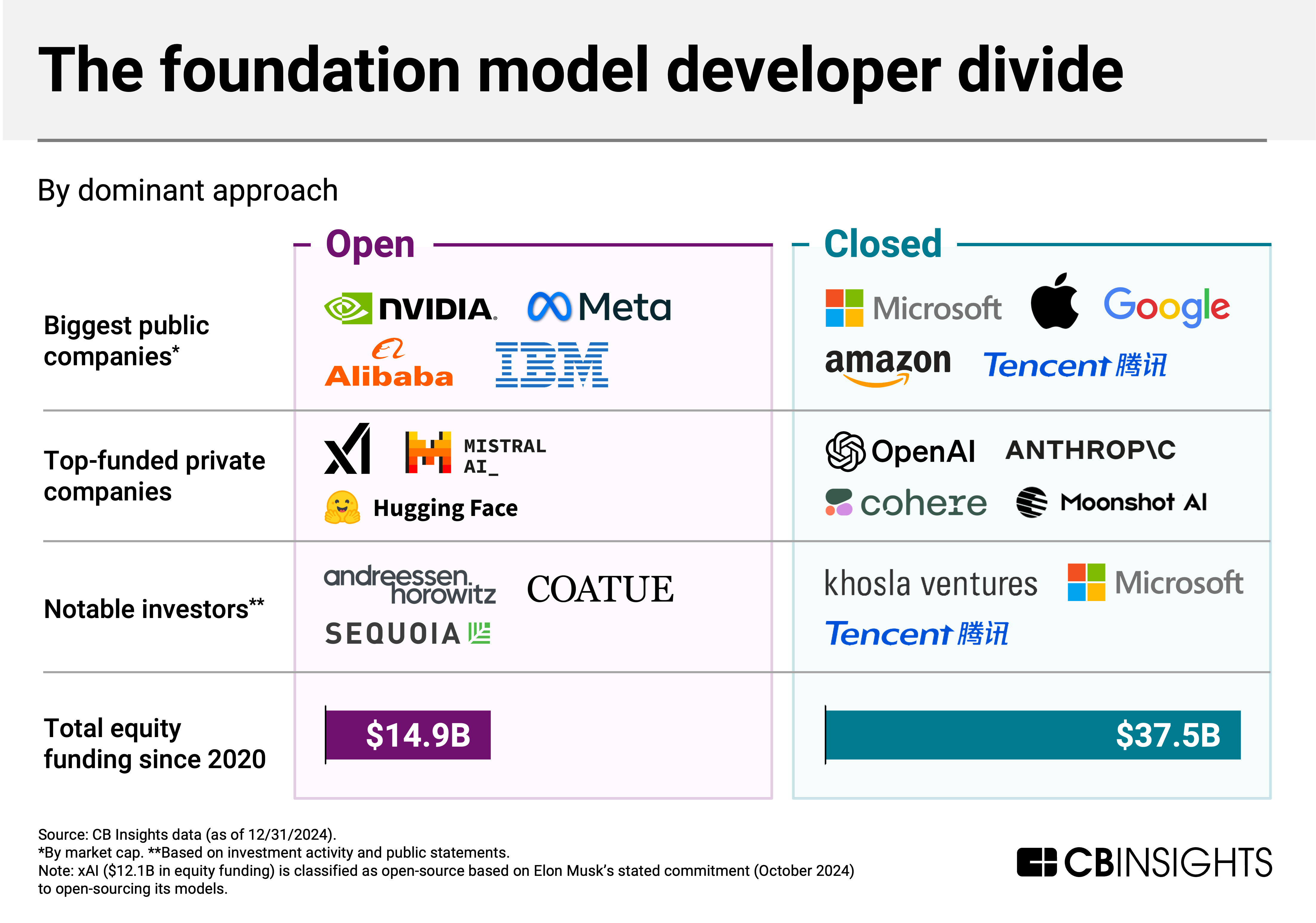

We previously dug into how the assumption of increasing model training costs was tipping the model race in favor of closed-source developers. Since 2020, closed-source AI model developers have secured $37.5B in venture funding, while open-source developers have trailed with $14.9B. However, DeepSeek’s efficient training approach challenges the assumption that massive funding is necessary for competitive model development.

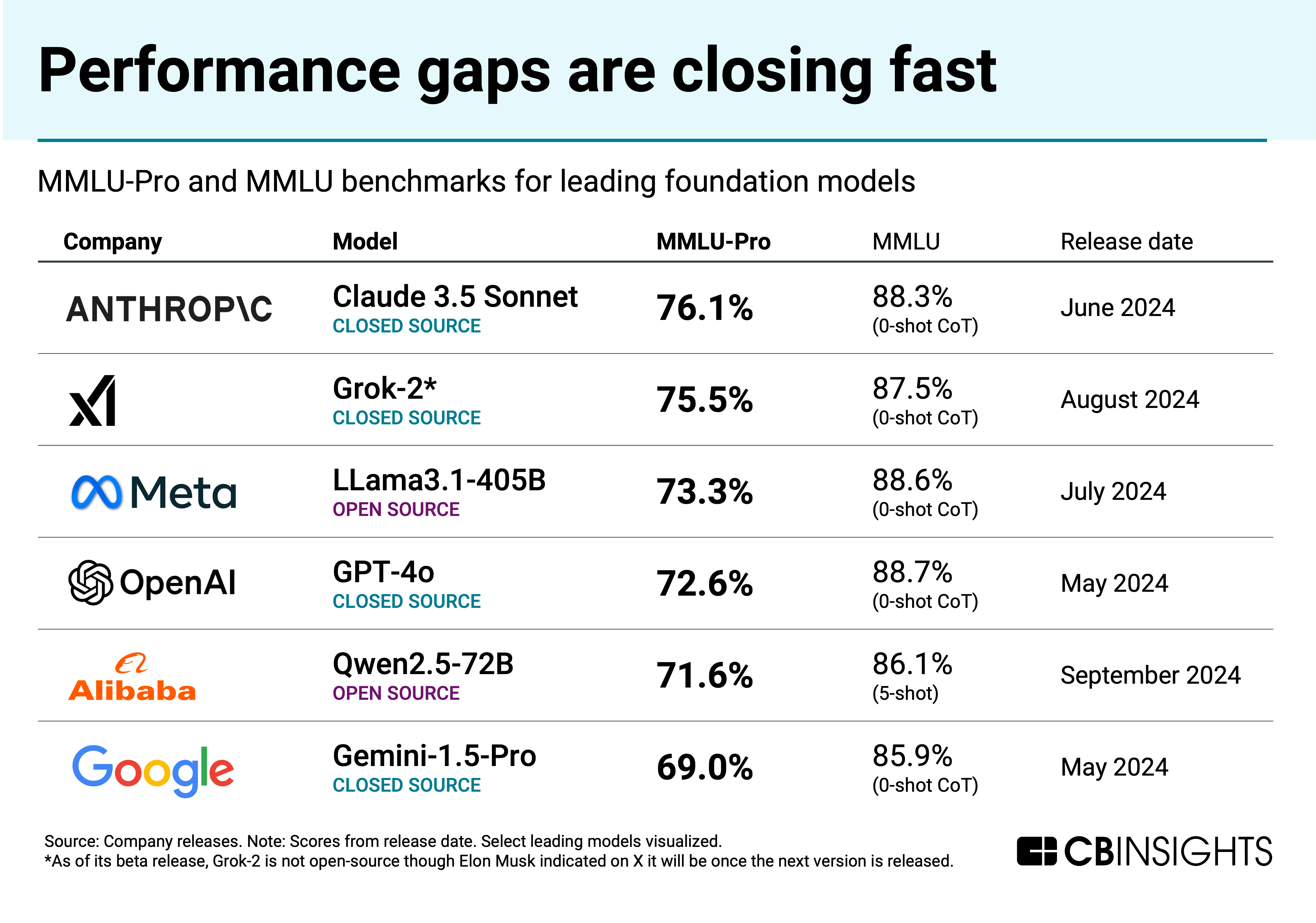

Overall, in the last few months, China has been closing the performance gap with US rivals, especially among open-source models. Based on our analysis from earlier this year, Alibaba’s open-source Qwen2.5 had made it onto the leaderboard, alongside primarily closed-source models from US developers. Today, when including reasoning models, DeepSeek’s R1 takes the top spot, followed by OpenAI’s o1-mini.

If China continues to contribute top-ranking open-source models, that could encourage US developers to build on top of Chinese technology, increasing China’s importance in the AI development landscape.