Fast-food restaurants are becoming proving grounds for conversational AI’s next evolution. We break down the rapid growth of the voice AI market — as well as remaining hurdles to adoption.

This research comes from the April 1 edition of the CB Insights newsletter.

It looks like voice AI may have found its sweet spot: ordering fries with that.

Yum! Brands — which owns Taco Bell, KFC, and Pizza Hut and has a larger restaurant footprint than any other company globally — recently announced a partnership with Nvidia to deploy AI (including AI voice ordering) throughout hundreds of restaurants starting in April.

Similarly, Jersey Mike’s Subs has partnered with SoundHound on a 50-store pilot of AI voice ordering, while Wendy’s now uses Google Cloud LLMs to process orders in English and Spanish.

Voice AI stands to reduce labor costs in high-turnover positions while also increasing order throughput and accuracy. It also means staff can be redeployed to food preparation or customer service roles that drive higher satisfaction.

But fast food is just the tip of the iceberg for voice AI.

Below, we get into why voice AI matters, market maturity, and challenges to adoption.

Why does voice AI matter?

For customer interactions, voice conversations offer a far more expressive mode of communication than text-based channels.

Yet the industry remains stuck in a purgatory of robo-call decision trees and endless holds. 62% of customer calls to SMBs go unanswered, while upwards of 70% of business calls that connect still end up putting customers on hold, with most hanging up within minutes.

Advances in AI speech models could break this cycle. Voice AI models are shifting toward processing audio directly — rather than needing to translate it to text, process it using an LLM, then convert it back into speech — and are getting closer to the cadence of human conversation (<300ms latency).
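To make the latency point concrete, here is a rough sketch of why a cascaded pipeline (speech-to-text, then an LLM, then text-to-speech) tends to overshoot a 300ms conversational budget, while a single model that processes audio directly has a better chance of staying under it. Every stage timing below is an illustrative assumption chosen for the example, not a measurement from this research or from any vendor.

```python
# Illustrative latency budget: cascaded pipeline vs. direct speech-to-speech.
# All stage timings are made-up placeholders, not benchmarks.

CONVERSATIONAL_BUDGET_MS = 300  # the <300ms threshold cited in the text

# Hypothetical per-stage latencies for a cascaded pipeline (assumed values).
CASCADED_STAGES_MS = {
    "speech_to_text": 150,
    "llm_response": 250,
    "text_to_speech": 120,
}

# Hypothetical latency for a single model that processes audio directly.
DIRECT_STAGES_MS = {
    "speech_to_speech": 280,
}


def total_latency_ms(stages: dict[str, int]) -> int:
    """Stages run one after another, so their latencies add up."""
    return sum(stages.values())


def within_budget(stages: dict[str, int],
                  budget_ms: int = CONVERSATIONAL_BUDGET_MS) -> bool:
    """Return True if end-to-end latency is under the conversational budget."""
    return total_latency_ms(stages) < budget_ms


if __name__ == "__main__":
    pipelines = [("cascaded", CASCADED_STAGES_MS), ("direct", DIRECT_STAGES_MS)]
    for name, stages in pipelines:
        total = total_latency_ms(stages)
        verdict = "within" if within_budget(stages) else "over"
        print(f"{name}: {total} ms ({verdict} the {CONVERSATIONAL_BUDGET_MS} ms budget)")
```

The structural point is that sequential stages add their delays together, so removing the hand-offs is one way to get closer to human conversational cadence. Real systems may also stream partial results between stages, which this sketch does not model.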

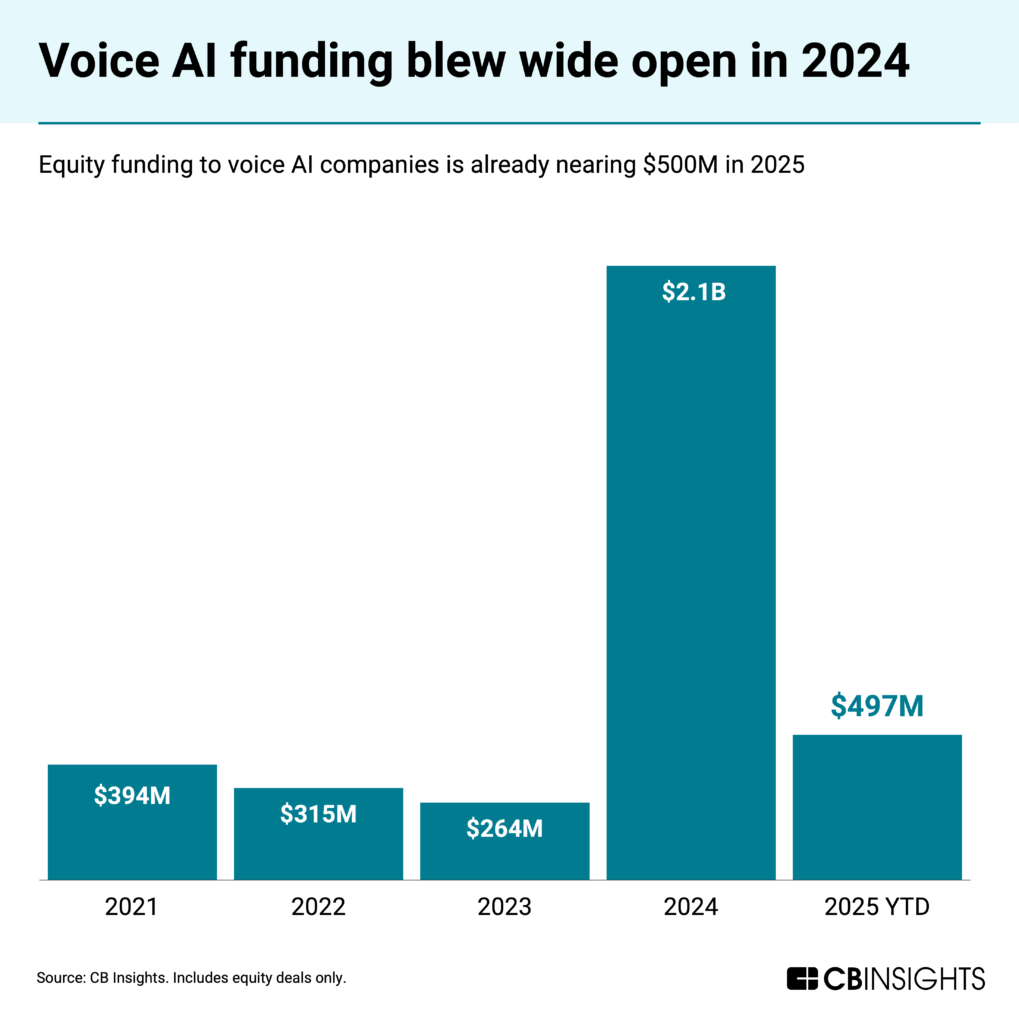

The progress has fueled a surge in equity funding to voice AI solutions, which grabbed $2.1B in 2024, per CB Insights’ funding data. Momentum has continued in 2025 so far, with companies raising nearly $500M in Q1’25.

ElevenLabs’ $180M round from investors including a16z, Salesforce Ventures, and Sequoia Capital was a big part of this year’s strong start. ElevenLabs has already hit $100M in ARR, just 3 years after its founding.

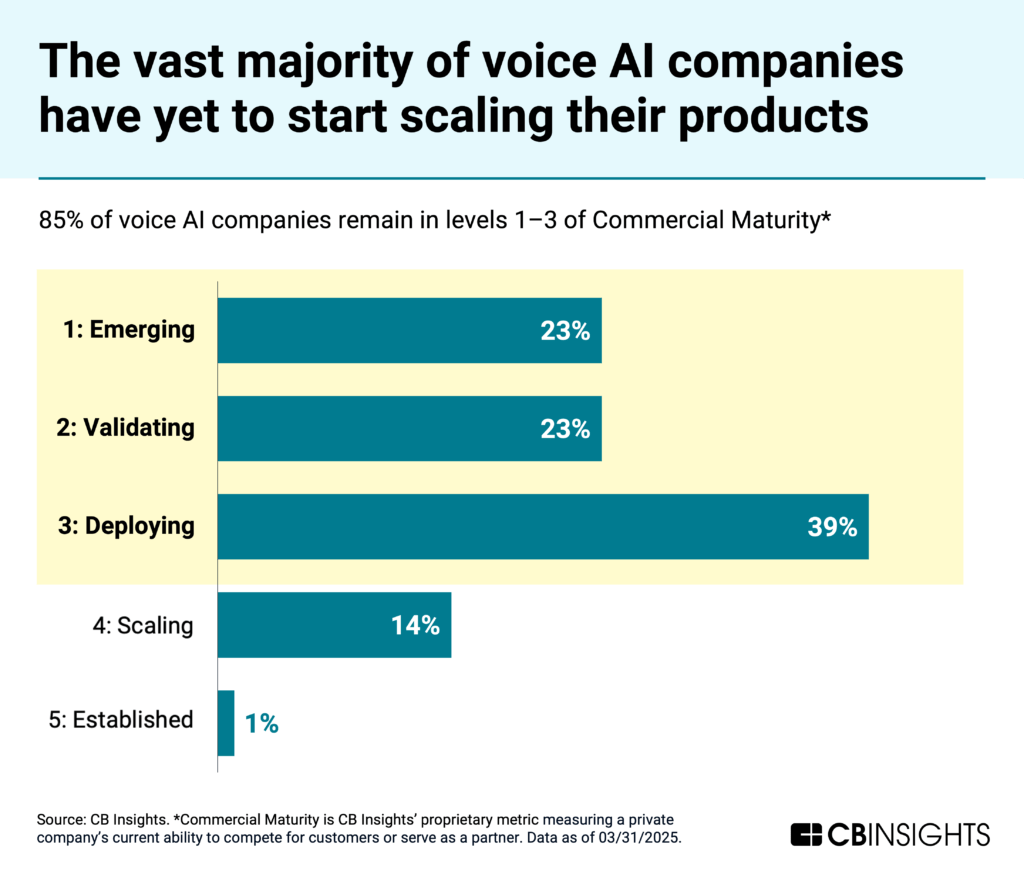

On the whole, though, the voice AI market remains in its early stages — and faces growing pains.

The market is still nascent

Most of the voice AI market remains in the earlier stages of commercial maturity, with 85% of companies in levels 1, 2, or 3 on CB Insights’ Commercial Maturity scale. More than half are still developing or validating their products, while 39% are beginning commercial distribution and starting to gain customers.

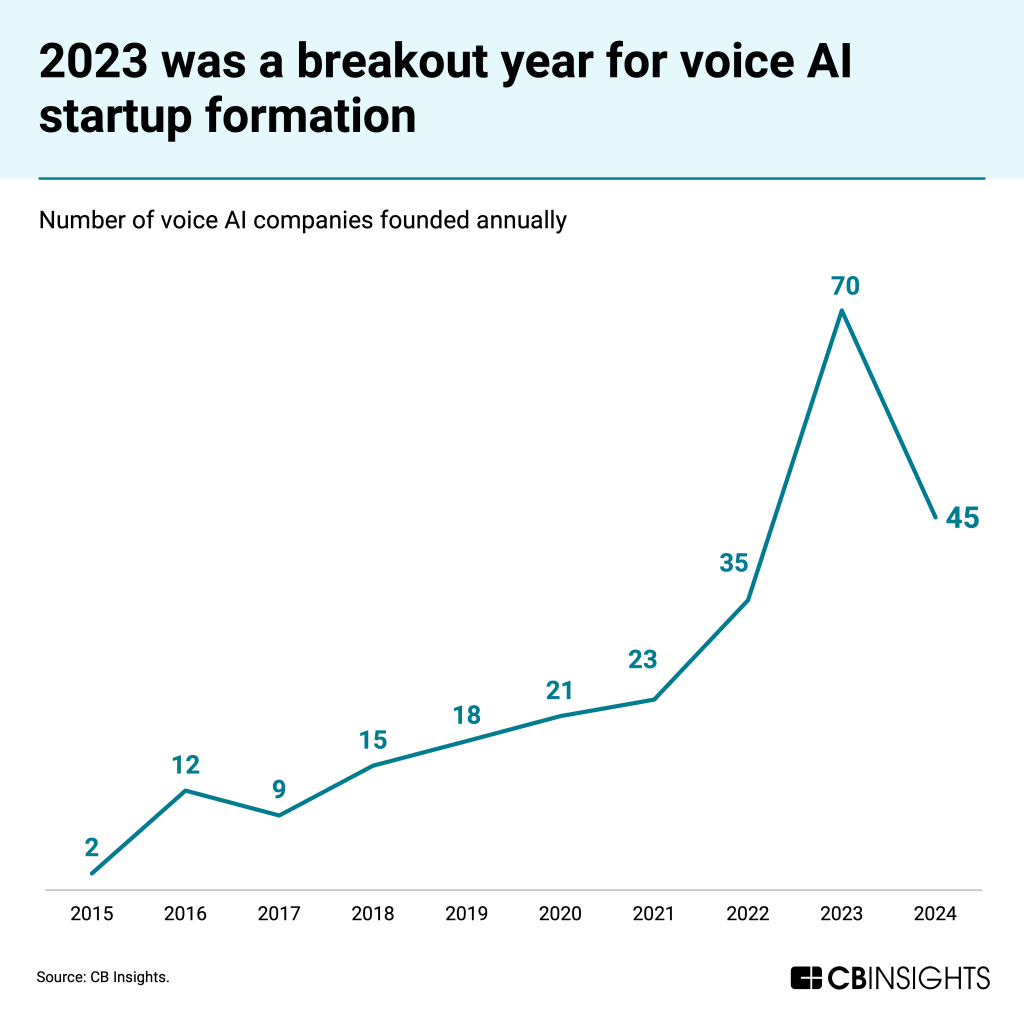

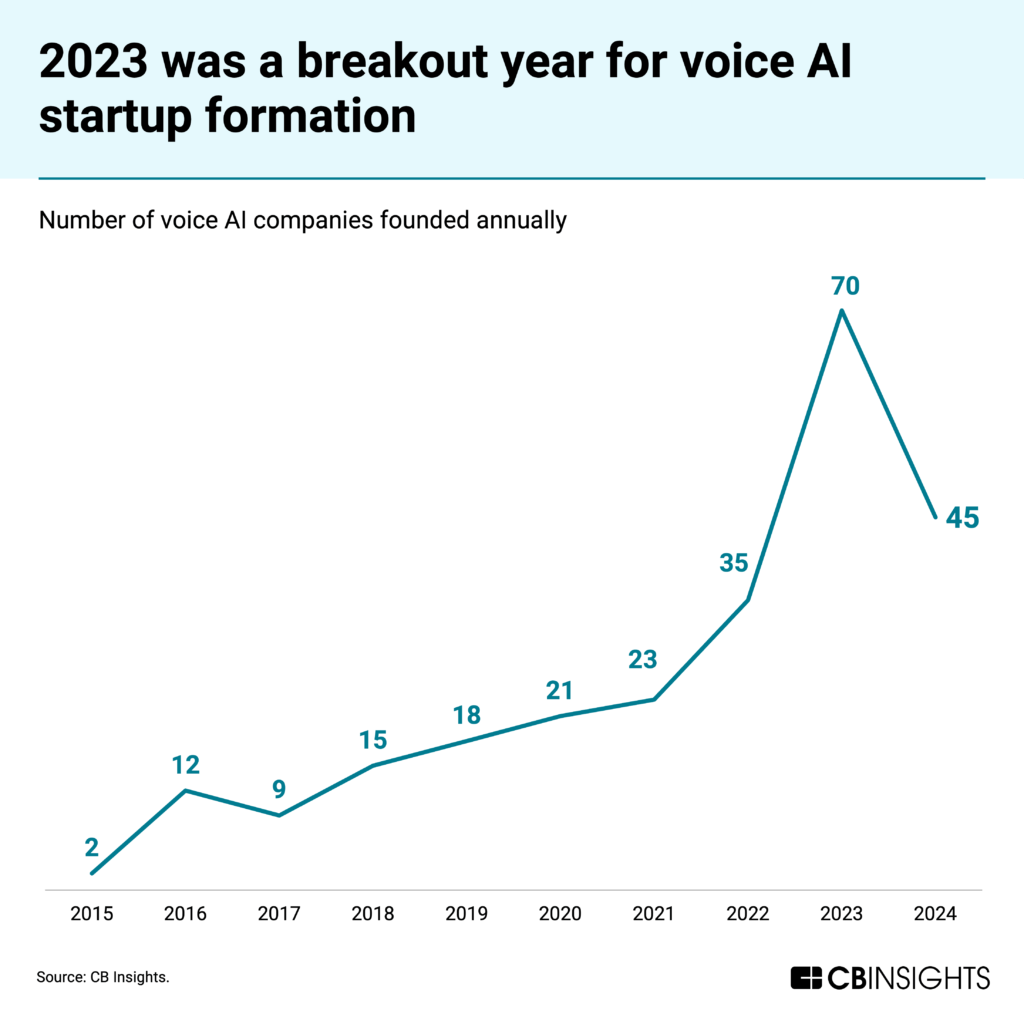

Most startups here were founded in just the last 3 years, as the chart below demonstrates. 2023 was a breakout year, seeing the number of companies founded grow 2x year-over-year, from 35 to 70.

This growth has been driven by advancements in voice AI models (including OpenAI’s Realtime API for speech-to-speech applications, launched in late 2024), which jumpstarted applications across use cases.

One additional signal that voice is hot: companies building voice AI applications are making up larger chunks of Y Combinator’s recent cohorts.

CBI customers can dive into the data on 270 companies developing voice AI capabilities — with a focus on voice generation — here.

Growing pains

Despite the excitement, challenges remain around reliability and trust.

Voice AI agents still struggle with complex conversations and unpredictable inputs, leading most enterprises to start out by deploying them in low-stakes scenarios.

In theory, fast-food ordering should be a natural fit — interactions are brief and highly predictable. The AI only needs to understand a limited vocabulary of items and modifiers.
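As a toy illustration of what a "limited vocabulary of items and modifiers" could look like, the sketch below matches an utterance against a small closed menu and flags anything it does not recognize. The menu, modifiers, filler words, and the `parse_order` helper are all invented for this example. Production systems work from audio with speech models, not keyword matching on text.

```python
# Toy illustration of a closed ordering vocabulary.
# The menu, modifier, and filler lists are invented for this sketch.

MENU_ITEMS = {"burger", "fries", "soda"}
MODIFIERS = {"large", "small", "no pickles", "extra cheese"}
FILLER_WORDS = {"a", "an", "and", "with", "please", "i", "want", "the"}


def parse_order(utterance: str) -> dict:
    """Match an utterance against the closed vocabulary.

    Returns recognized items and modifiers, plus leftover words that a
    real system would have to handle (ask again, hand off to staff).
    """
    text = utterance.lower().replace(",", " ")
    found_modifiers = []
    # Check longer modifiers first so multi-word ones are consumed whole.
    for modifier in sorted(MODIFIERS, key=len, reverse=True):
        if modifier in text:
            found_modifiers.append(modifier)
            text = text.replace(modifier, " ")
    words = text.split()
    items = [w for w in words if w in MENU_ITEMS]
    unknown = [w for w in words
               if w not in MENU_ITEMS and w not in FILLER_WORDS]
    return {
        "items": items,
        "modifiers": sorted(found_modifiers),
        "unknown": unknown,
    }


if __name__ == "__main__":
    print(parse_order("A large burger with no pickles and fries, please"))
    print(parse_order("A burger with anchovies"))
```

The second call shows where predictability ends: a single out-of-vocabulary request lands in `unknown`, which is the kind of input that still trips up voice agents.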

But the reputational risk of even the occasional mishap can be high. McDonald’s, for instance, started a voice AI pilot with IBM back in 2021, but pulled it in 2024 after videos of inaccurate orders went viral on TikTok.

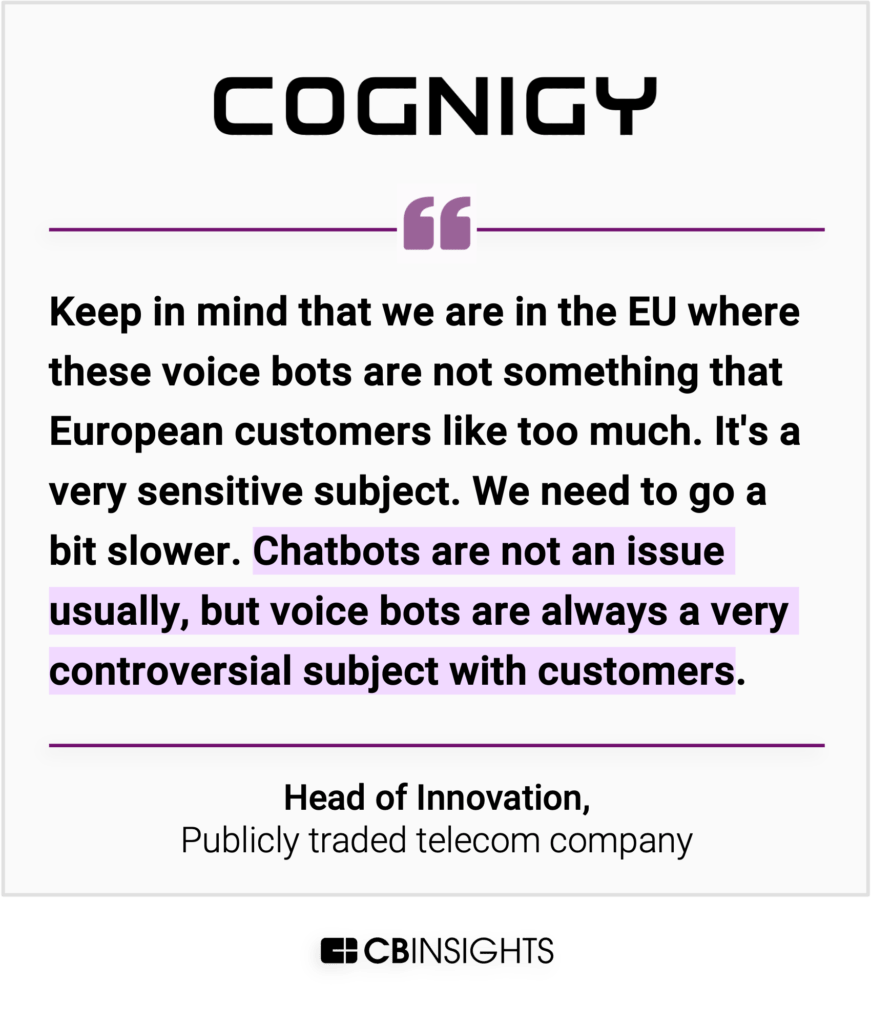

Customer acceptance of voice AI interaction also varies dramatically by region. As one Cognigy customer told us:

Meanwhile, a strategic divide is emerging in the voice AI market: cloud vs. edge processing.

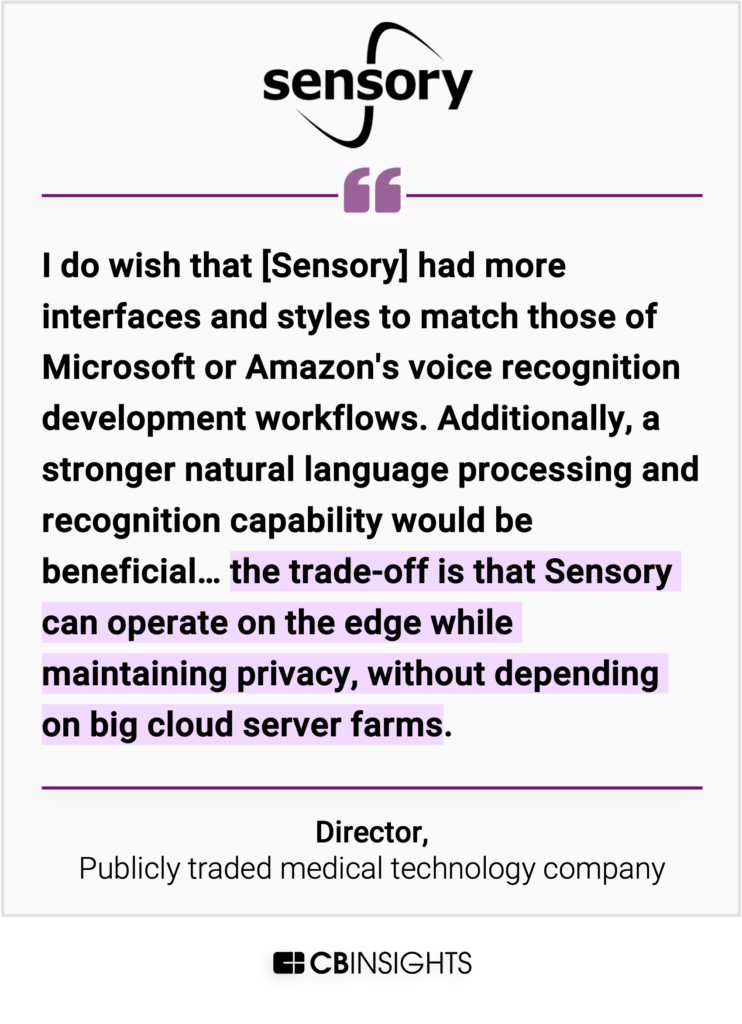

Cloud-based solutions from tech giants offer advanced capabilities but raise privacy concerns, while edge-based platforms process data locally with better privacy but more limited features.

A medtech executive highlighted this tradeoff, telling us they chose Sensory over Microsoft or Amazon despite losing out on more robust capabilities:

This divide will shape which players win in different sectors, with edge solutions likely dominating in sensitive industries like healthcare and financial services, while cloud platforms prevail in consumer and retail applications.

