#vjepa #meta #unsupervisedlearning

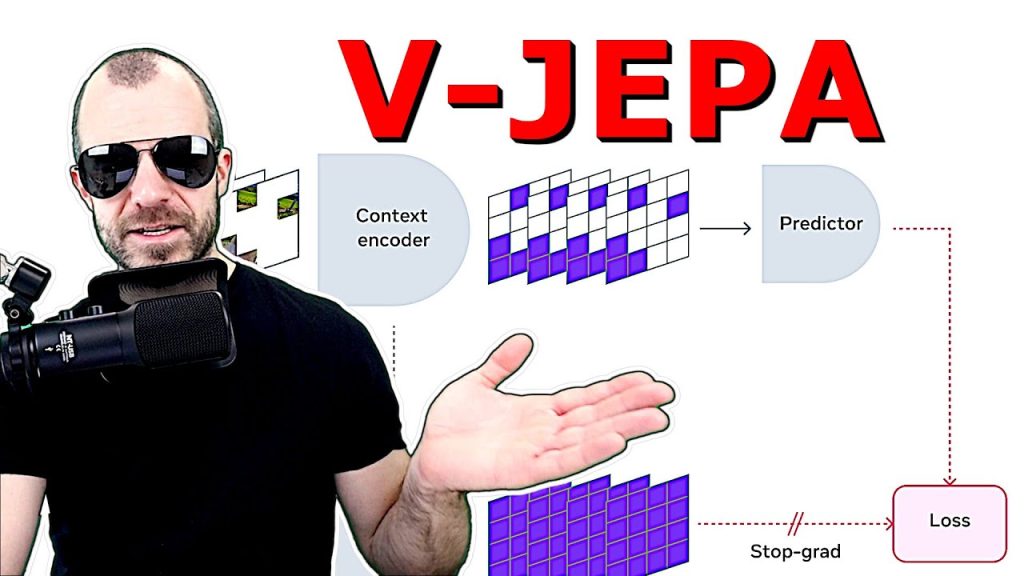

V-JEPA is a method for unsupervised representation learning from video that uses only the prediction of latent representations as its objective function.
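To make "latent representation prediction" concrete, here is a toy sketch in plain Python. It is not the paper's implementation. It assumes the commonly described JEPA setup, which this description does not spell out: an online encoder sees only the visible patches, a predictor guesses the features of the hidden patches, a separate target encoder (updated as a moving average of the online one) provides the regression targets, and the loss is an L1 distance in feature space. All function names and the per-dimension "encoder" are hypothetical stand-ins for real networks.

```python
def encode(weights, patches):
    """Toy encoder: scale each feature dimension of every patch by a weight."""
    return [[w * x for w, x in zip(weights, patch)] for patch in patches]


def predict_masked(context_features, num_masked, predictor_weights):
    """Toy predictor: average the visible features, then scale per dimension.

    A real predictor would be a network conditioned on mask positions.
    """
    dim = len(predictor_weights)
    pooled = [
        sum(f[d] for f in context_features) / len(context_features)
        for d in range(dim)
    ]
    return [[w * v for w, v in zip(predictor_weights, pooled)]
            for _ in range(num_masked)]


def l1_feature_loss(predicted, target):
    """Mean absolute error between predicted and target feature vectors."""
    total, count = 0.0, 0
    for p_vec, t_vec in zip(predicted, target):
        for p, t in zip(p_vec, t_vec):
            total += abs(p - t)
            count += 1
    return total / count


def feature_prediction_loss(patches, mask, online_w, target_w, predictor_w):
    """Loss computed purely in feature space: no pixels are reconstructed."""
    visible = [p for p, m in zip(patches, mask) if not m]
    hidden = [p for p, m in zip(patches, mask) if m]
    context_features = encode(online_w, visible)
    # Targets come from the target encoder and are treated as constants
    # (assumption: no gradient flows through them).
    target_features = encode(target_w, hidden)
    predicted = predict_masked(context_features, len(hidden), predictor_w)
    return l1_feature_loss(predicted, target_features)


def ema_update(target_w, online_w, momentum=0.99):
    """Assumed target update: exponential moving average of online weights."""
    return [momentum * t + (1.0 - momentum) * o
            for t, o in zip(target_w, online_w)]


# Four toy "patches" with two features each; the last two are masked.
patches = [[2.0, 4.0], [2.0, 4.0], [1.0, 3.0], [2.0, 4.0]]
mask = [False, False, True, True]
loss = feature_prediction_loss(patches, mask, [1.0, 1.0], [1.0, 1.0], [1.0, 1.0])
new_target_w = ema_update([1.0, 1.0], [0.0, 0.0], momentum=0.9)
```

The point of the sketch is only that the error is measured between feature vectors, never between pixels, which is what distinguishes this objective from reconstruction-based methods.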

OUTLINE:

0:00 – Intro

1:45 – Predictive Feature Principle

8:00 – Weights & Biases course on Structured LLM Outputs

9:45 – The original JEPA architecture

27:30 – V-JEPA Concept

33:15 – V-JEPA Architecture

44:30 – Experimental Results

46:30 – Qualitative Evaluation via Decoding

Abstract:

This paper explores feature prediction as a stand-alone objective for unsupervised learning from video and introduces V-JEPA, a collection of vision models trained solely using a feature prediction objective, without the use of pretrained image encoders, text, negative examples, reconstruction, or other sources of supervision. The models are trained on 2 million videos collected from public datasets and are evaluated on downstream image and video tasks. Our results show that learning by predicting video features leads to versatile visual representations that perform well on both motion and appearance-based tasks, without adaption of the model’s parameters; e.g., using a frozen backbone, our largest model, a ViT-H/16 trained only on videos, obtains 81.9% on Kinetics-400, 72.2% on Something-Something-v2, and 77.9% on ImageNet1K.

Authors: Adrien Bardes, Quentin Garrido, Xinlei Chen, Michael Rabbat, Yann LeCun, Mido Assran, Nicolas Ballas, Jean Ponce
