This post is co-authored with Karsten Weber and Rosary Wang from Lexbe.

Legal professionals are frequently tasked with sifting through vast volumes of documents to identify critical evidence for litigation. This process can be time-consuming, prone to human error, and expensive—especially when tight deadlines loom. Lexbe, a leader in legal document review software, confronted these challenges head-on by using Amazon Bedrock. By integrating the advanced AI and machine learning services offered by Amazon, Lexbe streamlined its document review process, boosting both efficiency and accuracy. In this blog post, we explore how Lexbe used Amazon Bedrock and other AWS services to overcome business challenges and deliver a scalable, high-performance solution for legal document analysis.

Business challenges and why they matter

Legal professionals routinely face the daunting task of managing and analyzing massive sets of case documents, which can range anywhere from 100,000 to over a million. Rapidly identifying relevant information within these large datasets is often critical to building a strong case or preventing a costly oversight. Lexbe addresses this challenge by using Amazon Bedrock in its custom application, Lexbe Pilot.

Lexbe Pilot is an AI-powered Q&A assistant integrated into the Lexbe eDiscovery platform. It enables legal teams to instantly query and extract insights from the full body of documents in an entire case using generative AI—eliminating the need for time-consuming manual research and analysis. Using Amazon Bedrock Knowledge Bases, users can query an entire dataset and retrieve grounded, contextually relevant results. This approach goes far beyond traditional keyword searches by helping legal teams identify critical or smoking gun documents that could otherwise remain hidden. As legal cases grow, keyword searches that previously returned a handful of documents might now produce hundreds or even thousands. Lexbe Pilot distills these large result sets into concise, meaningful answers—giving legal teams the insights they need to make informed decisions.

Failing to address these challenges can lead to missed evidence, possibly resulting in unfavorable outcomes. With Amazon Bedrock and its associated services, Lexbe provides a scalable, high-performance solution that empowers legal professionals to navigate the growing landscape of electronic discovery efficiently and accurately.

Solution overview: Amazon Bedrock as the foundation

Lexbe transformed its document review process by integrating Amazon Bedrock, a fully managed service for building generative AI applications with foundation models. With deep integration into the AWS ecosystem, Amazon Bedrock delivers the performance and scalability necessary to meet the rigorous demands of Lexbe’s clients in the legal industry.

Key AWS services used:

Amazon Bedrock. A fully managed service offering high-performing foundation models (FMs) for large-scale language tasks. By using these models, Lexbe can rapidly analyze vast amounts of legal documents with exceptional accuracy.

Amazon Bedrock Knowledge Bases. Provides fully managed support for an end-to-end Retrieval-Augmented Generation (RAG) workflow, enabling Lexbe to ingest documents, perform semantic searches, and retrieve contextually relevant information.

Amazon OpenSearch Service. Indexes all the document text and corresponding metadata in both vector and text modes, so Lexbe can quickly locate specific documents or key information across large datasets by vector similarity or by keyword.

AWS Fargate. Orchestrates the analysis and processing of large-scale workloads in a serverless container environment, allowing Lexbe to scale horizontally without the need to manage underlying server infrastructure.
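To make the vector and keyword lookups concrete, the following is a minimal sketch of the two kinds of OpenSearch query bodies. It is an illustration under stated assumptions, not Lexbe's implementation: the field names `text` and `embedding` are hypothetical, and the post does not describe the actual index mapping. The query shapes follow the OpenSearch `match` and `knn` query syntax.

```python
from typing import Any, Dict, List


def keyword_query(terms: str, size: int = 10) -> Dict[str, Any]:
    """Full-text lookup against a text field ("text" is a hypothetical field name)."""
    return {"size": size, "query": {"match": {"text": terms}}}


def vector_query(embedding: List[float], k: int = 10) -> Dict[str, Any]:
    """k-nearest-neighbor lookup against a vector field ("embedding" is hypothetical)."""
    if not embedding:
        raise ValueError("embedding must not be empty")
    return {
        "size": k,
        "query": {"knn": {"embedding": {"vector": embedding, "k": k}}},
    }
```

Either dictionary can be passed as the body of a search request, for example through the `search` method of the opensearch-py client.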

Amazon Bedrock Knowledge Bases architecture and workflow

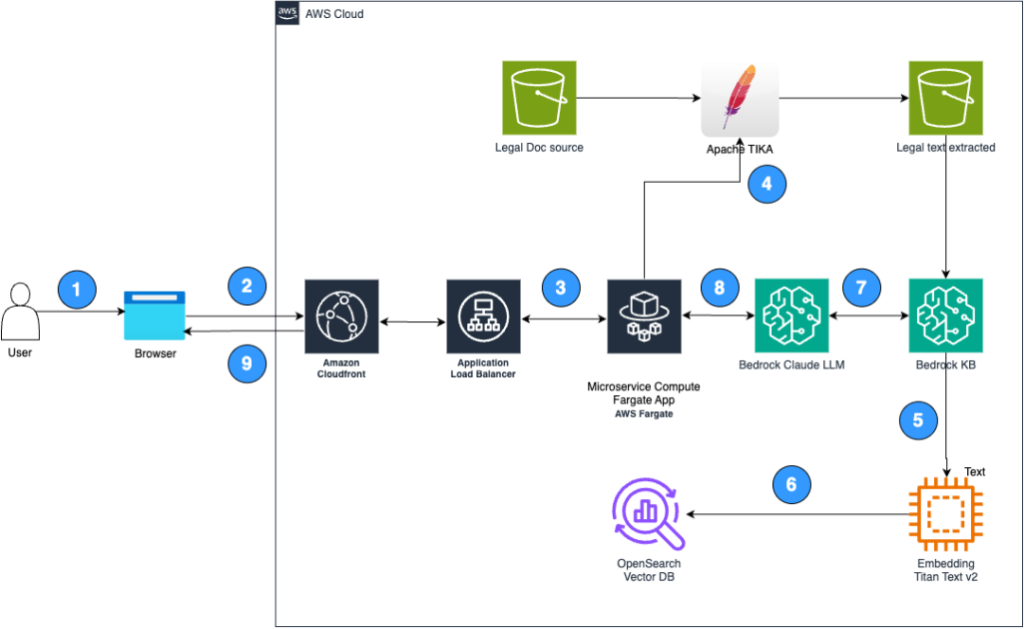

The integration of Amazon Bedrock within Lexbe’s platform is shown in the following architecture diagram. The architecture is designed to handle both large-scale ingestion and retrieval of legal documents.

User access: A user accesses the frontend application through a web browser.

Request routing: The request is routed through Amazon CloudFront, which connects to the backend through an Application Load Balancer.

Backend processing: Backend services running on Fargate handle the request and interact with the system components.

Document handling: Legal documents are stored in an Amazon Simple Storage Service (Amazon S3) bucket, and Apache Tika extracts text from these documents. The extracted text is stored as individual text files in a separate S3 bucket. This bucket is used as the source repository for Amazon Bedrock.

Embedding creation: The extracted text is processed using Amazon Titan Text Embeddings V2 to generate embeddings. Lexbe experimented with multiple embedding models, including Amazon Titan and Cohere, and tested configurations with varying token sizes (for example, 512 compared to 1,024 tokens).

Embedding storage: The generated embeddings are stored in a vector database for fast retrieval.

Query execution: Amazon Bedrock Knowledge Bases retrieves relevant data from the vector database for a given query.

LLM integration: The Anthropic Claude 3.5 Sonnet large language model (LLM), accessed through Amazon Bedrock, processes the retrieved data to generate a coherent and accurate response.

Response delivery: The final response is returned through CloudFront to the user in the frontend application.
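The ingestion and query steps above can be sketched with the AWS SDK for Python (Boto3). This is a minimal sketch under stated assumptions, not Lexbe's code: the helper names, the 20% chunk overlap, and the default of 20 retrieved results are hypothetical, and the knowledge base ID and model ARN must be supplied by the caller. The chunking settings match the shape of the `vectorIngestionConfiguration` argument to `create_data_source`, and the query uses the `retrieve_and_generate` operation of the `bedrock-agent-runtime` client.

```python
from typing import Any, Dict, List, Tuple


def build_chunking_config(max_tokens: int, overlap_percentage: int = 20) -> Dict[str, Any]:
    """Fixed-size chunking settings, e.g. 512 or 1024 tokens per chunk.

    Intended as the vectorIngestionConfiguration of a data source.
    The overlap default is an assumption for illustration only.
    """
    if max_tokens <= 0:
        raise ValueError("max_tokens must be positive")
    return {
        "chunkingConfiguration": {
            "chunkingStrategy": "FIXED_SIZE",
            "fixedSizeChunkingConfiguration": {
                "maxTokens": max_tokens,
                "overlapPercentage": overlap_percentage,
            },
        }
    }


def build_query_request(
    question: str, knowledge_base_id: str, model_arn: str, number_of_results: int = 20
) -> Dict[str, Any]:
    """Keyword arguments for a retrieve-and-generate call against a knowledge base."""
    return {
        "input": {"text": question},
        "retrieveAndGenerateConfiguration": {
            "type": "KNOWLEDGE_BASE",
            "knowledgeBaseConfiguration": {
                "knowledgeBaseId": knowledge_base_id,
                "modelArn": model_arn,
                "retrievalConfiguration": {
                    "vectorSearchConfiguration": {"numberOfResults": number_of_results}
                },
            },
        },
    }


def ask(question: str, knowledge_base_id: str, model_arn: str,
        region: str = "us-east-1") -> Tuple[str, List[str]]:
    """Return the generated answer and the S3 URIs of the cited source documents."""
    import boto3  # imported lazily so the builders above work without AWS access

    client = boto3.client("bedrock-agent-runtime", region_name=region)
    response = client.retrieve_and_generate(
        **build_query_request(question, knowledge_base_id, model_arn)
    )
    sources = [
        ref["location"]["s3Location"]["uri"]
        for citation in response.get("citations", [])
        for ref in citation.get("retrievedReferences", [])
        if "s3Location" in ref.get("location", {})
    ]
    return response["output"]["text"], sources
```

Separating the request builders from the network call keeps the configuration easy to inspect and compare, which is useful when experimenting with different chunk sizes or result counts.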

Amazon and Lexbe collaboration

Over an eight-month period, Lexbe worked hand-in-hand with the Amazon Bedrock Knowledge Bases team to enhance the performance and accuracy of its Pilot feature. This collaboration included weekly strategy meetings between senior teams from both organizations, enabling rapid iterations. From the outset, Lexbe established clear acceptance criteria focused on achieving specific recall rates. These metrics served as the benchmark for when the feature was ready for production. As illustrated in the following figure, the system’s performance passed through five significant milestones, each marking a leap toward production.

We focused on Recall Rate because identifying the right documents is critical to getting the correct response. In some RAG use cases, the user has a specific question that can often be answered by a few documents. Lexbe Pilot, by contrast, generates findings-of-fact reports that require a large number of source documents, so a high Recall Rate helps ensure that Amazon Bedrock Knowledge Bases does not leave out important information.

First iteration: January 2024. The initial system had only a 5% Recall Rate, showing that much work was needed to get to production.

Second iteration: April 2024. New features were added to Amazon Bedrock Knowledge Bases, leading to a noticeable boost in accuracy and a 36% Recall Rate.

Third iteration: June 2024. Parameter adjustments, particularly around token size, led to another jump in performance and brought the Recall Rate to 60%.

Fourth iteration: August 2024. A Recall Rate of 66% was achieved using the Amazon Titan Text Embeddings V2 model.

Fifth iteration: December 2024. The introduction of reranker technology proved invaluable, enabling a Recall Rate of up to 90%.
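For readers who want to track a similar metric, the following is a minimal sketch of one common way to compute recall over the top-k retrieved documents. The post does not specify how Lexbe measured Recall Rate, so the function names, the use of a top-k cutoff, and the document IDs in the usage example are assumptions for illustration.

```python
from typing import Iterable, List, Sequence, Set, Tuple


def recall_at_k(retrieved: Sequence[str], relevant: Iterable[str], k: int) -> float:
    """Fraction of the relevant documents that appear in the top-k retrieved results."""
    relevant_set: Set[str] = set(relevant)
    if not relevant_set:
        raise ValueError("relevant must contain at least one document ID")
    if k <= 0:
        raise ValueError("k must be positive")
    top_k = set(retrieved[:k])
    return len(top_k & relevant_set) / len(relevant_set)


def mean_recall_at_k(cases: List[Tuple[Sequence[str], Iterable[str]]], k: int) -> float:
    """Average recall@k over a list of (retrieved, relevant) test queries."""
    if not cases:
        raise ValueError("cases must not be empty")
    return sum(recall_at_k(retrieved, relevant, k) for retrieved, relevant in cases) / len(cases)
```

With hypothetical document IDs, `recall_at_k(["a", "b", "c", "d"], {"a", "d", "e"}, k=4)` finds two of the three relevant documents, so it evaluates to two thirds. Averaging this value over a fixed set of test queries gives a single number that can be compared from one iteration to the next.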

The final outcome is impressive

Broad, human-style reporting. In an industrial-accident matter, Pilot was asked to conduct a full findings-of-fact analysis. It produced a polished, five-page report complete with clear section headings and hyperlinks back to every source document regardless of whether those documents were in English, Spanish, or any other language.

Deep, automated inference. In a case of tens of thousands of documents, we asked, “Who is Bob’s son?” There was no explicit reference to his children anywhere. Yet Pilot zeroed in on an email that began “Dear Fam,” closed with “Love, Mom/Linda,” and included the children’s first and last names in the metadata. By connecting those dots, it accurately identified Bob’s son and cited the exact email where the inference was made.

Traditional techniques in eDiscovery are unable to do either of the above. With Pilot, legal teams can:

Generate actionable reports that attorneys can swiftly iterate for deeper analysis.

Streamline eDiscovery by surfacing critical connections that go far beyond simple text matches.

Unlock strategic insights in moments, even from multilingual data.

Whether you need a comprehensive, human-readable report or laser-focused intelligence on the relationships lurking in your data, Lexbe Pilot, powered by Amazon Bedrock Knowledge Bases, delivers the precise information you need—fast.

Benefits of integrating Amazon Bedrock and AWS services

By integrating Amazon Bedrock with other AWS services, Lexbe gained several strategic advantages in their document review process:

Scalability. Using Amazon Elastic Container Service (Amazon ECS) and AWS Fargate, Lexbe can dynamically scale its processing infrastructure.

Cost efficiency. Running processing workloads on Amazon ECS with Linux Spot capacity provides a significant cost advantage.

Security. The robust security framework of AWS, including encryption and role-based access controls, safeguards sensitive legal documents. This is critical for Lexbe’s clients, who must adhere to strict confidentiality requirements.

Conclusion: A scalable, accurate, and cost-effective solution

Through its integration of Amazon Bedrock, Lexbe has transformed its document review platform into a highly efficient, scalable, and accurate solution. By combining Amazon Bedrock, Amazon OpenSearch Service, and AWS Fargate, Lexbe achieved marked improvements in both retrieval accuracy and processing speed while keeping costs under control. Lexbe’s success illustrates the power of AWS AI/ML services to tackle complex, real-world challenges. By harnessing the flexible, scalable, and cost-effective offerings of AWS, Lexbe is well-equipped to meet the evolving needs of the legal industry, both today and in the future.

If your organization is facing complex challenges that could benefit from AI/ML-powered solutions, take the next step with AWS. Start by working closely with your AWS Solutions Architect to design a tailored strategy that aligns with your unique needs. Engage with the AWS product team to explore cutting-edge services and make sure that your solution is scalable, secure, and future-ready. Together, we can help you innovate faster, reduce costs, and deliver transformative outcomes.

About the authors

Wei Chen is a Senior Solutions Architect at Amazon Web Services, based in Austin, Texas. With over 20 years of experience, he specializes in helping customers design and implement solutions for complex technical challenges. In his role at AWS, Wei partners with organizations to modernize their applications and fully leverage cloud capabilities to meet strategic business goals. His area of expertise is AI/ML and AWS Security services.

Gopikrishnan Anilkumar is a Principal Technical Product Manager at Amazon. He has over 10 years of product management experience across a variety of domains and is passionate about AI/ML.

Sandeep Singh is a Senior Generative AI Data Scientist at Amazon Web Services, helping businesses innovate with generative AI. He specializes in generative AI, machine learning, and system design. He has successfully delivered state-of-the-art AI/ML-powered solutions to solve complex business problems for diverse industries, optimizing efficiency and scalability.

Karsten Weber has been the CTO and co-founder of Lexbe, an eDiscovery provider, since January 2006. Based in Austin, Texas, Lexbe offers Lexbe Online™, a cloud-based application for eDiscovery, litigation, and legal document processing, production, review, and case management. Under Karsten’s leadership, Lexbe has developed a robust platform and comprehensive eDiscovery services that assist law firms and organizations with efficiently managing large ESI data sets for legal review and discovery production. Karsten’s expertise in technology and innovation has been pivotal in driving Lexbe’s success over the past 19 years.

Rosary Wang is a Sr. Software Engineer at Lexbe, an eDiscovery software and services provider based in Austin, Texas.