Today, Tencent Hunyuan officially announced the release of Hunyuan World-Voyager (hereafter Hunyuan Voyager), which it describes as the industry's first ultra-long-range roaming world model with native 3D reconstruction support.

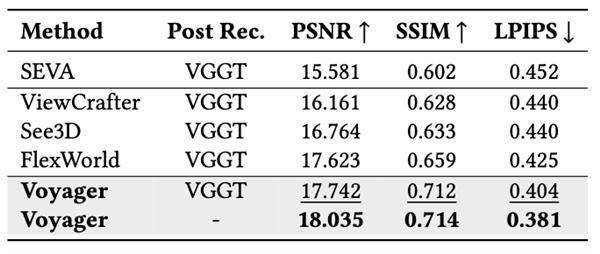

The model ranks first in overall capability on WorldScore, the benchmark released by Fei-Fei Li's team at Stanford University, surpassing existing open-source methods and performing strongly in both video generation and video 3D reconstruction.

According to reports, Hunyuan Voyager targets applications of AI in spatial intelligence, providing high-fidelity 3D scene roaming for virtual reality, physical simulation, game development, and other fields.

The model overcomes the limits of traditional video generation in spatial consistency and exploration range: it can generate long-range, world-consistent roaming scenes and supports exporting the generated video directly to 3D formats.

Hunyuan Voyager's 3D-input, 3D-output design is reportedly highly compatible with the previously open-sourced Hunyuan World Model 1.0: it can extend the roaming range of the 1.0 model, improve generation quality for complex scenes, and allow stylization control and editing of the generated scenes.

In addition, this model supports various 3D understanding and generation applications such as video scene reconstruction, 3D object texture generation, customized video style generation, and video depth estimation.

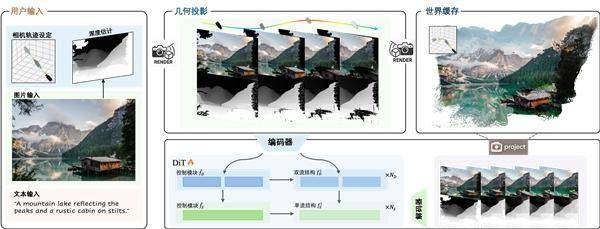

According to the official statement, Hunyuan Voyager is the first to support native 3D memory and scene reconstruction, which it achieves by combining spatial and feature-based methods, avoiding the latency and accuracy loss introduced by traditional post-processing.

At the same time, 3D conditioning on the input side keeps the scene viewpoint accurate, while the output side directly produces 3D point clouds, making the model suitable for a range of application scenarios.

Additional depth information can also support functionalities such as video scene reconstruction, 3D object texture generation, stylization editing, and depth estimation.
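To make the link between depth information and 3D point clouds concrete, here is a minimal sketch of the standard pinhole back-projection that turns a depth map into a point cloud. It is a generic illustration, not Hunyuan Voyager's actual pipeline; the function name `depth_to_point_cloud` and the intrinsics `fx`, `fy`, `cx`, `cy` are assumptions introduced only for this example.

```python
import numpy as np


def depth_to_point_cloud(depth, fx, fy, cx, cy):
    """Back-project an (H, W) depth map into an (N, 3) point cloud.

    Assumes a pinhole camera with focal lengths (fx, fy) and principal
    point (cx, cy), all in pixels. Points are in camera coordinates.
    This is a hypothetical helper, not part of any Hunyuan API.
    """
    h, w = depth.shape
    # Pixel coordinate grids: u runs along columns, v along rows.
    u, v = np.meshgrid(np.arange(w), np.arange(h))
    z = depth
    x = (u - cx) * z / fx
    y = (v - cy) * z / fy
    points = np.stack([x, y, z], axis=-1).reshape(-1, 3)
    # Keep only pixels that carry a valid (positive) depth value.
    return points[points[:, 2] > 0]


# Usage: a tiny 2x3 depth map with one missing (zero) depth value.
depth = np.full((2, 3), 2.0)
depth[0, 0] = 0.0
cloud = depth_to_point_cloud(depth, fx=1.0, fy=1.0, cx=1.0, cy=0.5)
```

Applying this per frame, and transforming each frame's points by its camera pose, is one common way that video with depth can be fused into a single scene reconstruction.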