DeepSeek released an updated version of its popular R1 reasoning model (version 0528) with, according to the company, improved benchmark performance, reduced hallucinations, and native support for function calling and JSON output. Early tests from Artificial Analysis report a nice bump in performance, putting it behind OpenAI’s o3 and o4-mini-high in their Intelligence Index benchmark. The model is available in the official DeepSeek API, and open weights have been distributed on Hugging Face. I downloaded several quantized versions of the full model to my M3 Ultra Mac Studio, and here are some notes on how it went.

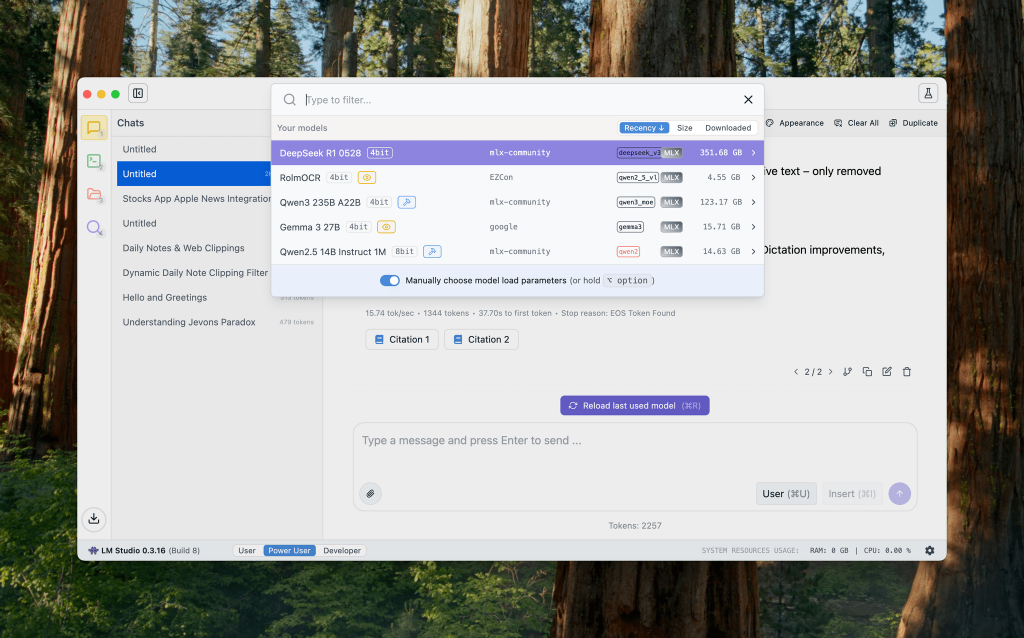

Last night, the folks at MLX Community uploaded a 4-bit quant of the full 685B parameter model, a ~350 GB download that I immediately tested in LM Studio, which has a built-in MLX engine for Apple Silicon. LM Studio lets you load the model with a context window of up to 163,840 tokens; I tried that, but even the M3 Ultra with 512 GB of RAM couldn’t handle it. A much smaller 32,000-token context window let the model load with 363 GB of RAM in use, but it was ejected from memory as soon as I tried to chat with it. Dropping to a more modest 8,192-token context window did the trick. I then asked R1-0528 to reformat a long text file attached to the chat, with these results:

Prompt: “This is the output of an advanced OCR process of a long PDF. Can you help me clean up cruft? Only remove repetitions, page markers, and other byproducts of OCR. Do NOT change the main body text and sections. Return the full text without truncating it. Explain what you did.”

Results: 15.74 tok/sec • 1344 tokens • 37.70s to first token
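Those three numbers can be combined into a rough end-to-end time. This is a minimal sketch that assumes LM Studio’s tok/sec figure covers only the generation phase after the first token, which I haven’t confirmed; the helper name is hypothetical.

```python
def generation_seconds(tokens: int, tokens_per_sec: float) -> float:
    """Time spent generating tokens at a constant average rate."""
    return tokens / tokens_per_sec


# Figures from the LM Studio run above.
TOKENS = 1344
TOKENS_PER_SEC = 15.74
TIME_TO_FIRST_TOKEN = 37.70  # seconds before any output appeared

gen = generation_seconds(TOKENS, TOKENS_PER_SEC)
total = TIME_TO_FIRST_TOKEN + gen
print(f"generation ≈ {gen:.1f}s, end-to-end ≈ {total:.1f}s")
# prints: generation ≈ 85.4s, end-to-end ≈ 123.1s
```

In other words, under that assumption, roughly a third of the wall-clock time went to waiting for the first token.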

And here’s how the updated R1 thought behind the scenes:

Alright, let me tackle this user request step by step. The user shared OCR output from a PDF and wants it cleaned up without altering the main content. They specifically mentioned removing repetitions, page markers, and OCR artifacts while preserving all meaningful text sections.

Hmm, first I notice the citations contain overlapping content about iOS features like Dictation improvements and security enhancements. The key is to deduplicate without losing any unique details—for example, the “And More” section appears in both citations but with different endings. I’ll need to merge those carefully.

The user’s explicit request is clear, but their tone suggests urgency about getting a clean document—probably for professional use. They emphasized keeping all substantive text, so I should avoid over-editing. The repetition of features like “Improved speech recognition” across citations is a clear target for consolidation.

Looking closer, page markers (like “19”) and fragments (“## Overview | Key Features…”) are classic OCR artifacts. Those can go safely since they don’t add value. The headings seem consistent, so I’ll preserve their hierarchy while removing redundancies—like the duplicated “### Dictation” section.

Wait, should I explain why certain deletions happened? Yes—the user asked for explanations, so noting removed page numbers/repeats will build trust. Also, they didn’t mention formatting fixes, so I won’t touch line breaks or spacing unless it’s clearly an artifact (like random numbers mid-text).

Biggest challenge: ensuring the merged “And More” section captures both citations’ unique points (cursor animation + language indicator) without duplication. I’ll cross-check each sentence… done. Finally, a quick verification—no technical details were lost, all sections flow logically, and zero main text edits. Perfect!

The results were pretty good: R1 reformatted the document as requested, or at least part of it. Because of the small context window, R1 couldn’t fit the entire document in its response, so the output was truncated.
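The truncation makes sense once you add up what a cleanup task has to fit into a single window: the prompt, the attached document, the model’s reasoning, and then the entire document again as output. Here is a minimal sketch of that budget. The token counts are hypothetical placeholders, not measurements from my test, and the sketch ignores any overflow policy the app may apply.

```python
def context_needed(prompt_tokens: int, doc_tokens: int, reasoning_tokens: int) -> int:
    """Tokens a 'return the full cleaned-up text' task needs in one window.

    The document is counted twice: once as input, once as the rewritten output.
    """
    return prompt_tokens + doc_tokens + reasoning_tokens + doc_tokens


CONTEXT_WINDOW = 8_192  # the setting that finally worked in LM Studio

# Hypothetical sizes for a long OCR'd document (not measured values).
needed = context_needed(prompt_tokens=100, doc_tokens=6_000, reasoning_tokens=1_000)
print(needed, "tokens needed;", "fits" if needed <= CONTEXT_WINDOW else "output gets truncated")
```

Because the document counts twice, a task like this needs a window of well over twice the document’s length.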

A few hours ago, LM Studio also made available a distilled version of R1-0528, DeepSeek-R1-0528-Qwen3-8B. From the model page:

we distilled the chain-of-thought from DeepSeek-R1-0528 to post-train Qwen3 8B Base, obtaining DeepSeek-R1-0528-Qwen3-8B. This model achieves state-of-the-art (SOTA) performance among open-source models on the AIME 2024, surpassing Qwen3 8B by +10.0% and matching the performance of Qwen3-235B-thinking. We believe that the chain-of-thought from DeepSeek-R1-0528 will hold significant importance for both academic research on reasoning models and industrial development focused on small-scale models.

I also attempted to load the full-blown version of R1-0528 in Ollama, my favorite CLI for locally installed models, which is also compatible with Simon Willison’s LLM CLI. When I checked earlier this morning, only “sharded” (i.e., multi-part) GGUF versions of the full 685B parameter model were available, specifically the ones uploaded by Unsloth. That led me down a fascinating rabbit hole: learning how to download multiple GGUF open-weight files and merge them into a single file that Ollama can use on macOS. I’ll document the process here for posterity (including for my future self).
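All of the download sizes in this story follow from that 685B parameter count. As a back-of-the-envelope sketch (my own approximation, not how any of these tools compute sizes), raw weight storage is roughly parameters × bits per weight ÷ 8 bytes:

```python
def weight_footprint_gb(params: float, bits_per_weight: float) -> float:
    """Approximate size of the raw weights in decimal gigabytes (1 GB = 1e9 bytes)."""
    return params * bits_per_weight / 8 / 1e9


PARAMS = 685e9  # parameter count of the full R1-0528 model

for label, bits in [("4-bit", 4), ("8-bit", 8)]:
    print(f"{label}: ~{weight_footprint_gb(PARAMS, bits):.1f} GB")
# prints:
# 4-bit: ~342.5 GB
# 8-bit: ~685.0 GB
```

Actual files come out somewhat larger, because quantized formats also store per-block scale factors and may keep some tensors at higher precision, so treat these figures as a floor.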

First, before merging the GGUF files, I had to install llama.cpp’s command-line tools, which I did with Homebrew:

```shell
brew install llama.cpp
```

Depending on your shell configuration, Homebrew’s bin folder may not be in your PATH, so I made a note of where the dedicated llama-gguf-split utility was located on my Mac:

```
/opt/homebrew/bin/llama-gguf-split
```
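If you’d rather not hard-code that path in scripts, a small helper can look the tool up on PATH and fall back to the Homebrew location noted above. This is a minimal sketch; `find_gguf_split` is a hypothetical helper name.

```python
import shutil
from pathlib import Path
from typing import Optional

HOMEBREW_FALLBACK = "/opt/homebrew/bin/llama-gguf-split"


def find_gguf_split(fallback: str = HOMEBREW_FALLBACK) -> Optional[str]:
    """Return a usable path to llama-gguf-split, or None if it can't be found."""
    # shutil.which searches the directories listed in the PATH environment variable.
    on_path = shutil.which("llama-gguf-split")
    if on_path:
        return on_path
    # Otherwise try the Homebrew location noted above.
    if Path(fallback).is_file():
        return fallback
    return None


print(find_gguf_split() or "llama-gguf-split not found; is llama.cpp installed?")
```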

Next, I downloaded the 15 files that make up the 8-bit version of R1-0528 I wanted to test, a 700 GB download that took a few hours to complete. Once all the files were saved in my Downloads folder, I ran:

```shell
/opt/homebrew/bin/llama-gguf-split --merge DeepSeek-R1-0528-Q8_0-00001-of-00015.gguf outfile.gguf
```
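The merge command is pointed only at the first shard; as I understand it, llama-gguf-split locates the remaining parts from there. That makes it worth confirming every part finished downloading before kicking off a long merge. Here is a minimal sketch, assuming the `-0000N-of-000NN.gguf` naming pattern visible in the command above; `missing_shards` is a hypothetical helper.

```python
from pathlib import Path


def missing_shards(folder: str, prefix: str, total: int) -> list[str]:
    """List the expected shard filenames that are not present in `folder`."""
    expected = [f"{prefix}-{i:05d}-of-{total:05d}.gguf" for i in range(1, total + 1)]
    return [name for name in expected if not (Path(folder) / name).is_file()]


missing = missing_shards(str(Path.home() / "Downloads"), "DeepSeek-R1-0528-Q8_0", 15)
print("all shards present" if not missing else f"missing: {missing}")
```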

After waiting several minutes, I ended up with a single 713 GB file called ‘outfile.gguf’, which I renamed to ‘DeepSeek-R1-0528-Q8_0.gguf’ and placed in my Home folder. That’s the unified model file we need to use in Ollama.
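Before pointing Ollama at a 713 GB file, a quick sanity check is to read its GGUF header. Per the GGUF format spec, a file begins with the 4-byte magic `GGUF`, a little-endian uint32 version, and (from version 2 on) uint64 counts of tensors and metadata key/value pairs. This is a minimal sketch: `read_gguf_header` is a hypothetical helper, the file path is an assumption to adjust, and it checks only the header, not the tensor data.

```python
import struct
from pathlib import Path


def read_gguf_header(path: str) -> tuple[int, int, int]:
    """Return (version, tensor_count, metadata_kv_count) from a GGUF file header."""
    with open(path, "rb") as f:
        magic = f.read(4)
        if magic != b"GGUF":
            raise ValueError(f"{path} is not a GGUF file (magic={magic!r})")
        (version,) = struct.unpack("<I", f.read(4))
        if version < 2:
            # GGUF v1 used 32-bit counts; this sketch only handles v2 and later.
            raise ValueError(f"unsupported GGUF version {version}")
        tensor_count, kv_count = struct.unpack("<QQ", f.read(16))
    return version, tensor_count, kv_count


merged = Path.home() / "DeepSeek-R1-0528-Q8_0.gguf"  # adjust to wherever the merged file lives
if merged.exists():
    print(read_gguf_header(str(merged)))
```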

Before using it in Ollama, however, I had to create a Modelfile for Ollama to install the model: essentially, a template that tells Ollama what to load and how to run it. I wasn’t familiar with the structure of Modelfiles, so to get started, I exported the Modelfile of a model I already had installed in Ollama:

```shell
ollama show --modelfile deepseek-r1:1.5b >> Modelfile
```

I then opened the Modelfile in TextEdit and updated it with the necessary parameters and the local path of my GGUF file:

```
# Modelfile generated by "ollama show"
# To build a new Modelfile based on this, replace FROM with:
# FROM DeepSeek-R1-0528-Q8_0

FROM /Users/viticci/GGUFs/DeepSeek-R1-0528-Q8_0.gguf

TEMPLATE """{{- if .System }}{{ .System }}{{ end }}
{{- range $i, $_ := .Messages }}
{{- $last := eq (len (slice $.Messages $i)) 1}}
{{- if eq .Role "user" }}<|User|>{{ .Content }}
{{- else if eq .Role "assistant" }}<|Assistant|>
{{- if and $.IsThinkSet (and $last .Thinking) -}}
<think>
{{ .Thinking }}
</think>
{{- end }}{{ .Content }}{{- if not $last }}<|end▁of▁sentence|>{{- end }}
{{- end }}
{{- if and $last (ne .Role "assistant") }}<|Assistant|>
{{- if and $.IsThinkSet (not $.Think) -}}
<think>

</think>

{{ end }}
{{- end -}}
{{- end }}"""

PARAMETER stop <|begin▁of▁sentence|>
PARAMETER stop <|end▁of▁sentence|>
PARAMETER stop <|User|>
PARAMETER stop <|Assistant|>

SYSTEM You are a helpful assistant.

PARAMETER temperature 0.6
```
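Smart quotes or other stray characters in a hand-edited Modelfile can trip up Ollama, so generating the file from a script is one way to keep the syntax exact. Below is a minimal sketch that emits the same kinds of directives used above (FROM, TEMPLATE, PARAMETER, SYSTEM). `build_modelfile` is a hypothetical helper, and the chat template is left as a placeholder for you to paste in.

```python
def build_modelfile(gguf_path: str, template: str, stops: list[str],
                    system: str, temperature: float) -> str:
    """Assemble Modelfile text using plain ASCII triple quotes around the template."""
    lines = [f"FROM {gguf_path}", f'TEMPLATE """{template}"""']
    lines += [f"PARAMETER stop {token}" for token in stops]
    lines.append(f"SYSTEM {system}")
    lines.append(f"PARAMETER temperature {temperature}")
    return "\n".join(lines) + "\n"


text = build_modelfile(
    gguf_path="/Users/viticci/GGUFs/DeepSeek-R1-0528-Q8_0.gguf",
    template="{{ .Prompt }}",  # placeholder: paste the full chat template here
    stops=["<|begin▁of▁sentence|>", "<|end▁of▁sentence|>", "<|User|>", "<|Assistant|>"],
    system="You are a helpful assistant.",
    temperature=0.6,
)
# Save this output as "Modelfile" (UTF-8) before running `ollama create`.
print(text, end="")
```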

I saved the file, then ran:

```shell
ollama create DeepSeek-R1-0528-Q8_0 -f /Users/viticci/Desktop/Modelfile
```

Then I waited several minutes for Ollama to gather all the components and install the local model. At the end, Ollama showed a success message…

…but that’s when my lucky streak ended: I finally found a local model that couldn’t run on my maxed-out M3 Ultra Mac Studio! As it turns out, the 8-bit, 700 GB version of DeepSeek R1 is too big even for this machine. Each time, Ollama tried to load the model into memory, went past 400 GB of RAM used, and terminated the session.

Fair enough; I figured the 8-bit version of R1 would be too large for this machine to handle. I went back to the beginning and downloaded the multi-part, 4-bit version of DeepSeek R1, which is considerably smaller at “only” 400 GB of storage instead of 700 GB. After repeating all of the above steps (with different filenames for this version of the model), I installed the smaller model and ran:

```shell
ollama run DeepSeek-R1-0528-Q4_K_M:latest --verbose
```

Then I waited for the model to load, and it worked.
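A back-of-the-envelope comparison shows why one quant failed and the other loaded. This sketch uses the file sizes mentioned above as a lower bound on memory and treats GB and GiB as interchangeable. It also ignores the KV cache, the OS, and any macOS limits on how much memory the GPU may use, all of which push real requirements higher.

```python
RAM_GB = 512  # unified memory in this Mac Studio


def headroom_gb(model_gb: float, ram_gb: float = RAM_GB) -> float:
    """Memory left after loading the weights; negative means they can't all fit."""
    return ram_gb - model_gb


# Approximate on-disk sizes from the text above.
for name, size_gb in {"Q8_0 (merged)": 713, "Q4_K_M": 400}.items():
    left = headroom_gb(size_gb)
    verdict = "cannot fit" if left < 0 else f"~{left:.0f} GB left for context and the OS"
    print(f"{name}: {verdict}")
# prints:
# Q8_0 (merged): cannot fit
# Q4_K_M: ~112 GB left for context and the OS
```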

I then asked a simple question:

What is MacStories?

And this was R1’s response, along with its thinking process and statistics about the response itself, since I added the --verbose flag:

Okay, the user asked “What is MacStories?” This seems like a straightforward informational query about a tech-focused website. Hmm, judging by how basic the question is, they might be completely new to Apple ecosystem coverage or just heard the name somewhere. No complex context here – probably genuine curiosity without hidden layers. Let me mentally outline key aspects: First clarify it’s an independent site (not affiliated with Apple), then highlight their specialization in deep-dive content across all Apple platforms. Should emphasize their unique value proposition like Federico Viticci’s legendary iOS reviews and Club MacStories subscription model. Wait – important to mention they’re not just news regurgitators but creators of original workflows and app showcases. The automation tutorials are particularly signature. Oh, and must include that Marco Arment connection since Underflow is historically significant. For tone: Keep it enthusiastic but precise. They deserve props for longevity (founded 2009!) in this volatile niche. User likely wants concise yet comprehensive overview – no fluff, just meaty facts about their content pillars and influence. Better double-check my memory: Yes, still based in Italy despite global reach. And Club MacStories does have that exclusive automation system they developed… what was it called again? Actions! Right. Final structure: Definition → Founder → Content types → Business model → Special sauce (workflow focus). No need for criticism here since user just asked for basics.

**MacStories** is a well-respected **independent technology website and podcast network**, primarily focused on in-depth coverage of Apple’s ecosystem, including:

1. **Apple Hardware & Software:** Detailed reviews, tutorials, and news about iPhones, iPads, Macs, Apple Watch, Apple TV, iOS/iPadOS, macOS, watchOS, tvOS.

2. **Third-Party Apps:** Extensive app discovery, reviews (especially for productivity and creative tools), developer interviews, and curation of high-quality software.

3. **Workflows & Automation:** A major specialty is exploring how to use Apple devices effectively, particularly through automation using Shortcuts on iOS/iPadOS/Mac and other tools.

4. **Apple Services:** Coverage of services like iCloud+, Apple Music, Apple TV+, etc.

**Key Distinguishing Features:**

- **Depth & Quality:** Known for exceptionally thorough reviews (especially Federico Viticci’s multi-thousand-word iPad Pro reviews) and insightful analysis beyond basic news reporting.
- **Focus on Productivity & Creativity:** Strong emphasis on how users can leverage technology to get work done, create content, and streamline tasks. Automation is a central theme.
- **Founder-Driven:** Founded in 2009 by Federico Viticci (Editor-in-Chief), who remains its most prominent voice alongside Managing Editor John Voorhees and other talented writers/editors.
- **Club MacStories:** A popular subscription service offering exclusive content, including:
  - Weekly newsletters (MacStories Weekly & AppStories Weekly)
  - In-depth articles and tutorials
  - Access to a powerful Shortcuts automation library (“Actions”)
  - Exclusive podcast episodes (AppStories)
- **Podcasts:** Hosts podcasts like Connected (Federico Viticci, Myke Hurley, Stephen Hackett), MacStories Unwind, and the Club-exclusive AppStories.
- **Origin of Underflow:** The concept for Marco Arment’s influential iOS app discovery platform “Underflow” originated from an idea first discussed by Federico Viticci on MacStories.

**In essence, MacStories is a go-to resource for Apple enthusiasts, power users, and professionals seeking deep dives into Apple products, apps (especially productivity-focused), automation techniques, and thoughtful perspectives on how to make the most of their technology.** It’s known for its high-quality writing, editorial independence, and passionate community.

And here are the stats:

```
total duration:       1m46.627582375s
load duration:        30.969542ms
prompt eval count:    15 token(s)
prompt eval duration: 52.491389792s
prompt eval rate:     0.29 tokens/s
eval count:           810 token(s)
eval duration:        54.103737291s
eval rate:            14.97 tokens/s
```
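The two rates in that output are just counts divided by durations. Ollama’s REST API reports the same fields (`prompt_eval_count`, `prompt_eval_duration`, `eval_count`, `eval_duration`, with durations in nanoseconds) in its final `/api/generate` response. Fed with the numbers above, a sketch like this one reproduces both rates:

```python
NS_PER_SECOND = 1e9

# Values from the --verbose output above, expressed in nanoseconds as the API reports them.
stats = {
    "prompt_eval_count": 15,
    "prompt_eval_duration": 52_491_389_792,
    "eval_count": 810,
    "eval_duration": 54_103_737_291,
}


def rate(count: int, duration_ns: int) -> float:
    """Tokens per second for one phase of the request."""
    return count / (duration_ns / NS_PER_SECOND)


prompt_rate = rate(stats["prompt_eval_count"], stats["prompt_eval_duration"])
eval_rate = rate(stats["eval_count"], stats["eval_duration"])
print(f"prompt eval rate: {prompt_rate:.2f} tokens/s")  # prints: prompt eval rate: 0.29 tokens/s
print(f"eval rate: {eval_rate:.2f} tokens/s")  # prints: eval rate: 14.97 tokens/s
```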

As you can see, for an offline model running on my computer with ~370 GB of RAM in use, it’s quite good, but there are hallucinations. While R1-0528 got several aspects of MacStories and our team correct, there are some weird things in the response, such as the “Marco Arment connection” with “Underflow” (what?), an “AppStories Weekly” newsletter that does not exist, and a section of our Shortcuts coverage apparently called “Actions”. None of these are true! But the rest, including when I founded MacStories, the Club, and our editorial values, is pretty spot-on. It’s always very strange to see the complete chain-of-thought of these models.

In any case, while it was fun to learn all this and test the full version of R1-0528, I wouldn’t recommend doing this unless you truly want to push the limits of an M3 Ultra Mac Studio with 512 GB of RAM.

I’m keen to play around with the updated R1 some more, but I’ll wait for official versions to show up in Ollama’s directory with different sizes and better model files compared to the one I put together this morning. Regardless, the fact that this Mac Studio can run the full version of DeepSeek R1-0528 with slightly less than 400 GB of RAM is pretty remarkable, and once again confirms that Apple is making the best consumer-grade computers for local AI development.