In recent months, headlines have highlighted both the benefits and the harms of AI applications. The benefits range from helping filmmakers enhance audio for accurate language depictions, as in the Oscar-nominated film “The Brutalist,” to streamlining the documentation process for healthcare providers. But while advancements in AI may enable creative solutions to audio and visual problems, AI has also posed risks and caused harm to hundreds of women and girls targeted by deepfake sex crimes. The same AI-powered transcription tools used in hospitals to document patient visits are also fabricating “chunks of text or even entire sentences,” creating serious risks for patients receiving sensitive care. And last year, an AI chatbot was involved in a teenager’s suicide.

These stories have revealed a critical reality: real lives are at stake with the deployment of AI applications. Yet our understanding of these issues comes mainly from ad hoc investigative reporting rather than firsthand information. In fact, despite these systems’ widespread adoption and growing impact, we have surprisingly limited data about how they function in the real world after deployment.

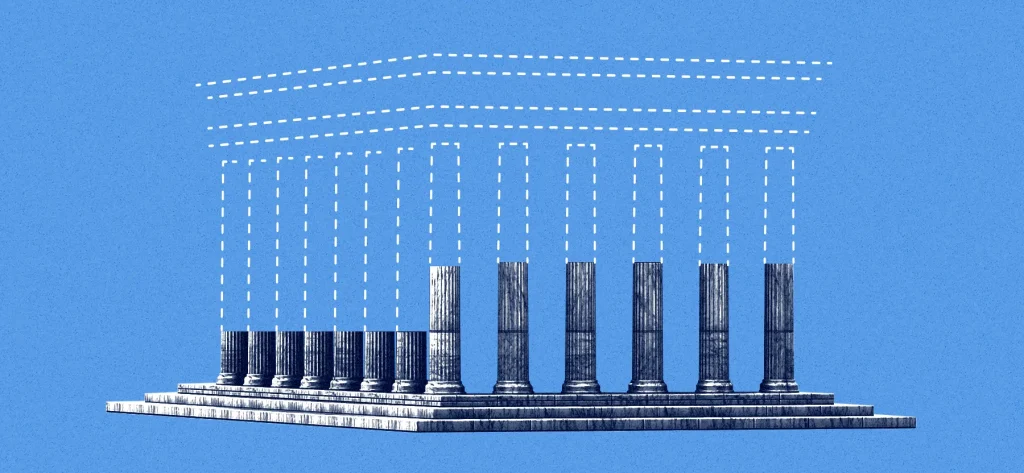

Our recent report, Documenting the Impacts of Foundation Models, highlights the need for change. Most industry and policymaking efforts center on ensuring that foundation models are “safe” to deploy, but companies must now take the lead in assessing the real-world impacts and implications of those models after deployment.

Change Starts With Industry

Some companies are already showing what this leadership can look like. Anthropic’s Economic Index, which aims to understand how its AI assistant Claude affects the labor market and the economy, is an example of how a model provider can share usage information that offers insight into how its own model affects particular industries. By sharing anonymized usage data, Anthropic is giving policymakers and researchers new information to assess the economic effects of its AI without compromising its competitive advantage.

Meta is also helping raise the bar. Its research on the sustainability implications of AI is a prime example of a model provider enabling and sharing research on societal impacts. By analyzing the carbon footprint of AI models across both the AI and hardware development life cycles, Meta researchers were able to identify ways to optimize AI models and reduce AI’s overall carbon footprint.

While these examples are promising, they remain the exception and not the rule. That is why we need more companies to follow suit in examining and monitoring AI’s impacts post-deployment.

“Despite the clear benefits, impact documentation is not yet an industry norm.”

Why Companies Must Lead

Foundation model providers design, train, release, and update the models that power AI applications, giving them unique visibility into how their models behave in the real world. Being at the center of the AI ecosystem means they have the responsibility to voluntarily document and share those insights in the absence of regulatory oversight.

As our report outlines, collecting, aggregating, and sharing post-deployment impact information provides four main benefits to actors across the foundation model value chain:

Amplifying societal benefits: Documenting post-deployment impacts increases awareness of foundation model benefits and improves stakeholder literacy while building trust.

Managing and mitigating risks: Documenting post-deployment impacts enables stakeholders to identify, assess, and mitigate potential or realized negative effects of AI systems on society.

Developing evidence-based policy: Documenting post-deployment impacts provides policymakers with crucial data to develop and implement effective, balanced regulations and governance frameworks that protect people while considering implementation costs.

Advancing documentation standards through shared learning: Multistakeholder collaboration in sharing post-deployment impact documentation helps establish best practices and moves the industry toward standardized approaches.

They Can’t Do It Alone

While model providers must lead this effort, documenting AI’s impact is a shared responsibility. Other actors across the AI value chain, such as application developers, researchers, policymakers, and civil society, also play crucial roles.

However, governments are changing priorities, with some focusing on promoting the development and deployment of AI systems in their own regions and others moving toward deregulation. These shifts have slowed regulatory progress even as AI continues to develop rapidly, and they have made it harder to advance global governance. This regulatory uncertainty makes voluntary initiatives and research not just beneficial, but essential. Industry-led transparency practices can reflect what works well in real-world use cases and help establish consistent industry standards, which can in turn inform regulatory efforts.

Where We Go From Here

The AI landscape is already undergoing another evolution with the emergence of AI agents, systems capable of taking actions in their virtual environments with minimal oversight, and our ability to understand their impacts remains limited. Understanding how these systems affect society, including the emerging impacts of agents on media integrity, labor and the economy, and public policy, is one of our priorities for 2025.

With AI policy shifting toward the promotion and deregulation of AI systems, we need industry actors to help shape the field and encourage other actors to cultivate an ecosystem of shared responsibility. Multistakeholder collaboration will be necessary to advance our understanding of foundation models’ impacts on society, but change starts with industry. To learn how organizations can lead on impact documentation and help shape a safer, more accountable ecosystem, read our full report.