Popular AI image generation service Midjourney has launched its first AI video generation model, V1, marking a pivotal shift for the company from image generation toward full multimedia content creation.

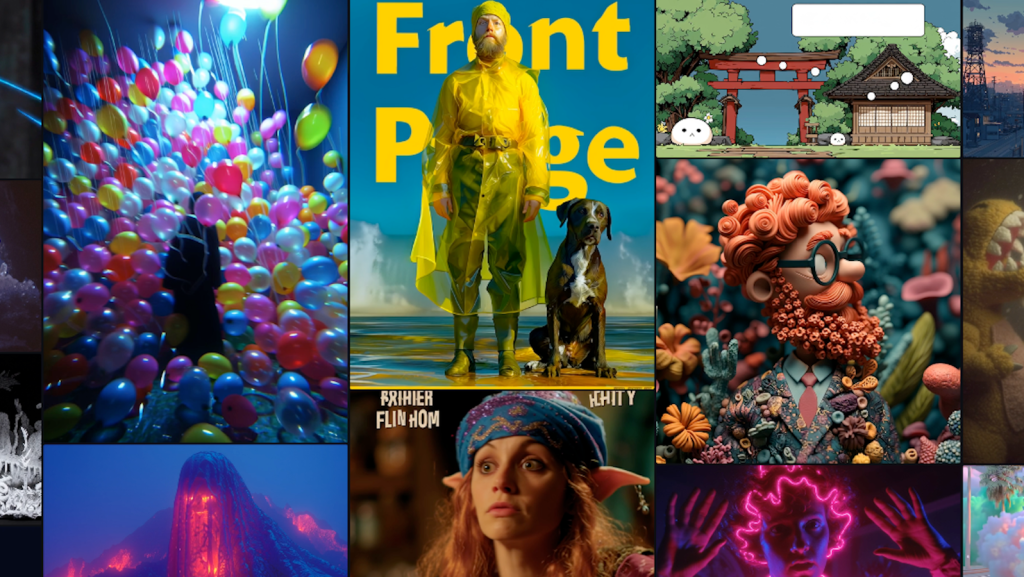

Starting today, Midjourney’s nearly 20 million users can animate images via the website, transforming their generated or uploaded stills into 5-second clips, with options to extend a clip up to roughly 20 seconds (in 4-second increments) and to guide the motion with text.

With the launch, the bootstrapped small lab Midjourney positions itself in a rapidly intensifying AI video race. At the same time, it’s also confronting serious legal challenges from two of the largest entertainment studios in the world.

What does it mean for AI creators and enterprises looking to harness the latest in creative tech for advertising, marketing or user engagement? And how does Midjourney stack up against a long and growing list of AI video model competitors? Read on to find out.

A new product built directly atop Midjourney’s popular AI image generator

Midjourney’s new offering extends its familiar image-based workflow, including its new v7 text-to-image model.

Users generate a still image, either within the Midjourney platform or by uploading an external file, then press “Animate” to turn that image into video.

Two primary modes exist: one uses automated motion synthesis, while the other lets users write a custom motion prompt that dictates via text how elements in the scene should move. In both cases the starting point is an image, so Midjourney video arrives as an image-to-video tool with optional text guidance.

From a creative standpoint, users can toggle between two motion settings. A low motion mode is optimized for ambient or minimalist movement, such as a character blinking or a light breeze shifting scenery, while a high motion mode attempts more dynamic animation of both subject and camera, though this can increase the chance of visual errors.

These settings appear on the Midjourney website in the right-hand options pane for a generated or uploaded image, below a field labeled “Animate Image.”

Each video job generates four different 5-second clips as options, and users can extend any clip in increments of about 4 seconds, up to a total of roughly 20 seconds.

While this is relatively short-form, the company has indicated that video duration and features will expand in future updates.

Midjourney, launched in summer 2022, is still considered by many AI image creators to be the premier or “gold standard” tool for AI image generation, thanks to its relatively frequent updates and its more realistic and varied creation options, so expectations were high for its entry into the AI video space.

Initial reactions from users we’ve seen have been largely positive, with some, like Perplexity AI designer Phi Hoang (@apostraphi on X), commenting in a post: “it’s surpassing all my expectations.”

Here’s a sample generation I created with my personal Midjourney account:

Affordable price

Midjourney is offering video access as part of its existing subscription plans, starting at $10 per month.

The company states that each video job will cost approximately 8x more than an image generation task. However, since each video job produces 20 seconds of content (four 5-second clips), the cost per second is roughly equivalent to generating one still image, a pricing model that appears to undercut many competitors.
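To make the pricing claim concrete, here is a rough back-of-the-envelope sketch of that arithmetic. It works in relative units rather than dollars, normalizing one image generation task to a cost of 1. The "8x" multiplier and the four 5-second clips per job come from the figures above; the assumption that a single image task returns a grid of four images is ours, as are all the variable and function names.

```python
# Illustrative arithmetic only: costs are relative units, not dollars.
IMAGE_JOB_COST = 1.0        # one image generation task, normalized to 1 unit
VIDEO_COST_MULTIPLIER = 8   # "approximately 8x" an image task, per the company
CLIPS_PER_VIDEO_JOB = 4     # each video job returns four clips
SECONDS_PER_CLIP = 5        # each clip is 5 seconds long
IMAGES_PER_IMAGE_JOB = 4    # ASSUMPTION: an image task yields a grid of four images


def video_cost_per_second() -> float:
    """Cost of one second of video, measured in image-task units."""
    video_job_cost = VIDEO_COST_MULTIPLIER * IMAGE_JOB_COST
    total_seconds = CLIPS_PER_VIDEO_JOB * SECONDS_PER_CLIP
    return video_job_cost / total_seconds


def single_images_per_video_second() -> float:
    """The same cost, expressed as a number of individual still images."""
    return video_cost_per_second() * IMAGES_PER_IMAGE_JOB


if __name__ == "__main__":
    print(f"Seconds of video per job: {CLIPS_PER_VIDEO_JOB * SECONDS_PER_CLIP}")
    print(f"Image tasks per video second: {video_cost_per_second():.2f}")
    print(f"Single images per video second: {single_images_per_video_second():.2f}")
```

Under these assumptions, a second of video costs a fraction of one image task, or between one and two individual stills, which is consistent with the "roughly one image per second" framing. Actual billing is metered in GPU time and may differ.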

A “video relax mode” is being tested for “Pro” subscribers and above. This mode, like its counterpart in image generation, would offer delayed processing in exchange for reduced compute costs. Fast generation remains metered through GPU minutes based on tiered subscription plans.

Community commentators have largely received the pricing positively. AI content creator @BLVCKLIGHTai emphasized on social media that the cost is roughly in line with what users pay for upscaling images—making the tool surprisingly affordable for short-form video experimentation.

Midjourney’s $10 entry price is comparable to rival Luma AI’s “Web Lite Plan” at $9.99 per month and below Runway’s “Standard” plan at $15 per month.

Here are some of the other offerings available:

No sound yet and a more limited built-in editor than AI video rivals such as Runway, Sora, Luma

The model’s most noticeable limitation is its lack of sound.

Unlike competitors such as Google’s Veo 3 and Luma Labs’ Dream Machine, Midjourney’s system does not generate accompanying audio tracks or ambient sound effects.

For now, any soundtrack would need to be added manually in post-production using separate tools.

In addition, Midjourney’s outputs remain short and are capped at 20 seconds. There is no current support for editing timelines, scene transitions, or continuity between clips.

Midjourney has stated this is only the beginning and that the initial release is intended to be exploratory, accessible, and scalable.

Rising stakes in crowded AI video market

The launch lands at a time when AI video generation is rapidly becoming one of the most competitive corners of the generative AI landscape.

Tech giants, venture-backed startups, and open-source projects are all moving fast.

This week, Chinese startup MiniMax released Hailuo 02, an upgrade to its previous video model. Early feedback has praised its realism, motion adherence to prompts, and 1080p resolution, though some reviewers noted that render times are still relatively slow.

The model appears especially adept at interpreting complex motion or cinematic camera angles, putting it in direct comparison with Western offerings like Runway’s Gen-3 Alpha and Google’s Veo line.

Meanwhile, Luma Labs’ Dream Machine has gained traction for its ability to co-generate audio alongside high-fidelity video, a feature missing from Midjourney’s new release. Like Runway, it also allows for re-stylizing or “re-skinning” video with a new feature called Modify Video.

Google’s Veo 3 and OpenAI’s Sora model are similarly working toward broader multimodal synthesis, integrating text, image, video, and sound into cohesive, editable scenes.

Midjourney’s bet appears to be on simplicity and cost-effectiveness—a “good enough” solution priced for scale—but that also means it launches without many advanced features now standard in the premium AI video tier.

The shadow of litigation from Disney and Universal over IP infringement

Just days before the launch, Midjourney was named in a sweeping copyright infringement lawsuit filed by Disney and Universal in U.S. District Court.

The complaint, spanning more than 100 pages, accuses Midjourney of training its models on copyrighted characters—including those from Marvel, Star Wars, The Simpsons, and Shrek—without authorization and continuing to allow users to generate derivative content.

The studios allege that Midjourney has created a “bottomless pit of plagiarism,” intentionally enabling users to produce downloadable images featuring characters like Darth Vader, Elsa, Iron Man, Bart Simpson, Shrek, and Toothless with little friction.

They further claim that Midjourney used data scraping tools and web crawlers to ingest copyrighted materials and failed to implement technical safeguards to block outputs resembling protected IP.

Of particular note: the lawsuit preemptively names Midjourney’s Video Service as a likely source of future infringement, stating that the company had begun training the model before launch and was likely already replicating protected characters in motion.

According to the complaint, Midjourney earned $300 million in revenue in 2024 and serves nearly 21 million users. The studios argue that this scale gives the platform a commercial advantage built atop uncompensated creative labor.

Disney’s general counsel, Horacio Gutierrez, stated plainly: “Piracy is piracy. And the fact that it’s done by an AI company does not make it any less infringing.”

The lawsuit is expected to test the limits of U.S. copyright law as it relates to AI training data and output control—and could influence how platforms like Midjourney, OpenAI, and others must structure future content filters or licensing agreements.

For enterprises concerned about infringement risks, services with built-in indemnity like OpenAI’s Sora or Adobe Firefly Video are probably better options for AI video creation.

A ‘world model’ and real-time world generation are the goal

Despite the immediate risks, Midjourney’s long-term roadmap is clear and ambitious. In public statements surrounding the video model’s release, the company said its goal is to eventually merge static image generation, animated motion, 3D spatial navigation, and real-time rendering into a single, unified system, also known as a world model.

These systems aim to let users navigate through dynamically generated environments—spaces where visuals, characters, and user inputs evolve in real time, like immersive video games or VR experiences.

They envision a future where users can issue commands like “walk through a market in Morocco at sunset,” and the system responds with an explorable, interactive simulation—complete with evolving visuals and perhaps, eventually, generative sound.

For now, the video model serves as an early step in this direction. Midjourney has described it as a “technical stepping stone” to more complex systems.

But Midjourney is far from the only AI research lab pursuing such ambitious plans.

Odyssey, a startup co-founded by self-driving tech veterans Oliver Cameron and Jeff Hawke, recently debuted a system that streams video at 30 frames per second with spatial interaction capabilities. Their model attempts to predict the “next state of the world” based on prior states and actions, enabling users to look around and explore scenes as if navigating a 3D space.

Odyssey combines AI modeling with its own 360-degree camera hardware and is pursuing integrations with 3D platforms like Unreal Engine and Blender for post-generation editing. However, it does not yet allow for much user control beyond moving the position of the camera and seeing what random sights the model produces as the user navigates the generated space.

Similarly, Runway, a longtime player in AI video generation, has begun folding world modeling into its public roadmap. The company’s AI video models, the latest of which is Gen-4, introduced in April 2025, support advanced AI camera controls that let users arc around subjects, zoom in and out, or glide smoothly across environments. These features begin to blur the line between video generation and scene simulation.

In a 2023 blog post, Runway’s CTO Anastasis Germanidis defined general world models as systems that understand environments deeply enough to simulate future events and interactions within them. In other words, they’re not just generating what a scene looks like—they’re predicting how it behaves.

Other major AI efforts in this space include:

DeepMind, which has conducted foundational research into world modeling for robotic training and reinforcement learning;

World Labs, the new venture led by AI researcher Fei-Fei Li, focused specifically on simulation-centric models;

Microsoft, which is exploring world models for enterprise applications like digital twins and simulation-based training;

Decart, a stealthier but well-funded startup working on multi-agent simulation models.

While Midjourney’s approach has so far emphasized accessibility and ease of use, it’s now signaling an evolution toward these more sophisticated simulation frameworks. The company says that to achieve this, it must first build the necessary components: static visuals (its original image models), motion (video models), spatial control (3D positioning), and real-time responsiveness. Its new video model, then, serves as one foundational block in this longer arc.

This puts Midjourney in a global race—not just to generate beautiful media, but to define the infrastructure of interactive, AI-generated worlds.

A calculated and promising leap into an increasingly complicated competitive space

Midjourney’s entry into video generation is a logical extension of its popular image platform, priced for broad access and designed to lower the barrier for animation experimentation. It offers an easy path for creators to bring their visuals to life—at a cost structure that, for now, appears both aggressive and sustainable.

But this launch also places the company squarely in the crosshairs of multiple challenges. On the product side, it faces capable and fast-moving competitors with more features and less legal baggage. On the legal front, it must defend its practices in a lawsuit that could reshape how AI firms are allowed to train and deploy generative models in the U.S.

For enterprise leaders evaluating AI creative platforms, Midjourney’s release presents a double-edged sword: a low-cost, fast-evolving tool with strong user adoption — but with unresolved regulatory and IP exposure that could affect reliability or continuity in enterprise deployments.

The question going forward is whether Midjourney can maintain its velocity without hitting a legal wall or whether it will have to significantly restructure its business and technology to stay viable in a maturing AI content ecosystem.