CAIS and Scale AI are excited to announce the launch of Humanity’s Last Exam, a project aimed at measuring how close we are to achieving expert-level AI systems. The project seeks to build the world’s most difficult public AI benchmark by gathering questions from experts across all fields. People who submit successful questions will be invited as coauthors on the paper for the dataset and have a chance to win money from a $500,000 prize pool.

Why Participate?

AI is developing at a rapid pace. Just a few years ago, AI systems performed no better than random chance on MMLU, the AI community’s most-downloaded benchmark (developed by CAIS). But just last week, OpenAI’s newest model performed near the ceiling on all of the most popular benchmarks, including MMLU, and received top scores on a variety of highly competitive STEM olympiads. Humanity must maintain a good understanding of the capabilities of AI systems. Existing tests have now become too easy, so we can no longer track AI developments well or tell how far AI systems are from becoming expert-level.

Despite these advances, AI systems are still far from being able to answer difficult research questions and other intellectually demanding questions. To keep track of how far AI systems are from expert-level capabilities, we are developing Humanity’s Last Exam, which aims to be the world’s most difficult AI test.

Your Role

We’re assembling the largest, broadest coalition of experts in history to design questions that test how far AIs are from the human intelligence frontier. If there is a question that would genuinely impress you if an AI could solve it, we’d like to hear it from you!

If one or more of your questions is accepted, you will be offered optional co-authorship of the resulting paper. We have already received questions from researchers from MIT, UC Berkeley, Stanford, and more. The more questions accepted, the higher your name will appear.

The top 50 questions will earn $5,000 each.

The next top 500 questions will earn $500 each.

Prizes may be awarded based on question quality or on question novelty compared to other questions. People who submitted questions prior to this announcement are also eligible for these prizes. A small set of questions will be kept private to detect whether an AI is memorizing answers to public questions, but prizes and co-authorship can still be awarded to people whose questions are kept in the private set.

Submission Guidelines

Challenge Level: Questions should be difficult for non-experts and not easily answerable via a quick online search. Avoid trick questions. Frontier AI systems are very good at answering even master’s-level questions. It’s strongly encouraged that question-writers have 5+ years of experience in a technical industry job (e.g., SpaceX) or are a PhD student or above in academic training. In preparation for Humanity’s Last Exam, we found that questions written by undergraduates tend to be too easy for the models. As a rule of thumb, if a randomly selected undergraduate can understand what is being asked, it is likely too easy for the frontier LLMs of today and tomorrow.

Objectivity: Answers should be accepted by other experts in the field and free from personal taste, ambiguity, or subjectivity. Provide all necessary context and definitions within the question. Use standard, unambiguous jargon and notation.

Originality: Questions must be your own work and not copied from others.

Confidentiality: Questions and answers should not be publicly available. You may use questions from past exams you’ve given if they’re not accessible to the public.

Weaponization Restrictions: Do not submit questions related to chemical, biological, radiological, nuclear, cyberweapons, or virology.

Terms and conditions here.

Deadline: November 1, 2024

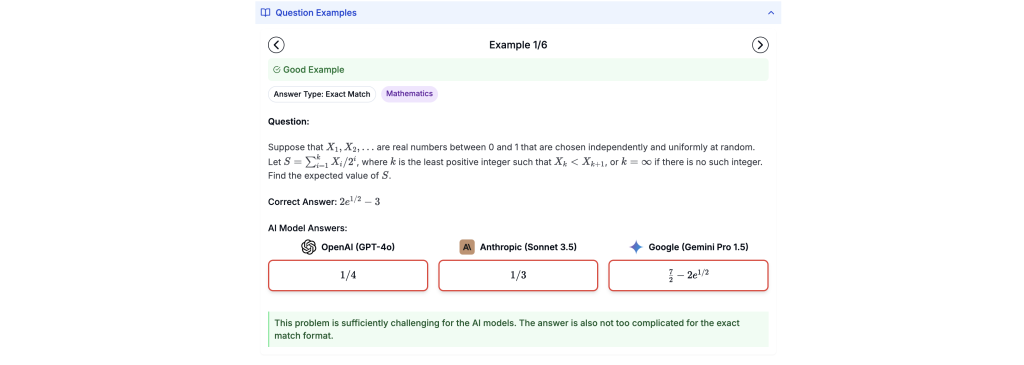

For a detailed list of instructions and example questions, please visit agi.safe.ai/submit.