Crafting unique, customized experiences that resonate with customers is a potent strategy for boosting engagement and fostering brand loyalty. However, creating dynamic personalized content is challenging and time-consuming. It requires real-time data processing, complex algorithms for customer segmentation, and continuous optimization to adapt to shifting behaviors and preferences, all while maintaining scalability and accuracy. Despite these challenges, the potential rewards make personalization a worthwhile pursuit for many businesses. Amazon Personalize is a fully managed machine learning (ML) service that uses your data to generate product and content recommendations for your users. Amazon Personalize helps accelerate time-to-value with custom models trained on data you provide, such as your users, catalog items, and the interactions between users and items, to generate personalized content and product recommendations. You can choose from various recipes (algorithms for specific use cases) to find the ones that fit your needs. Examples include recommending the items that a user is most likely to engage with next given their past interactions, or the next best action that a user is most likely to take.

To maintain a personalized user experience, it’s crucial to implement machine learning operations (MLOps) practices, including continuous integration, deployment, and training of your ML models. MLOps facilitates seamless integration across various ML tools and frameworks, streamlining the development process. A robust machine learning solution for maintaining personalized experiences typically includes automated pipeline construction, as well as automated configuration, training, retraining, and deployment of personalization models. While services like Amazon Personalize offer a ready-to-use recommendation engine, establishing a comprehensive MLOps lifecycle for a personalization solution remains a complex undertaking. This process involves intricate steps to make sure that models remain accurate and relevant as user behaviors and preferences evolve over time.

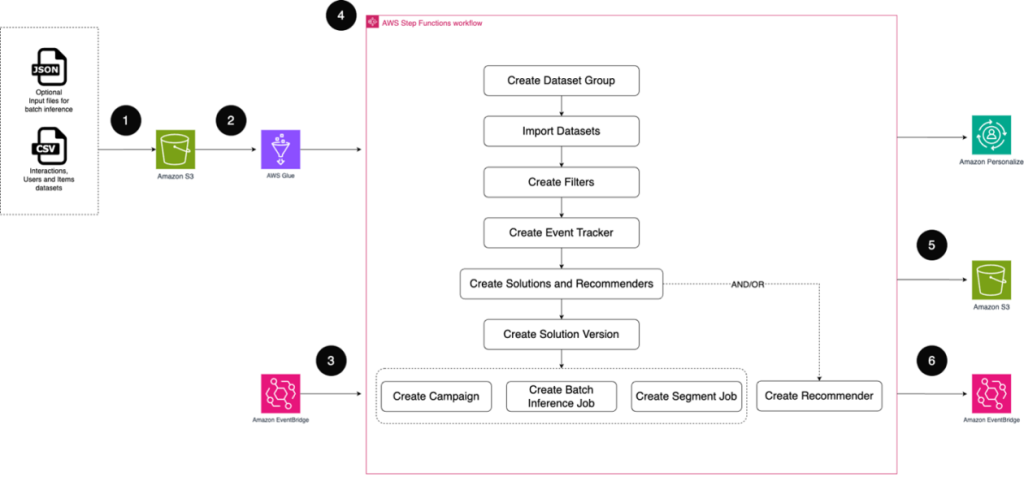

This blog post presents an MLOps solution that uses AWS Cloud Development Kit (AWS CDK) and services like AWS Step Functions, Amazon EventBridge and Amazon Personalize to automate provisioning resources for data preparation, model training, deployment, and monitoring for Amazon Personalize.

Features and benefits

Deploying this solution offers improved scalability and traceability and allows you to quickly set up a production-ready environment to seamlessly deliver tailored recommendations to users using Amazon Personalize. This solution:

Streamlines the creation and management of Amazon Personalize resources.

Provides greater flexibility in resource management and selective feature activation.

Enhances readability and comprehensibility of complex workflows.

Enables event-driven architecture by publishing key Amazon Personalize events, allowing real-time monitoring, and enabling automated responses and integrations with other systems.

Includes automated creation of Amazon Personalize resources, including recommenders, solutions, and solution versions.

Facilitates end-to-end workflow automation for dataset import, model training, and deployment in Amazon Personalize.

Improves organization and modularity of complex processes through nested Step Functions state machines.

Provides flexible activation of specific solution components using AWS CDK.

Solution overview

This solution uses AWS CDK Level 3 (L3) constructs. Constructs are the basic building blocks of AWS CDK applications. A construct is a component within your application that represents one or more AWS CloudFormation resources and their configuration. L3 constructs, also called patterns, combine multiple resources to implement a common task.

The solution architecture is shown in the preceding figure and includes:

An Amazon Simple Storage Service (Amazon S3) bucket is used to store interactions, users, and items datasets. In this step, you need to configure your bucket permissions so that Amazon Personalize and AWS Glue can access the datasets and input files.

AWS Glue is used to preprocess the interactions, users, and item datasets. This step helps ensure that the datasets comply with the training data requirements of Amazon Personalize. For more information, see Preparing training data for Amazon Personalize.

EventBridge is used to schedule regular updates by triggering the workflow. It is also used to publish events related to resource provisioning. The Step Functions workflow is driven by the input configuration file, so you use that same configuration as the input when you set up the scheduled start of Step Functions.

A Step Functions workflow manages all resource provisioning for the Amazon Personalize dataset group (including datasets, schemas, event tracker, filters, solutions, campaigns, and batch inference jobs). Step Functions provides monitoring across the solution through event logs, and you can visually track the stages of your workflow in the Step Functions console. You can adjust the input configuration file to better fit your use case by defining schemas, recipes, and inference options. The workflow includes the following steps:

A preprocessing job that runs an AWS Glue job, if provided. This step facilitates any preprocessing of the data that might be required.

Create a dataset group, which is a container for Amazon Personalize resources.

Create dataset import jobs that load the datasets from the specified S3 bucket.

Create filters that define any filtering that you want to apply on top of the recommendations.

Create an event tracker for ingesting real-time events, such as user interactions, which in turn influence the recommendations provided.

Create solutions for custom resources and recommenders for domain use cases.

Create a campaign, a batch inference job, or a batch segment job to generate inferences for real-time, batch, and user segmentation use cases, respectively.

If you have a batch inference use case, then recommendations that match your inputs will be output into the S3 bucket that you defined in the input configuration file.

An Amazon EventBridge event bus, where resource status notification updates are posted throughout the AWS Step Functions workflow.
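The order of the workflow steps above can be summarized in a short Python sketch. It derives which stages a given input configuration would trigger. This is a hypothetical illustration rather than code from the solution's repository, and it rests on three assumptions:

- The configuration is a dict that uses the top-level sections described in the Create input configuration section.
- solutions is a list whose entries may contain campaigns, batchInferenceJobs, or batchSegmentJobs.
- A hypothetical preprocessing key holds the optional AWS Glue job settings.

```python
from typing import Any

# (config key, stage label) in the order the workflow handles them.
# "preprocessing" is a hypothetical key; check input_media.json for the real one.
STAGE_ORDER = [
    ("preprocessing", "Run AWS Glue preprocessing job"),
    ("datasetGroup", "Create dataset group"),
    ("datasets", "Create datasets and dataset import jobs"),
    ("filters", "Create filters"),
    ("eventTracker", "Create event tracker"),
    ("solutions", "Create solutions and solution versions"),
    ("recommenders", "Create recommenders"),
]

# Inference options that a solution entry may request, in workflow order.
INFERENCE_STAGES = [
    ("campaigns", "Create campaign (real-time)"),
    ("batchInferenceJobs", "Run batch inference job"),
    ("batchSegmentJobs", "Run batch segment job"),
]


def plan_stages(config: dict[str, Any]) -> list[str]:
    """Return the stages an input configuration would trigger, in workflow order."""
    # Missing or empty sections are skipped.
    stages = [label for key, label in STAGE_ORDER if config.get(key)]
    for key, label in INFERENCE_STAGES:
        # Add each inference stage once if any solution entry requests it.
        if any(solution.get(key) for solution in config.get("solutions", [])):
            stages.append(label)
    return stages
```

In this model, a section that is omitted or left empty is skipped. This mirrors how you include or exclude sections of the input configuration based on the artifacts you want to create.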

Prerequisites

Before you deploy the AWS CDK stack, make sure that you have the following prerequisites in place:

Install and configure AWS Command Line Interface (AWS CLI).

Install Python 3.12 or newer.

Install Node.js 20.16.0 or newer.

Install AWS CDK 2.88.0 or newer.

Install Docker 27.5.1 or newer (required for AWS Lambda function bundling).

Newer versions of the AWS CLI, Python, Node.js, and the AWS CDK are generally compatible. This solution has been tested with the versions listed.

Deploy the solution

With the prerequisites in place, use the following steps to deploy the solution:

Clone the repository to a new folder on your desktop using the following command:

Create a Python virtual environment for development:

Define an Amazon Personalize MLOps pipeline instance PersonalizeMlOpsPipeline (see personalize_pipeline_stack.py for the complete example, which also includes different inference options). In this walkthrough, you create a custom solution with an associated campaign and batch inference job:

Where:

'PersonalizePipelineSolution' – The name of the pipeline solution stack

pre_processing_config – Configuration for the preprocessing job that transforms raw data into a format usable by Amazon Personalize. To use AWS Glue jobs for preprocessing, specify the AWS Glue job class (PreprocessingGlueJobFlow) as the value of the job_class parameter. Currently, only AWS Glue jobs are supported. You pass the name of the AWS Glue job that you want to run as part of the input configuration. This setting doesn't deploy the AWS Glue job that preprocesses the files. That job must be created outside of this solution, and its name is passed as an input to the state machine. The accompanying repository includes a sample AWS Glue job that shows how preprocessing can be done.

enable_filters – A Boolean value to enable Amazon Personalize filters, which are applied on top of the recommendations. When set to true, the pipeline creates the state machines needed to create filters. Supported options are true or false. If you specify false, the corresponding state machine is not deployed.

enable_event_tracker – A Boolean value to enable the Amazon Personalize event tracker. When set to true, the pipeline will create the state machines needed to create an event tracker. Supported options are true or false. If you specify this value as false, the corresponding state machine is not deployed.

recommendation_config – Configuration options for recommendations. The two types currently supported are solutions and recommenders. Within the solutions type, you can have multiple options such as campaigns, batchInferenceJobs, and batchSegmentJobs. Based on the selected options, the corresponding state machine and components are created. In the earlier example, we used campaigns and batchInferenceJobs as the option, which means that only the campaigns and batch inference job state machines will be deployed with the AWS CDK.

After the infrastructure is deployed, you can also enable and disable certain options through the state machine input configuration file. The AWS CDK code controls which components are deployed in your AWS environment, and the input configuration controls which of those components run.

Preprocessing: As an optional step, you can use an existing AWS Glue job to preprocess your data before it goes into Amazon Personalize, which uses this data to generate recommendations for your end users. This post demonstrates the process using the MovieLens dataset, but you can adapt it for your own datasets or custom processing needs. To do so, navigate to the glue_job folder and modify the movie_script.py file accordingly, or create an entirely new AWS Glue job tailored to your specific requirements. Although this step is optional, it can be crucial for making sure that your data is formatted correctly for Amazon Personalize to generate accurate recommendations.
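The construct's code isn't reproduced here. The following is a hypothetical model of how the AWS CDK options above map to deployed components. The PipelineOptions class, its field names, and the component labels are illustrative assumptions, not the solution's API. See personalize_pipeline_stack.py in the repository for the real construct.

```python
from dataclasses import dataclass, field
from typing import Optional

# Inference options the post lists for the "solutions" recommendation type.
SUPPORTED_SOLUTION_OPTIONS = {"campaigns", "batchInferenceJobs", "batchSegmentJobs"}


@dataclass
class PipelineOptions:
    """Hypothetical stand-in for the PersonalizeMlOpsPipeline parameters."""

    pre_processing_job_class: Optional[str] = None  # e.g. "PreprocessingGlueJobFlow"
    enable_filters: bool = False
    enable_event_tracker: bool = False
    # Recommendation type ("solutions" or "recommenders") -> enabled options.
    recommendation_config: dict[str, list[str]] = field(default_factory=dict)


def optional_state_machines(opts: PipelineOptions) -> list[str]:
    """Return the optional components these options would deploy, in a fixed order."""
    deployed: list[str] = []
    if opts.pre_processing_job_class:
        deployed.append("preprocessing")
    if opts.enable_filters:
        deployed.append("filters")
    if opts.enable_event_tracker:
        deployed.append("eventTracker")
    if "recommenders" in opts.recommendation_config:
        deployed.append("recommenders")
    if "solutions" in opts.recommendation_config:
        deployed.append("solutions")
        for option in opts.recommendation_config["solutions"]:
            if option not in SUPPORTED_SOLUTION_OPTIONS:
                raise ValueError(f"Unsupported solutions option: {option!r}")
            deployed.append(option)
    return deployed
```

In this model, a disabled flag simply omits the corresponding component, as described for enable_filters and enable_event_tracker. The real construct may differ in naming and in which core state machines it always deploys.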

Make sure that the AWS Glue job is configured to write its output to an S3 bucket. This bucket should then be specified as an input source in the Step Functions input configuration file.

Verify that the AWS Glue service has the necessary permissions to access the S3 bucket mentioned in your script.

In the input configuration, you’ll need to provide the name of the AWS Glue job that will be executed by the main state machine workflow. It’s crucial that this specified AWS Glue job runs without any errors, because any failures could potentially cause the entire state machine execution to fail.
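As a rough illustration of what such a preprocessing job does, the following sketch converts MovieLens-style ratings rows into an Amazon Personalize interactions file. The input columns are userId, movieId, rating, and timestamp; the output columns are USER_ID, ITEM_ID, and TIMESTAMP. This is plain Python using the csv module. It is neither the repository's movie_script.py nor an AWS Glue script. The optional min_rating filter, which keeps only stronger positive signals, is an assumption you may not need.

```python
import csv
import io
from typing import Iterable


def ratings_to_interactions_csv(lines: Iterable[str], min_rating: float = 0.0) -> str:
    """Convert MovieLens ratings CSV lines into Amazon Personalize interactions CSV.

    Rows with a rating below min_rating are dropped; the rating column itself is
    not carried over.
    """
    reader = csv.DictReader(lines)
    out = io.StringIO()
    writer = csv.writer(out, lineterminator="\n")
    # Reserved column names that Amazon Personalize expects for interactions.
    writer.writerow(["USER_ID", "ITEM_ID", "TIMESTAMP"])
    for row in reader:
        if float(row["rating"]) < min_rating:
            continue
        # MovieLens timestamps are already Unix epoch seconds.
        writer.writerow([row["userId"], row["movieId"], int(row["timestamp"])])
    return out.getvalue()
```

csv.DictReader accepts any iterable of lines, so you can also pass a file object opened with newline="". In AWS Glue, the same mapping would more typically be written as a PySpark column selection and rename.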

Package and deploy the solution with AWS CDK, allowing for the most flexibility in development:

Before you can deploy the pipeline using AWS CDK, you need to set up AWS credentials on your local machine. Refer to Set up AWS temporary credentials for more details.

Run the pipeline

Before initiating the pipeline, create the following resources and note their names for future reference.

Set up an S3 bucket for dataset storage. If you plan to use the preprocessing step, this should be the same bucket that the AWS Glue job writes its output to.

Update the S3 bucket policy to grant Amazon Personalize the necessary access permissions. See Giving Amazon Personalize access to Amazon S3 resources for policy examples.

Create an AWS Identity and Access Management (IAM) role to be used by the state machine for accessing Amazon Personalize resources.

You can find detailed instructions and policy examples in the GitHub repository.
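The bucket policy step can also be scripted. The following sketch builds a policy that grants the Amazon Personalize service principal read access to the dataset bucket and write access for batch outputs. It then applies the policy with the boto3 S3 put_bucket_policy call. The policy shape follows the pattern described in Giving Amazon Personalize access to Amazon S3 resources as best understood here. Treat it as an assumption and compare it with that page, which may recommend additional conditions. Note that put_bucket_policy replaces any existing bucket policy.

```python
import json
from typing import Any


def personalize_bucket_policy(bucket_name: str) -> dict[str, Any]:
    """Build a bucket policy that lets Amazon Personalize read from and write to a bucket."""
    return {
        "Version": "2012-10-17",
        "Id": "PersonalizeS3BucketAccessPolicy",
        "Statement": [
            {
                "Sid": "PersonalizeS3BucketAccessPolicy",
                "Effect": "Allow",
                "Principal": {"Service": "personalize.amazonaws.com"},
                # GetObject/ListBucket for dataset import; PutObject for batch outputs.
                "Action": ["s3:GetObject", "s3:ListBucket", "s3:PutObject"],
                "Resource": [
                    f"arn:aws:s3:::{bucket_name}",
                    f"arn:aws:s3:::{bucket_name}/*",
                ],
            }
        ],
    }


def apply_bucket_policy(s3_client: Any, bucket_name: str) -> None:
    """Attach the policy using an S3 client such as boto3.client("s3").

    This overwrites any bucket policy that is already in place.
    """
    s3_client.put_bucket_policy(
        Bucket=bucket_name,
        Policy=json.dumps(personalize_bucket_policy(bucket_name)),
    )
```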

After you've set up these resources, you can create the input configuration file for the Step Functions state machine. If you configure the optional AWS Glue job, it creates the input files that the pipeline requires. Refer to Configure the Glue Job to create the output files for more details.

Create input configuration

This input file is crucial because it contains all the information needed to create and manage your Amazon Personalize resources. The input configuration JSON acts as the input to the Step Functions state machine. The file can contain the following top-level objects:

datasetGroup

datasets

eventTracker

filters

solutions (can contain campaigns, batchInferenceJobs and batchSegmentJobs)

recommenders

Customize the configuration file according to your specific requirements and include or exclude sections based on the Amazon Personalize artifacts that you want to create. For the dataset import jobs in the datasets section, replace AWS_ACCOUNT_ID, S3_BUCKET_NAME and IAM_ROLE_ARN with the appropriate values. The following is a snippet of the input configuration file. For a complete sample, see input_media.json.

Likewise, if you're using batch inference or batch segment jobs, remember to also update the bucket name and IAM role ARN placeholders in those sections. Verify that the input files required for batch inference are stored in your S3 bucket. Adjust the file paths in your configuration to reflect the location of these files within your bucket structure. This helps ensure that Amazon Personalize can access the correct data when running these batch processes.

Adjust the AWS Glue job name in the configuration file if you have configured preprocessing as part of the AWS CDK stack.

See the property table for a deep dive into each property, including whether it's optional or required.
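A single unreplaced placeholder can make an execution fail partway through, so it can help to check the configuration before starting the state machine. The following helper is hypothetical and not part of the solution. It replaces the AWS_ACCOUNT_ID, S3_BUCKET_NAME, and IAM_ROLE_ARN placeholders named above in every string of a JSON template, and raises an error if any remain. If your copy of input_media.json uses other placeholders, add them to PLACEHOLDERS.

```python
import json
from typing import Any

# Placeholders named in this post; the sample file may contain others.
PLACEHOLDERS = ("AWS_ACCOUNT_ID", "S3_BUCKET_NAME", "IAM_ROLE_ARN")


def _substitute(node: Any, values: dict[str, str]) -> Any:
    """Recursively replace placeholder text inside every string value."""
    if isinstance(node, dict):
        return {key: _substitute(val, values) for key, val in node.items()}
    if isinstance(node, list):
        return [_substitute(item, values) for item in node]
    if isinstance(node, str):
        for name, value in values.items():
            node = node.replace(name, value)
    return node


def render_config(template: str, values: dict[str, str]) -> dict[str, Any]:
    """Fill placeholders in a JSON template and fail if any are left unreplaced."""
    config = _substitute(json.loads(template), values)
    rendered = json.dumps(config)
    leftover = [name for name in PLACEHOLDERS if name in rendered]
    if leftover:
        raise ValueError(f"Unreplaced placeholders: {leftover}")
    return config
```

Substituting on the parsed JSON rather than on the raw text means that a value containing a quote or backslash can't corrupt the file's structure.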

Execute the pipeline

You can run the pipeline by starting the main state machine, named PersonalizePipelineSolution, from the Step Functions console. Alternatively, you can set up a schedule in EventBridge, as described step by step in the Schedule the workflow for continued maintenance of the solution section of this post.

In the AWS Management Console for Step Functions, navigate to State machines and select PersonalizePipelineSolution.

Choose Start execution and paste the contents of the configuration file you created for your use case (see the Create input configuration section) as the execution input.

Choose Start execution and monitor the state machine execution. The Step Functions console shows a visual representation of the workflow, so you can track which stage the execution has reached. Event logs show the progress of each stage and report any errors. The following figure is an example of a completed workflow:

After the workflow finishes, you can view the resources in the Amazon Personalize console. For batch inference jobs specifically, you can locate the corresponding step under the Inference tasks section of the graph, and within the Custom Resources area of the Amazon Personalize console.
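As an alternative to the console, you can start an execution programmatically with the Step Functions StartExecution API. In this sketch, build_start_execution_request and wait_for_execution are hypothetical helper names. The state machine ARN is a placeholder that you can copy from the Step Functions console. The boto3 calls appear only in the usage comments, so the helpers can be exercised without AWS credentials.

```python
import json
import time
from typing import Any, Optional


def build_start_execution_request(
    state_machine_arn: str, config: dict[str, Any], name: Optional[str] = None
) -> dict[str, Any]:
    """Build keyword arguments for the Step Functions StartExecution API."""
    request: dict[str, Any] = {
        "stateMachineArn": state_machine_arn,
        # The input configuration is passed to the state machine as a JSON string.
        "input": json.dumps(config),
    }
    if name:
        # Optional; when omitted, Step Functions generates a unique execution name.
        request["name"] = name
    return request


def wait_for_execution(sfn_client: Any, execution_arn: str, poll_seconds: int = 30) -> str:
    """Poll DescribeExecution until the execution is no longer RUNNING."""
    while True:
        status = sfn_client.describe_execution(executionArn=execution_arn)["status"]
        if status != "RUNNING":
            return status  # for example SUCCEEDED, FAILED, TIMED_OUT, or ABORTED
        time.sleep(poll_seconds)


# Example usage (requires boto3 and AWS credentials; the ARN is a placeholder):
# sfn = boto3.client("stepfunctions")
# request = build_start_execution_request(STATE_MACHINE_ARN, config)
# execution_arn = sfn.start_execution(**request)["executionArn"]
# print(wait_for_execution(sfn, execution_arn))
```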

Get recommendations (real-time inference)

After your pipeline has completed its run successfully, you can obtain recommendations. In the example configuration, we chose to deploy campaigns as the inference option. As a result, you’ll have access to a campaign that can provide real-time recommendations.

We use the Amazon Personalize console to get recommendations. Choose Dataset groups and select your dataset group name. Choose Campaigns and select your campaign name. Enter a user ID and item IDs of your choice to test personalized ranking. You can get the user ID and item IDs from the input file in the Amazon S3 bucket that you configured.
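You can run the same test from code with the Amazon Personalize runtime GetPersonalizedRanking API. This API reranks a list of item IDs for a user against a campaign trained with a personalized-ranking recipe. The function below is a minimal sketch. The campaign ARN, user ID, and item IDs are placeholders, and the client is expected to be a boto3 personalize-runtime client. For a campaign trained with a user-personalization recipe, you would instead call get_recommendations with campaignArn and userId and read itemList from the response.

```python
from typing import Any


def rank_items(runtime_client: Any, campaign_arn: str, user_id: str, item_ids: list[str]) -> list[str]:
    """Return item_ids reordered for user_id by a personalized-ranking campaign.

    runtime_client is expected to be boto3.client("personalize-runtime").
    """
    response = runtime_client.get_personalized_ranking(
        campaignArn=campaign_arn,
        userId=user_id,
        inputList=item_ids,
    )
    # Entries come back in ranked order; keep only the item IDs.
    return [entry["itemId"] for entry in response["personalizedRanking"]]
```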

Get recommendations (batch inference)

If you have configured batch inference to run, start by verifying that the batch inference step has successfully completed in the Step Functions workflow. Then, use the Amazon S3 console to navigate to the destination S3 bucket for your batch inference job. If you don’t see an output file there, verify that you’ve provided the correct path for the input file in your input configuration.
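To check for output without browsing the console, you can list the destination prefix with the S3 ListObjectsV2 paginator. This sketch assumes that batch inference output files end in .out. That matches how Amazon Personalize typically names them (the input file name with .out appended), but verify the naming against your own job output. The bucket and prefix are placeholders for the output path in your input configuration.

```python
from typing import Any


def find_batch_outputs(s3_client: Any, bucket: str, prefix: str) -> list[str]:
    """Return keys under prefix that look like batch inference output files.

    s3_client is expected to be boto3.client("s3").
    """
    keys: list[str] = []
    paginator = s3_client.get_paginator("list_objects_v2")
    for page in paginator.paginate(Bucket=bucket, Prefix=prefix):
        # Pages with no matching objects have no "Contents" key.
        for obj in page.get("Contents", []):
            if obj["Key"].endswith(".out"):
                keys.append(obj["Key"])
    return keys
```

An empty result is the cue to recheck the input and output paths in your input configuration.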

Schedule the workflow for continued maintenance of the solution

Amazon Personalize offers automatic training for solutions through its console or SDK, which lets you set a retraining frequency such as every three days. This MLOps workflow provides more control than that. With EventBridge schedules, you can specify the exact dates and times for retraining executions. To implement this scheduling, configure an EventBridge schedule to trigger the Step Functions execution, giving you finer granularity in managing your machine learning model updates.

Navigate to the Amazon EventBridge console, choose Schedules, and then choose Create schedule.

You can establish a recurring schedule for executing your entire workflow. A key benefit of this solution is the enhanced control it offers over the specific date and time you want your workflow to run. This allows for precise timing of your processes, which you can use to align the workflow execution with your operational needs or optimal data processing windows.

Select AWS Step Functions (as shown below) as your target.

Insert the input configuration file that you prepared previously as the input and choose Next.

An additional step you can take is to set up a dead-letter queue with Amazon Simple Queue Service (Amazon SQS). The queue captures schedule invocations that fail to start a Step Functions execution.
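If you prefer to script the schedule, the EventBridge Scheduler CreateSchedule API takes the same pieces as the console: a schedule expression, the state machine as the target, the input configuration as the target input, and an optional dead-letter queue. The following sketch builds the request arguments. The schedule name, ARNs, and IAM role are placeholders; the role must allow EventBridge Scheduler to call states:StartExecution. Schedule expressions can take three forms:

- Cron-based, such as cron(0 3 ? * MON *) for 03:00 every Monday.
- Rate-based, such as rate(7 days).
- One-time, such as at(2025-06-01T03:00:00).

Expressions are evaluated in UTC unless you also pass ScheduleExpressionTimezone.

```python
import json
from typing import Any, Optional


def build_schedule_request(
    name: str,
    schedule_expression: str,
    state_machine_arn: str,
    role_arn: str,
    config: dict[str, Any],
    dead_letter_queue_arn: Optional[str] = None,
) -> dict[str, Any]:
    """Build keyword arguments for EventBridge Scheduler's CreateSchedule API."""
    target: dict[str, Any] = {
        "Arn": state_machine_arn,  # Scheduler calls StartExecution on this state machine
        "RoleArn": role_arn,  # role Scheduler assumes; needs states:StartExecution
        "Input": json.dumps(config),  # becomes the execution input
    }
    if dead_letter_queue_arn:
        # SQS queue that receives invocations Scheduler could not deliver.
        target["DeadLetterConfig"] = {"Arn": dead_letter_queue_arn}
    return {
        "Name": name,
        "ScheduleExpression": schedule_expression,
        "FlexibleTimeWindow": {"Mode": "OFF"},  # run at the exact scheduled time
        "Target": target,
    }


# Example usage (requires boto3 and AWS credentials; values are placeholders):
# request = build_schedule_request(
#     "personalize-weekly-retrain", "cron(0 3 ? * MON *)",
#     STATE_MACHINE_ARN, SCHEDULER_ROLE_ARN, config, DLQ_ARN)
# boto3.client("scheduler").create_schedule(**request)
```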

Monitoring and notification

To maintain the reliability, availability, and performance of Step Functions and your solution, set up monitoring and logging. You can set up an EventBridge rule to receive notifications about events that are of interest, such as batch inference being ready in the S3 bucket. Here is how you can set that up:

Navigate to the Amazon Simple Notification Service (Amazon SNS) console and create an SNS topic that will be the target for your event.

Amazon SNS supports subscription for different endpoint types such as HTTP/HTTPS, email, Lambda, SMS, and so on. For this example, use an email endpoint.

After you create the topic and the subscription, navigate to the EventBridge console and select Create Rule. Define the details associated with the event such as the name, description, and the event bus.

To set up the event rule, you’ll use the pattern form. You use this form to define the specific events that will trigger notifications. For the batch segment job completion step, you should configure the source and detail-type fields as follows:

Select the SNS topic as your target and proceed.

With this procedure, you have set up an EventBridge rule to receive notifications on your email when an object is created in your batch inference bucket. You can also set up logic based on your use case to trigger any downstream processes such as creation of email campaigns with the results of your inference by choosing different targets such as Lambda.
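The event pattern used in this walkthrough isn't reproduced here. The following sketch shows one possible way to script these steps under stated assumptions. It matches Amazon S3 Object Created events (source aws.s3, detail-type Object Created) for the batch inference output bucket, which requires Amazon EventBridge notifications to be turned on for that bucket. The topic name, rule name, and target ID are hypothetical, and the clients are boto3 sns and events clients. The SNS topic's access policy must also allow events.amazonaws.com to publish to it, which is not shown.

```python
import json
from typing import Any

RULE_NAME = "personalize-batch-output-created"  # hypothetical rule name


def object_created_pattern(bucket: str, prefix: str = "") -> dict[str, Any]:
    """Event pattern matching S3 Object Created events for a bucket and optional key prefix."""
    detail: dict[str, Any] = {"bucket": {"name": [bucket]}}
    if prefix:
        detail["object"] = {"key": [{"prefix": prefix}]}
    return {"source": ["aws.s3"], "detail-type": ["Object Created"], "detail": detail}


def wire_notifications(sns_client: Any, events_client: Any, email: str, bucket: str, prefix: str = "") -> str:
    """Create an SNS email subscription and an EventBridge rule that targets it.

    Returns the ARN of the rule, which is created on the default event bus.
    """
    topic_arn = sns_client.create_topic(Name="personalize-batch-output")["TopicArn"]
    # The recipient must confirm the subscription from the email they receive.
    sns_client.subscribe(TopicArn=topic_arn, Protocol="email", Endpoint=email)
    rule_arn = events_client.put_rule(
        Name=RULE_NAME,
        EventPattern=json.dumps(object_created_pattern(bucket, prefix)),
        State="ENABLED",
    )["RuleArn"]
    events_client.put_targets(Rule=RULE_NAME, Targets=[{"Id": "sns-email", "Arn": topic_arn}])
    return rule_arn
```

To trigger a downstream process instead, such as a Lambda function, you would pass that function's ARN as the target.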

Additionally, you can monitor Step Functions and Amazon Personalize through Amazon CloudWatch metrics. See Logging and Monitoring AWS Step Functions and Monitoring Amazon Personalize for more information.

Handling schema updates

Schema updates are available in Amazon Personalize for adding columns to the existing schema. Note that deleting columns from existing schemas isn’t currently supported. To update the schema, make sure that you’re modifying the schema in the input configuration passed to Step Functions. See Replacing a dataset’s schema to add new columns for more information.
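Columns can be added but not removed, so comparing the old and new Avro schemas can catch a problem before the workflow runs. The following sketch flags removed columns and, as a conservative assumption, changed column types. replace_schema shows the manual equivalent using the Amazon Personalize CreateSchema and UpdateDataset APIs through a boto3 personalize client. The dataset ARN and schema name are placeholders. In this solution, you would normally make the change in the input configuration instead.

```python
import json
from typing import Any


def check_schema_update(old: dict[str, Any], new: dict[str, Any]) -> list[str]:
    """Return problems that would block replacing old with new (empty if none)."""
    old_fields = {f["name"]: f["type"] for f in old["fields"]}
    new_fields = {f["name"]: f["type"] for f in new["fields"]}
    problems = [f"removed column: {name}" for name in old_fields if name not in new_fields]
    # Type changes are flagged conservatively; they are not simple column additions.
    problems += [
        f"changed type: {name}"
        for name, ftype in old_fields.items()
        if name in new_fields and new_fields[name] != ftype
    ]
    return problems


def replace_schema(
    personalize_client: Any, dataset_arn: str, schema_name: str, new_schema: dict[str, Any]
) -> str:
    """Register new_schema and attach it to the dataset; returns the new schema ARN.

    personalize_client is expected to be boto3.client("personalize").
    """
    schema_arn = personalize_client.create_schema(
        name=schema_name, schema=json.dumps(new_schema)
    )["schemaArn"]
    personalize_client.update_dataset(datasetArn=dataset_arn, schemaArn=schema_arn)
    return schema_arn
```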

Clean up

To avoid incurring additional costs, delete the resources you created during this solution walkthrough. You can clean up the solution by deleting the CloudFormation stack you deployed as part of the setup.

Using the console

Sign in to the AWS CloudFormation console.

On the Stacks page, select this solution’s installation stack.

Choose Delete.

Using the AWS CLI
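The CLI command isn't reproduced in this post. As a programmatic alternative, the following sketch deletes the stack through the boto3 CloudFormation client and waits with the stack_delete_complete waiter. It assumes the stack is named PersonalizePipelineSolution, as in the walkthrough. Resources that the state machine created at run time, such as dataset groups, solutions, and campaigns, aren't managed by the CloudFormation stack. They likely need to be deleted separately in the Amazon Personalize console, as do the S3 bucket and IAM role you created manually.

```python
from typing import Any


def delete_pipeline_stack(cfn_client: Any, stack_name: str = "PersonalizePipelineSolution") -> None:
    """Delete the CloudFormation stack and block until deletion completes.

    cfn_client is expected to be boto3.client("cloudformation").
    """
    cfn_client.delete_stack(StackName=stack_name)
    waiter = cfn_client.get_waiter("stack_delete_complete")
    # Poll every 30 seconds, for up to 60 attempts (about 30 minutes).
    waiter.wait(StackName=stack_name, WaiterConfig={"Delay": 30, "MaxAttempts": 60})
```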

Conclusion

This MLOps solution for Amazon Personalize offers a powerful, automated approach to creating and maintaining personalized user experiences at scale. By using AWS services like AWS CDK, Step Functions, and EventBridge, the solution streamlines the entire process from data preparation through model deployment and monitoring. The flexibility of this solution means that you can customize it to fit various use cases, and integration with EventBridge keeps models up to date. Delivering exceptional personalized experiences is critical for business growth, and this solution provides an efficient way to harness the power of Amazon Personalize to improve user engagement, customer loyalty, and business results. We encourage you to explore and adapt this solution to enhance your personalization efforts and stay ahead in the competitive digital landscape.

To learn more about the capabilities discussed in this post, check out Amazon Personalize features and the Amazon Personalize Developer Guide.

Additional resources:

About the Authors

Reagan Rosario brings over a decade of technical expertise to his role as a Sr. Specialist Solutions Architect in Generative AI at AWS. Reagan transforms enterprise systems through strategic implementation of AI-powered cloud solutions, automated workflows, and innovative architecture design. His specialty lies in guiding organizations through digital evolution—preserving core business value while implementing cutting-edge generative AI capabilities that dramatically enhance operations and create new possibilities.


Nensi Hakobjanyan is a Solutions Architect at Amazon Web Services, where she supports enterprise Retail and CPG customers in designing and implementing cloud solutions. In addition to her deep expertise in cloud architecture, Nensi brings extensive experience in Machine Learning and Artificial Intelligence, helping organizations unlock the full potential of data-driven innovation. She is passionate about helping customers through digital transformation and building scalable, future-ready solutions in the cloud.
