Multi-channel streaming transcription is an Amazon Transcribe feature that you can use from a web browser in many scenarios. Creating the stream source has its challenges, but with the JavaScript Web Audio API you can connect and combine different audio sources, such as videos, audio files, or hardware like microphones, to obtain transcripts.

In this post, we guide you through how to use two microphones as audio sources, merge them into a single dual-channel audio stream, perform the required encoding, and stream it to Amazon Transcribe. The source code of a sample Vue.js application is provided; it requires two microphones connected to your browser. However, this approach extends far beyond this use case: you can adapt it to accommodate a wide range of devices and audio sources.

With this approach, you can get transcripts for two sources in a single Amazon Transcribe session, offering cost savings and other benefits compared to using a separate session for each source.

Challenges when using two microphones

For our use case, using a single-channel stream for two microphones and enabling Amazon Transcribe speaker label identification to identify the speakers might be enough, but there are a few considerations:

Speaker labels are randomly assigned at session start, meaning you will have to map the results in your application after the stream has started

Speakers with similar voices can be mislabeled, because they are hard to tell apart even for a human listener

Voice overlapping can occur when two speakers talk at the same time with one audio source

By using two audio sources with microphones, you can address these concerns by making sure each transcription is from a fixed input source. By assigning a device to a speaker, our application knows in advance which transcript to use. However, you might still encounter voice overlapping if two nearby microphones are picking up multiple voices. This can be mitigated by using directional microphones, volume management, and Amazon Transcribe word-level confidence scores.
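As a rough illustration of the last point, the sketch below drops low-confidence words from a list of transcript items. The `Content`, `Confidence`, and `Type` fields follow the item shape returned by Amazon Transcribe streaming; the `filterByConfidence` helper and the 0.5 threshold are assumptions made for this example and are not part of the demo application.

```typescript
// Minimal subset of the Amazon Transcribe streaming item shape.
interface TranscriptItem {
  Content?: string
  Confidence?: number
  Type?: string // 'pronunciation' or 'punctuation'
}

// Hypothetical helper: keep punctuation, and keep words whose confidence is
// at or above the threshold. Items without a confidence score are dropped.
const filterByConfidence = (items: TranscriptItem[], minConfidence = 0.5): string => {
  let text = ''
  for (const item of items) {
    const content = item.Content ?? ''
    if (item.Type === 'punctuation') {
      text += content // attach punctuation to the previous word
      continue
    }
    if ((item.Confidence ?? 0) < minConfidence) continue
    text += text.length > 0 ? ` ${content}` : content
  }
  return text
}
```

A word picked up faintly by the wrong microphone tends to score lower, so a filter like this can reduce duplicated text across channels. The right threshold depends on your microphones and room and would need tuning.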

Solution overview

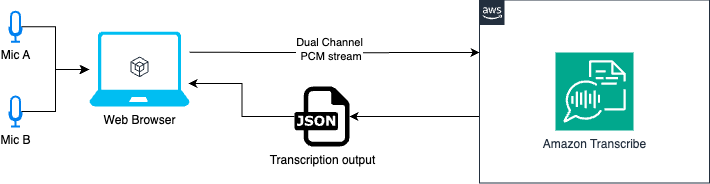

The following diagram illustrates the solution workflow.

Application diagram for two microphones

We use two audio inputs with the Web Audio API. With this API, we can merge the two inputs, Mic A and Mic B, into a single audio data source, with the left channel representing Mic A and the right channel representing Mic B.

Then, we convert this audio source to PCM (Pulse-Code Modulation) audio. PCM is a common format for audio processing, and it’s one of the formats required by Amazon Transcribe for the audio input. Finally, we stream the PCM audio to Amazon Transcribe for transcription.

Prerequisites

You should have the following prerequisites in place:

{

"Version": "2012-10-17",

"Statement": [

{

"Sid": "DemoWebAudioAmazonTranscribe",

"Effect": "Allow",

"Action": "transcribe:StartStreamTranscriptionWebSocket",

"Resource": "*"

}

]

}

Start the application

Complete the following steps to launch the application:

Go to the root directory where you downloaded the code.

Create a .env file from the env.sample file and set your AWS access keys in it.

Install the packages by running bun install (if you’re using Node.js, run npm install).

Start the web server by running bun dev (if you’re using Node.js, run npm run dev).

Open your browser at http://localhost:5173/.

Application running on http://localhost:5173 with two connected microphones

Code walkthrough

In this section, we examine the important code pieces for the implementation:

The first step is to list the connected microphones by using the browser API navigator.mediaDevices.enumerateDevices():

const devices = await navigator.mediaDevices.enumerateDevices()

return devices.filter((d) => d.kind === 'audioinput')

Next, you need to obtain the MediaStream object for each of the connected microphones. You can do this with the navigator.mediaDevices.getUserMedia() API, which enables access to the user’s media devices (such as cameras and microphones). It returns a MediaStream object that represents the audio or video data from those devices:

const streams = []

const stream = await navigator.mediaDevices.getUserMedia({

audio: {

deviceId: device.deviceId,

echoCancellation: true,

noiseSuppression: true,

autoGainControl: true,

},

})

if (stream) streams.push(stream)

To combine the audio from the multiple microphones, you need to create an AudioContext instance for audio processing. Within this AudioContext, you can use a ChannelMergerNode to merge the audio streams from the different microphones. The connect(destination, src_idx, ch_idx) method arguments are:

destination – The destination, in our case mergerNode.

src_idx – The output index of the source node, in our case 0 for both microphones (each microphone source node has a single output).

ch_idx – The input index of the destination, in our case 0 and 1 respectively. Each input of the ChannelMergerNode becomes one channel of its output, which creates the stereo output.

// instance of audioContext

const audioContext = new AudioContext({

sampleRate: SAMPLE_RATE,

})

// this is used to process the microphone stream data

const audioWorkletNode = new AudioWorkletNode(audioContext, 'recording-processor', {…})

// microphone A

const audioSourceA = audioContext.createMediaStreamSource(mediaStreams[0]);

// microphone B

const audioSourceB = audioContext.createMediaStreamSource(mediaStreams[1]);

// audio node for two inputs

const mergerNode = audioContext.createChannelMerger(2);

// connect the audio sources to the mergerNode destination.

audioSourceA.connect(mergerNode, 0, 0);

audioSourceB.connect(mergerNode, 0, 1);

// connect our mergerNode to the AudioWorkletNode

mergerNode.connect(audioWorkletNode);

The microphone data is processed in an AudioWorklet that emits a data message each time a defined number of frames has been recorded. These messages contain the audio data encoded in PCM format, ready to send to Amazon Transcribe. Using the p-event library, you can asynchronously iterate over the events from the Worklet. A more in-depth description of this Worklet is provided in the next section of this post.

import { pEventIterator } from 'p-event'

…

// Register the worklet

try {

await audioContext.audioWorklet.addModule('./worklets/recording-processor.js')

} catch (e) {

console.error('Failed to load audio worklet', e)

}

// An async iterator

const audioDataIterator = pEventIterator<'message', MessageEvent>(

audioWorkletNode.port,

'message',

)

…

// AsyncIterableIterator: Every time the worklet emits an event with the message `SHARE_RECORDING_BUFFER`, this iterator will return the AudioEvent object that we need.

const getAudioStream = async function* (

audioDataIterator: AsyncIterableIterator<MessageEvent>,

) {

for await (const chunk of audioDataIterator) {

if (chunk.data.message === 'SHARE_RECORDING_BUFFER') {

const { audioData } = chunk.data

yield {

AudioEvent: {

AudioChunk: audioData,

},

}

}

}

}

To start streaming the data to Amazon Transcribe, pass the async iterator created in the previous step as the AudioStream, and set NumberOfChannels: 2 and EnableChannelIdentification: true to enable dual-channel transcription. For more information, refer to the AWS SDK StartStreamTranscriptionCommand documentation.

import {

LanguageCode,

MediaEncoding,

StartStreamTranscriptionCommand,

} from '@aws-sdk/client-transcribe-streaming'

const command = new StartStreamTranscriptionCommand({

LanguageCode: LanguageCode.EN_US,

MediaEncoding: MediaEncoding.PCM,

MediaSampleRateHertz: SAMPLE_RATE,

NumberOfChannels: 2,

EnableChannelIdentification: true,

ShowSpeakerLabel: true,

AudioStream: getAudioStream(audioDataIterator),

})

After you send the request, a WebSocket connection is created to exchange audio stream data and Amazon Transcribe results:

const data = await client.send(command)

for await (const event of data.TranscriptResultStream) {

for (const result of event.TranscriptEvent?.Transcript?.Results || []) {

callback({ ...result })

}

}

The result object will include a ChannelId property that you can use to identify your microphone source, such as ch_0 and ch_1, respectively.
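Because each channel is tied to a fixed microphone, mapping results to speakers can be a simple lookup. The following is a minimal sketch: the `ChannelId`, `IsPartial`, and `Alternatives` fields follow the Amazon Transcribe streaming result shape, while `SPEAKER_BY_CHANNEL` and `toLabeledLine` are hypothetical names chosen for this example.

```typescript
// Minimal subset of the Amazon Transcribe streaming result shape.
interface TranscriptResult {
  ChannelId?: string
  IsPartial?: boolean
  Alternatives?: { Transcript?: string }[]
}

// Hypothetical mapping from channel to the microphone assigned to it.
const SPEAKER_BY_CHANNEL: Record<string, string> = {
  ch_0: 'Mic A',
  ch_1: 'Mic B',
}

// Turn a final result into a labeled line. Partial or empty results return null.
const toLabeledLine = (result: TranscriptResult): string | null => {
  if (result.IsPartial) return null
  const text = result.Alternatives?.[0]?.Transcript ?? ''
  if (text.length === 0) return null
  const speaker = SPEAKER_BY_CHANNEL[result.ChannelId ?? ''] ?? 'Unknown'
  return `${speaker}: ${text}`
}
```

A helper like this could be called from the callback that receives each result, appending only the non-null lines to the transcript view.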

Deep dive: Audio Worklet

Audio Worklets can execute in a separate thread to provide very low-latency audio processing. The implementation and demo source code can be found in the public/worklets/recording-processor.js file.

For our case, we use the Worklet to perform two main tasks:

Process the mergerNode audio in an iterable way. This node includes both of our audio channels and is the input to our Worklet.

Encode the data bytes of the mergerNode output into PCM signed 16-bit little-endian audio format. We do this for each iteration or when required to emit a message payload to our application.

The general code structure to implement this is as follows:

class RecordingProcessor extends AudioWorkletProcessor {

constructor(options) {

super()

}

process(inputs, outputs) {…}

}

registerProcessor('recording-processor', RecordingProcessor)
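The body of process() is elided above. As one possible way to handle the buffering step, the sketch below collects the per-channel blocks that process() receives and returns the filled buffers once another block would no longer fit. `FrameAccumulator` is a hypothetical helper written for this illustration; the demo's actual implementation in recording-processor.js may differ.

```typescript
// Hypothetical helper: buffers per-channel render blocks and returns the
// filled buffers once another block of the same size would no longer fit.
class FrameAccumulator {
  private readonly numChannels: number
  private readonly maxFrameCount: number
  private buffers: Float32Array[]
  private recordedFrames = 0

  constructor(numChannels: number, maxFrameCount: number) {
    this.numChannels = numChannels
    this.maxFrameCount = maxFrameCount
    this.buffers = this.allocate()
  }

  private allocate(): Float32Array[] {
    return Array.from({ length: this.numChannels }, () => new Float32Array(this.maxFrameCount))
  }

  // `channels` mirrors inputs[0] in process(): one Float32Array per channel.
  // Assumes each block is no longer than maxFrameCount.
  push(channels: Float32Array[]): Float32Array[] | null {
    const blockLength = channels.length > 0 ? channels[0].length : 0
    for (let c = 0; c < this.numChannels; c++) {
      // A channel with no data for this block is left as silence (zeros).
      if (channels[c]) this.buffers[c].set(channels[c], this.recordedFrames)
    }
    this.recordedFrames += blockLength
    if (blockLength === 0 || this.recordedFrames + blockLength <= this.maxFrameCount) return null
    // Buffer is full: hand back a trimmed copy and start a fresh one.
    const filled = this.buffers.map((b) => b.slice(0, this.recordedFrames))
    this.buffers = this.allocate()
    this.recordedFrames = 0
    return filled
  }
}
```

Inside process(), a call such as push(inputs[0]) would return null for most blocks. When it returns the filled buffers, that is the moment to encode them and post a message to the application.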

You can pass custom options to this Worklet instance using the processorOptions attribute. In our demo, we set maxFrameCount: (SAMPLE_RATE * 4) / 10, which corresponds to 0.4 seconds of audio, as the threshold that determines when to emit a new message payload. For example, a message looks like this:

this.port.postMessage({

message: 'SHARE_RECORDING_BUFFER',

buffer: this._recordingBuffer,

recordingLength: this.recordedFrames,

audioData: new Uint8Array(pcmEncodeArray(this._recordingBuffer)), // PCM encoded audio format

})
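To make the maxFrameCount setting concrete, the following sketch works through the numbers. It assumes a SAMPLE_RATE of 16,000 Hz (the demo's actual constant is not shown in this post) and the 128-frame block size that the Web Audio API currently uses for each process() call.

```typescript
const SAMPLE_RATE = 16000 // assumed value, in Hz
const NUM_CHANNELS = 2 // Mic A and Mic B
const BYTES_PER_SAMPLE = 2 // 16-bit PCM
const RENDER_BLOCK_FRAMES = 128 // frames delivered to each process() call

// Frames buffered before a message is emitted.
const maxFrameCount = (SAMPLE_RATE * 4) / 10 // 6400 frames

// Audio duration covered by one message, in seconds.
const secondsPerMessage = maxFrameCount / SAMPLE_RATE // 0.4

// Size of the PCM payload in one message, in bytes.
const bytesPerMessage = maxFrameCount * NUM_CHANNELS * BYTES_PER_SAMPLE // 25600

// Number of process() calls needed to fill one message.
const blocksPerMessage = maxFrameCount / RENDER_BLOCK_FRAMES // 50
```

Under these assumptions, the application receives a message of about 25 KB every 0.4 seconds.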

PCM encoding for two channels

One of the most important parts is how to encode to PCM for two channels. Following the AWS documentation in the Amazon Transcribe API Reference, the AudioChunk size in bytes is given by: Duration (s) * Sample Rate (Hz) * Number of Channels * 2 (bytes per sample). For two channels, 1 second at 16,000 Hz is: 1 * 16000 * 2 * 2 = 64000 bytes. Our encoding function should then look like this:

// Notice that input is an array, where each element is a channel with Float32 values between -1.0 and 1.0 from the AudioWorkletProcessor.

const pcmEncodeArray = (input: Float32Array[]) => {

const numChannels = input.length

const numSamples = input[0].length

const bufferLength = numChannels * numSamples * 2 // 2 bytes per sample per channel

const buffer = new ArrayBuffer(bufferLength)

const view = new DataView(buffer)

let index = 0

for (let i = 0; i < numSamples; i++) {

// Encode for each channel

for (let channel = 0; channel < numChannels; channel++) {

const s = Math.max(-1, Math.min(1, input[channel][i]))

// Convert the 32 bit float to 16 bit PCM audio waveform samples.

// Max value: 32767 (0x7FFF), Min value: -32768 (-0x8000)

view.setInt16(index, s < 0 ? s * 0x8000 : s * 0x7fff, true)

index += 2

}

}

return buffer

}
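One way to check the interleaving is to decode a payload back into separate channels. The sketch below is a hypothetical debugging helper, not part of the demo, and assumes the same layout as the encoder above: signed 16-bit little-endian samples, with the channels alternating sample by sample.

```typescript
// Hypothetical helper: split interleaved 16-bit little-endian PCM bytes
// into one Int16Array per channel.
const pcmDecodeInterleaved = (bytes: Uint8Array, numChannels: number): Int16Array[] => {
  const view = new DataView(bytes.buffer, bytes.byteOffset, bytes.byteLength)
  // Each sample frame holds one 2-byte sample per channel.
  const numSamples = Math.floor(bytes.byteLength / (numChannels * 2))
  const channels = Array.from({ length: numChannels }, () => new Int16Array(numSamples))
  let index = 0
  for (let i = 0; i < numSamples; i++) {
    for (let channel = 0; channel < numChannels; channel++) {
      channels[channel][i] = view.getInt16(index, true) // true = little-endian
      index += 2
    }
  }
  return channels
}
```

Decoding the audioData from a message with numChannels set to 2 should return Mic A in the first array and Mic B in the second, each with the same number of samples.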

For more information on how the audio data blocks are handled, see AudioWorkletProcessor: process() method. For more information on PCM format encoding, see Multimedia Programming Interface and Data Specifications 1.0.

Conclusion

In this post, we explored the implementation details of a web application that uses the browser’s Web Audio API and Amazon Transcribe streaming to enable real-time dual-channel transcription. By using the combination of AudioContext, ChannelMergerNode, and AudioWorklet, we were able to seamlessly process and encode the audio data from two microphones before sending it to Amazon Transcribe for transcription. The use of the AudioWorklet in particular allowed us to achieve low-latency audio processing, providing a smooth and responsive user experience.

You can build upon this demo to create more advanced real-time transcription applications that cater to a wide range of use cases, from meeting recordings to voice-controlled interfaces.

Try out the solution for yourself, and leave your feedback in the comments.

About the Author

Jorge Lanzarotti is a Sr. Prototyping SA at Amazon Web Services (AWS) based in Tokyo, Japan. He helps customers in the public sector by creating innovative solutions to challenging problems.