In October 2022, Microsoft Research Asia hosted a workshop that brought together experts in computer science, psychology, sociology, and law as part of Microsoft’s commitment to responsible AI. The event led to ongoing collaborations exploring AI’s societal implications, including the Value Compass project.

As these efforts grew, researchers focused on how AI systems could be designed to meet the needs of people and institutions in areas like healthcare, education, and public services. This work culminated in Societal AI: Research Challenges and Opportunities, a white paper that explores how AI can better align with societal needs.

What is Societal AI?

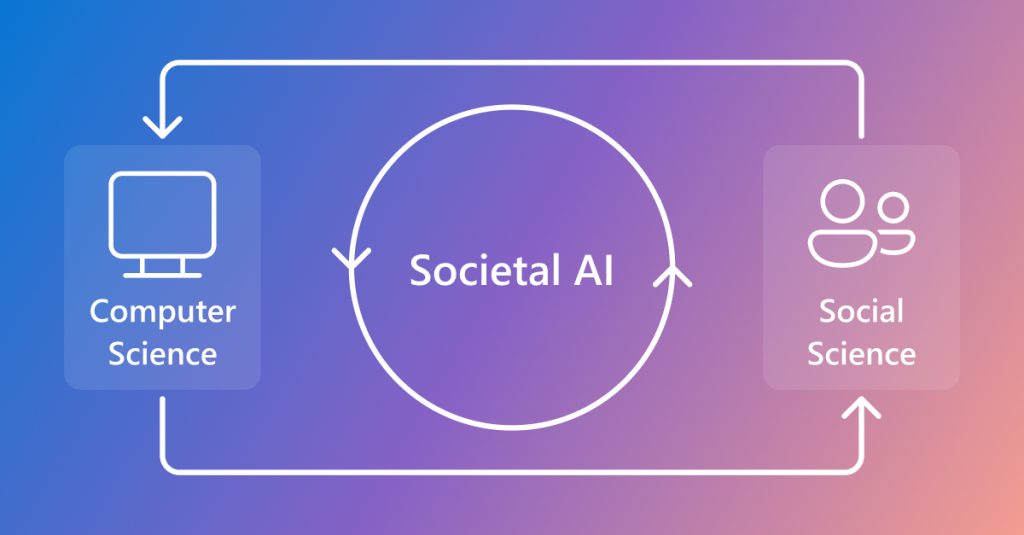

Societal AI is an emerging interdisciplinary area of study that examines how AI intersects with social systems and public life. It focuses on two main areas: (1) the impact of AI technologies on fields like education, labor, and governance; and (2) the challenges posed by these systems, such as evaluation, accountability, and alignment with human values. The goal is to guide AI development in ways that respond to real-world needs.

The white paper offers a framework for understanding these dynamics and provides recommendations for integrating AI responsibly into society. This post highlights the paper’s key insights and what they mean for future research.

Tracing the development of Societal AI

Societal AI began nearly a decade ago at Microsoft Research Asia, where early work on personalized recommendation systems uncovered risks like echo chambers, where users are repeatedly exposed to similar viewpoints, and polarization, which can deepen divisions between groups. Those findings led to deeper investigations into privacy, fairness, and transparency, helping inform Microsoft’s broader approach to responsible AI.

The rapid rise of large-scale AI models in recent years has made these concerns more urgent. Today, researchers across disciplines are working to define shared priorities and guide AI development in ways that reflect social needs and values.

Key insights

The white paper outlines several important considerations for the field:

Interdisciplinary framework: Bridges technical AI research with the social sciences, humanities, policy studies, and ethics to address AI’s far-reaching societal effects.

Actionable research agenda: Identifies ten research questions that offer a roadmap for researchers, policymakers, and industry leaders.

Global perspective: Highlights the importance of different cultural perspectives and international cooperation in shaping the dialogue on responsible AI development.

Practical insights: Balances theory with real-world applications, drawing from collaborative research projects.

“AI’s impact extends beyond algorithms and computation—it challenges us to rethink fundamental concepts like trust, creativity, agency, and value systems,” says Lidong Zhou, managing director of Microsoft Research Asia. “It recognizes that developing more powerful AI models is not enough; we must examine how AI interacts with human values, institutions, and diverse cultural contexts.”

Guiding principles for responsible integration

The research agenda is grounded in three key principles:

Harmony: AI should minimize conflict and build trust to support acceptance.

Synergy: AI should complement human capabilities, enabling outcomes that neither humans nor machines could achieve alone.

Resilience: AI should be robust and adaptable as social and technological conditions evolve.

Ten critical questions

These questions span both technical and societal concerns:

How can AI be aligned with diverse human values and ethical principles?

How can AI systems be designed to ensure fairness and inclusivity across different cultures, regions, and demographic groups?

How can we ensure AI systems are safe, reliable, and controllable, especially as they become more autonomous?

How can human-AI collaboration be optimized to enhance human abilities?

How can we effectively evaluate AI’s capabilities and performance in new, unforeseen tasks and environments?

How can we enhance AI interpretability to ensure transparency in its decision-making processes?

How will AI reshape human cognition, learning, and creativity, and what new capabilities might it unlock?

How will AI redefine the nature of work, collaboration, and the future of global business models?

How will AI transform research methodologies in the social sciences, and what new insights might it enable?

How should regulatory frameworks evolve to govern AI development responsibly and foster global cooperation?

This list will evolve alongside AI’s developing societal impact, ensuring the agenda remains relevant over time. Building on these questions, the white paper underscores the importance of sustained, cross-disciplinary collaboration to guide AI development in ways that reflect societal priorities and public interest.

“This thoughtful and comprehensive white paper from Microsoft Research Asia represents an important early step forward in anticipating and addressing the societal implications of AI, particularly large language models (LLMs), as they enter the world in greater numbers and for a widening range of purposes,” says research collaborator James A. Evans, professor of sociology at the University of Chicago.

Looking ahead

Microsoft is committed to fostering collaboration and invites others to take part in developing governance systems. As new challenges arise, the responsible use of AI for the public good will remain central to our research.

We hope the white paper serves as both a guide and a call to action, emphasizing the need for engagement across research, policy, industry, and the public.

For more information, and to access the full white paper, visit the Microsoft Research Societal AI page. Listen to the author discuss the research in more detail in this podcast.

Acknowledgments

We are grateful for the contributions of the researchers, collaborators, and reviewers who helped shape this white paper.