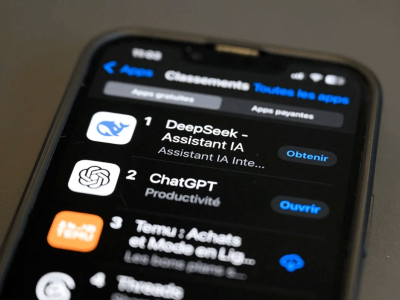

DeepSeek says its R1 model did not learn by copying examples generated by other LLMs. Credit: David Talukdar/ZUMA via Alamy

The success of DeepSeek’s powerful artificial intelligence (AI) model R1, which sent the US stock market plummeting when it was released in January, did not hinge on being trained on the output of its rivals, researchers at the Chinese firm have said. The statement came in documents released alongside a peer-reviewed version of the R1 model, published today in Nature¹.

R1 is designed to excel at ‘reasoning’ tasks such as mathematics and coding, and is a cheaper rival to tools developed by US technology firms. As an ‘open weight’ model, it is available for anyone to download and is the most popular such model on the AI community platform Hugging Face to date, having been downloaded 10.9 million times.

The paper updates a preprint released in January, which describes how DeepSeek augmented a standard large language model (LLM) to tackle reasoning tasks. Its supplementary material reveals for the first time how much R1 cost to train: the equivalent of just US$294,000. This comes on top of the $6 million or so that the company, based in Hangzhou, spent to make the base LLM that R1 is built on, but the total is still substantially less than the tens of millions of dollars that rival models are thought to have cost. DeepSeek says R1 was trained mainly on Nvidia’s H800 chips, which US export controls barred from sale to China in 2023.
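As a rough check on the combined figure, adding the two reported costs gives the sum below. The base-model figure is itself approximate ("$6 million or so"), so the total should be read as an estimate, not an exact accounting.

$$
\$294{,}000 + {\sim}\$6{,}000{,}000 \approx \$6.3\ \text{million}
$$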

Rigorous review

R1 is thought to be the first major LLM to undergo the peer-review process. “This is a very welcome precedent,” says Lewis Tunstall, a machine-learning engineer at Hugging Face who reviewed the Nature paper. “If we don’t have this norm of sharing a large part of this process publicly, it becomes very hard to evaluate whether these systems pose risks or not.”

In response to peer-review comments, the DeepSeek team reduced anthropomorphizing in its descriptions and clarified technical details, including the kinds of data the model was trained on and the model’s safety. “Going through a rigorous peer-review process certainly helps verify the validity and usefulness of the model,” says Huan Sun, an AI researcher at Ohio State University in Columbus. “Other firms should do the same.”

DeepSeek’s major innovation was to use an automated trial-and-error approach known as pure reinforcement learning to create R1. The process rewarded the model for reaching correct answers, rather than teaching it to follow human-selected reasoning examples. The company says that this is how its model learnt its own reasoning-like strategies, such as how to verify its workings without following human-prescribed tactics. To boost efficiency, the model also scored each of its attempts against estimates drawn from a group of its own attempts, rather than relying on a separate model to do so, a technique known as group relative policy optimization (GRPO).
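The group-relative scoring idea can be sketched in a few lines of Python. This is a minimal illustration, not DeepSeek’s code: it assumes a simple exact-match reward for correct answers and shows only the advantage step of GRPO, in which each sampled answer’s reward is compared with the mean and standard deviation of the rewards in its group. The function names, the `eps` parameter and the sample answers are hypothetical, and the full method’s policy-update objective is omitted.

```python
import statistics


def correctness_reward(answer: str, reference: str) -> float:
    """Hypothetical rule-based reward: 1.0 if the answer matches the reference, else 0.0."""
    return 1.0 if answer.strip() == reference.strip() else 0.0


def group_relative_advantages(rewards: list[float], eps: float = 1e-8) -> list[float]:
    """Score each attempt relative to its group: (reward - group mean) / group std.

    The group's own average serves as the baseline, so no separate
    value ("critic") model is needed. eps avoids division by zero
    when every attempt in the group earns the same reward.
    """
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)
    return [(r - mean) / (std + eps) for r in rewards]


# Hypothetical example: four sampled answers to "What is 17 * 3?"
samples = ["51", "41", "51", "54"]
rewards = [correctness_reward(s, "51") for s in samples]
advantages = group_relative_advantages(rewards)

print(rewards)                             # [1.0, 0.0, 1.0, 0.0]
print([round(a, 3) for a in advantages])   # [1.0, -1.0, 1.0, -1.0]
```

Correct answers end up with positive scores and wrong ones with negative scores, purely by comparison within the group; if every attempt is equally right or wrong, all scores are zero and that group provides no learning signal.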

The model has been “quite influential” among AI researchers, says Sun. “Almost all work in 2025 so far that conducts reinforcement learning in LLMs might have been inspired by R1 one way or another.”