AUSTIN, Texas — Using generative artificial intelligence, a team of researchers at The University of Texas at Austin has converted sounds from audio recordings into street-view images. The visual accuracy of these generated images demonstrates that machines can replicate the human connection between audio and visual perception of environments.

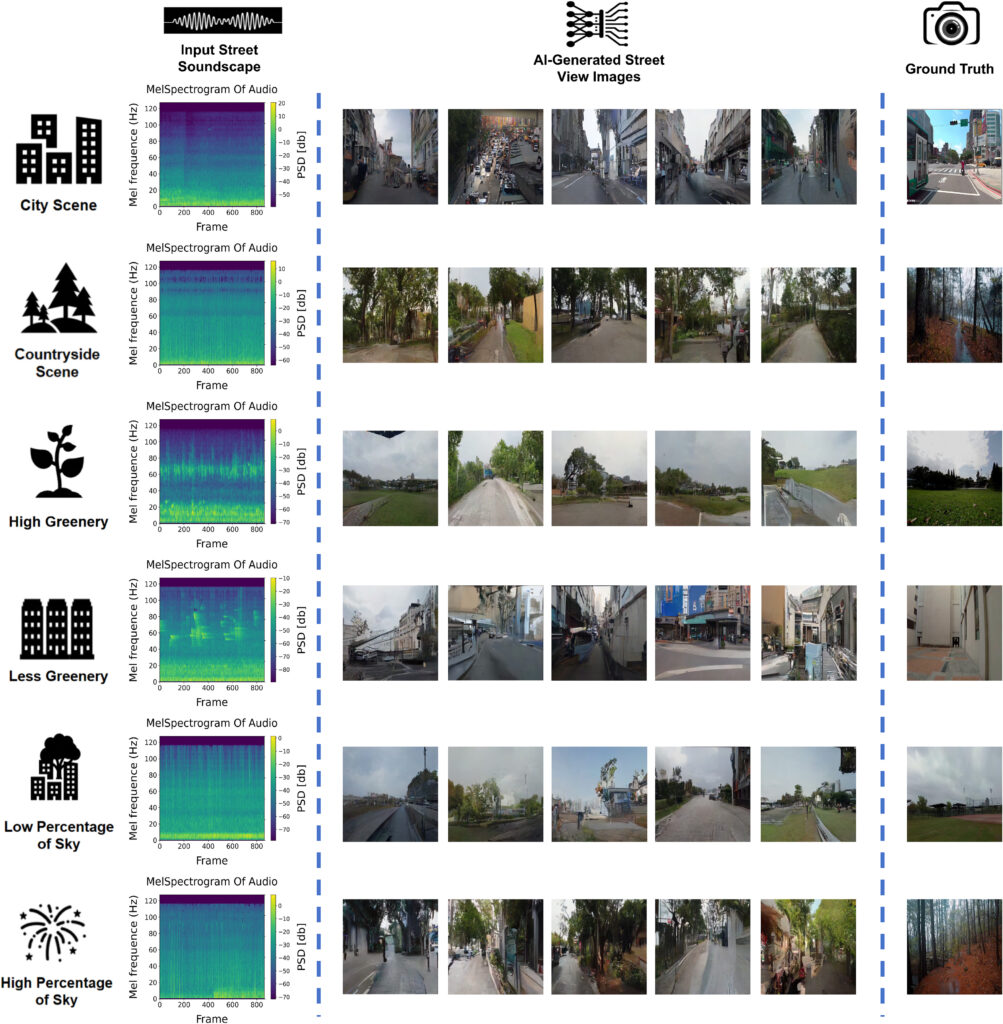

In a paper published in Computers, Environment and Urban Systems, the research team describes training a soundscape-to-image AI model using audio and visual data gathered from a variety of urban and rural streetscapes and then using that model to generate images from audio recordings.

“Our study found that acoustic environments contain enough visual cues to generate highly recognizable streetscape images that accurately depict different places,” said Yuhao Kang, assistant professor of geography and the environment at UT and co-author of the study. “This means we can convert the acoustic environments into vivid visual representations, effectively translating sounds into sights.”

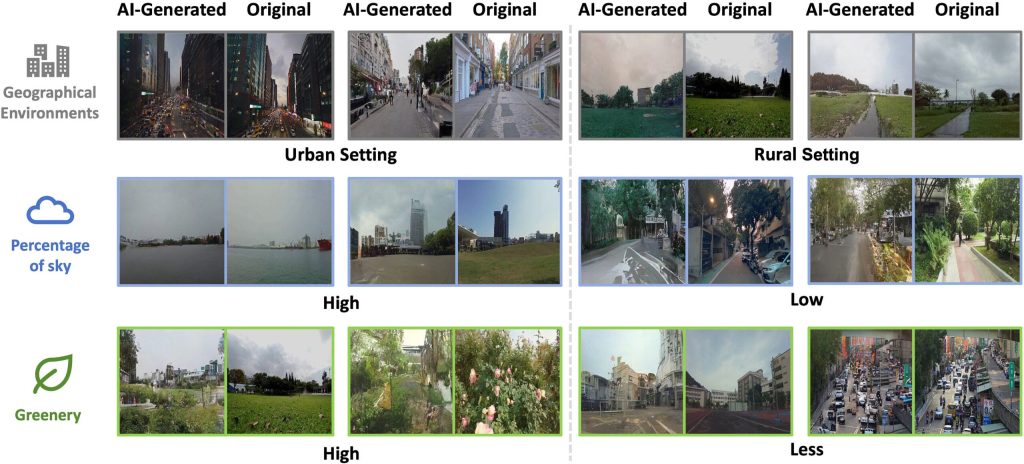

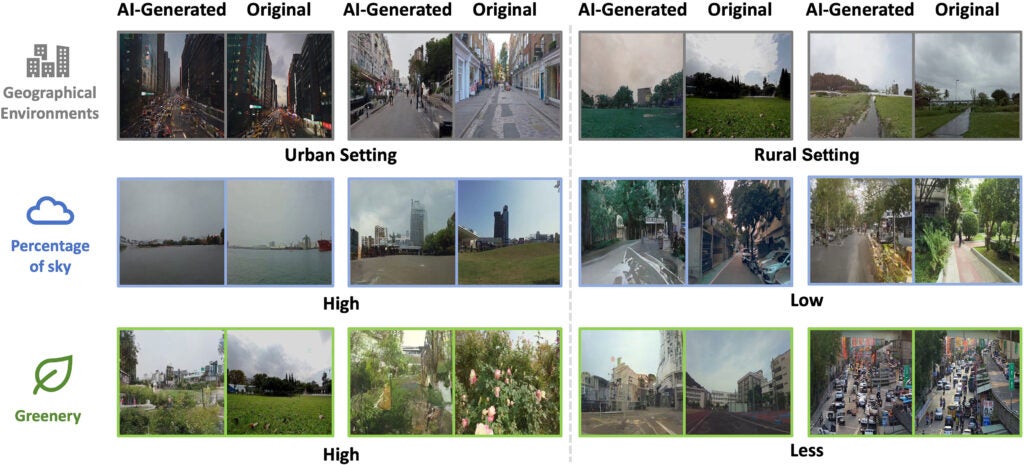

Using YouTube video and audio from cities in North America, Asia and Europe, the team created pairs of 10-second audio clips and image stills from the various locations and used them to train an AI model that could produce high-resolution images from audio input. They then compared AI sound-to-image creations made from 100 audio clips to their respective real-world photos, using both human and computer evaluations. Computer evaluations compared the relative proportions of greenery, building and sky between source and generated images, whereas human judges were asked to correctly match one of three generated images to an audio sample.

The results showed strong correlations in the proportions of sky and greenery between generated and real-world images and a slightly weaker correlation in building proportions. Human participants averaged 80% accuracy in selecting the generated image that corresponded to each source audio sample, well above the roughly one-in-three rate expected from guessing among three options.
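For readers who want a concrete picture of the computer evaluation, the following is a minimal sketch of how proportions of sky, greenery and building could be compared between real and generated images. It rests on several assumptions that are not from the study: each image has already been reduced to a grid of per-pixel labels by some segmentation step, the label names in `CLASSES` are hypothetical, and Pearson correlation stands in for whatever statistic the authors actually used.

```python
from collections import Counter
from math import sqrt

# Hypothetical label names; a real pipeline would take these from a
# segmentation model, which this sketch does not include.
CLASSES = ("sky", "greenery", "building")


def class_proportions(mask):
    """Return the fraction of pixels in `mask` that carry each label in CLASSES.

    `mask` is a 2D list of per-pixel label strings.
    """
    counts = Counter(label for row in mask for label in row)
    total = sum(counts.values())
    return {c: counts[c] / total for c in CLASSES}


def pearson(xs, ys):
    """Pearson correlation of two equal-length sequences.

    Assumes both sequences have non-zero variance.
    """
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)


def proportion_correlations(real_masks, generated_masks):
    """Correlate per-class proportions across paired real and generated images."""
    real = [class_proportions(m) for m in real_masks]
    generated = [class_proportions(m) for m in generated_masks]
    return {
        c: pearson([r[c] for r in real], [g[c] for g in generated])
        for c in CLASSES
    }
```

Calling `proportion_correlations(real_masks, generated_masks)` on paired lists of label grids returns one correlation value per class, which mirrors the kind of per-category comparison the article reports.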

“Traditionally, the ability to envision a scene from sounds is a uniquely human capability, reflecting our deep sensory connection with the environment. Our use of advanced AI techniques supported by large language models (LLMs) demonstrates that machines have the potential to approximate this human sensory experience,” Kang said. “This suggests that AI can extend beyond mere recognition of physical surroundings to potentially enrich our understanding of human subjective experiences at different places.”

In addition to approximating the proportions of sky, greenery and buildings, the generated images often maintained the architectural styles and distances between objects of their real-world counterparts and accurately reflected whether soundscapes were recorded in sunny, cloudy or nighttime lighting conditions. The authors note that lighting information might come from variations in activity in the soundscapes. For example, traffic sounds or the chirping of nocturnal insects could reveal time of day. Such observations further the understanding of how multisensory factors contribute to our experience of a place.

“When you close your eyes and listen, the sounds around you paint pictures in your mind,” Kang said. “For instance, the distant hum of traffic becomes a bustling cityscape, while the gentle rustle of leaves ushers you into a serene forest. Each sound weaves a vivid tapestry of scenes, as if by magic, in the theater of your imagination.”

Kang’s work focuses on using geospatial AI to study the interaction of humans with their environments. In another recent paper published in Nature, he and his co-authors examined the potential of AI to capture the characteristics that give cities their unique identities.