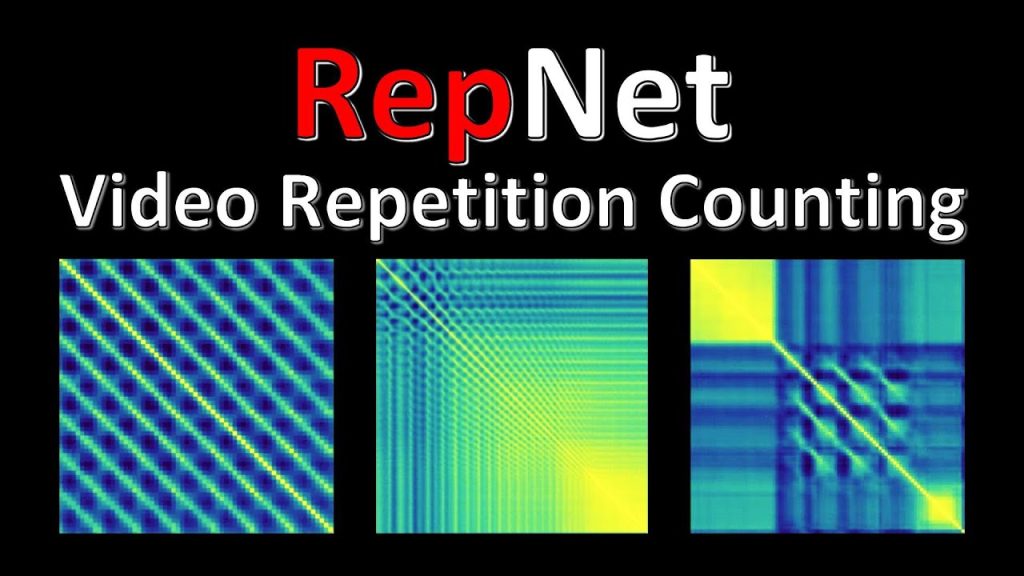

Counting repeated actions in a video is one of the easiest tasks for humans, yet it remains incredibly hard for machines. RepNet achieves state-of-the-art results by creating an information bottleneck in the form of a temporal self-similarity matrix. This matrix relates video frames to each other in a way that forces the model to surface the information relevant for counting. Along with the model, the authors introduce a new dataset for evaluating counting models.
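To make the bottleneck idea concrete, here is a minimal NumPy sketch of a temporal self-similarity matrix (TSM). It follows the paper's description as I understand it: negative squared Euclidean distance between per-frame embeddings, then a row-wise softmax. The `temperature` value, the helper names, and the toy embeddings are assumptions for illustration. The period read-out at the end is a hand-written heuristic swapped in to show why repetitions appear as bright off-diagonal stripes. RepNet itself feeds the TSM to a learned period predictor instead.

```python
import numpy as np


def temporal_self_similarity(embeddings: np.ndarray, temperature: float = 1.0) -> np.ndarray:
    """Build a (T, T) temporal self-similarity matrix from (T, D) per-frame embeddings.

    Assumption: similarity is the negative squared Euclidean distance followed by a
    row-wise softmax; `temperature` is a placeholder value, not taken from the paper.
    """
    sq_norms = np.sum(embeddings ** 2, axis=1)
    # ||x_i - x_j||^2 = ||x_i||^2 + ||x_j||^2 - 2 <x_i, x_j>
    sq_dists = sq_norms[:, None] + sq_norms[None, :] - 2.0 * embeddings @ embeddings.T
    logits = -sq_dists / temperature
    logits -= logits.max(axis=1, keepdims=True)  # stabilise the softmax
    weights = np.exp(logits)
    return weights / weights.sum(axis=1, keepdims=True)


def naive_period_from_tsm(tsm: np.ndarray, max_period: int, rel_threshold: float = 0.5) -> int:
    """Hypothetical heuristic, NOT RepNet's learned period predictor.

    Scores each lag by the mean of the TSM's lag-th off-diagonal. Multiples of the
    true period score about as high as the period itself, so return the smallest
    lag whose score reaches `rel_threshold` times the best score.
    """
    scores = np.array([np.diagonal(tsm, offset=lag).mean() for lag in range(1, max_period + 1)])
    candidates = np.flatnonzero(scores >= rel_threshold * scores.max())
    return int(candidates[0]) + 1


# Toy clip: 8 "phase" embeddings repeated 5 times (40 frames) plus a little noise.
rng = np.random.default_rng(0)
prototypes = rng.normal(size=(8, 16))
frames = np.tile(prototypes, (5, 1)) + 0.05 * rng.normal(size=(40, 16))

tsm = temporal_self_similarity(frames)
estimated_period = naive_period_from_tsm(tsm, max_period=16)
print(tsm.shape, estimated_period)  # (40, 40) 8
```

Every frame in the toy clip shares its phase with frames exactly 8 steps away, so the 8th off-diagonal lights up. Lag 16 scores just as high, which is why the heuristic takes the smallest near-maximal lag instead of the raw argmax.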

OUTLINE:

0:00 – Intro & Overview

2:30 – Problem Statement

5:15 – Output & Loss

6:25 – Per-Frame Embeddings

11:20 – Temporal Self-Similarity Matrix

19:00 – Periodicity Predictor

25:50 – Architecture Recap

27:00 – Synthetic Dataset

30:15 – Countix Dataset

31:10 – Experiments

33:35 – Applications

35:30 – Conclusion & Comments

Paper Website:

Colab:

Abstract:

We present an approach for estimating the period with which an action is repeated in a video. The crux of the approach lies in constraining the period prediction module to use temporal self-similarity as an intermediate representation bottleneck that allows generalization to unseen repetitions in videos in the wild. We train this model, called RepNet, with a synthetic dataset that is generated from a large unlabeled video collection by sampling short clips of varying lengths and repeating them with different periods and counts. This combination of synthetic data and a powerful yet constrained model allows us to predict periods in a class-agnostic fashion. Our model substantially exceeds the state-of-the-art performance on existing periodicity (PERTUBE) and repetition counting (QUVA) benchmarks. We also collect a new challenging dataset called Countix (~90 times larger than existing datasets), which captures the challenges of repetition counting in real-world videos.
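The training recipe in the abstract (sample a short clip, then repeat it with a chosen period and count) can be sketched roughly as below. This is a hypothetical NumPy illustration, not the authors' pipeline: the function names, the `pad` of untouched context frames around the repetitions, and the integer stand-in "video" are all assumptions. It also assumes a counting rule in which every frame judged periodic contributes 1/period, so 24 frames with period 6 add up to a count of 4.

```python
import numpy as np


def make_synthetic_repetition(video: np.ndarray, clip_len: int, num_reps: int,
                              rng: np.random.Generator, pad: int = 0):
    """Hypothetical sketch of the synthetic-data idea: repeat a short sampled clip.

    `video` has shape (num_frames, ...). Returns the new frame sequence and a
    per-frame period label; 0 marks the `pad` non-repeating context frames
    (an assumption of this sketch).
    """
    max_start = len(video) - clip_len - pad
    if max_start < pad:
        raise ValueError("video too short for this clip_len/pad")
    start = int(rng.integers(pad, max_start + 1))  # upper bound is exclusive
    clip = video[start:start + clip_len]
    before = video[start - pad:start]
    after = video[start + clip_len:start + clip_len + pad]
    frames = np.concatenate([before] + [clip] * num_reps + [after], axis=0)
    periods = np.concatenate([
        np.zeros(pad, dtype=int),
        np.full(clip_len * num_reps, clip_len, dtype=int),
        np.zeros(pad, dtype=int),
    ])
    return frames, periods


def count_from_periods(periods, periodicity, threshold: float = 0.5) -> float:
    """Assumed counting rule: sum 1/period over frames judged periodic."""
    periods = np.asarray(periods, dtype=float)
    mask = (np.asarray(periodicity) > threshold) & (periods > 0)
    return float(np.sum(1.0 / periods[mask]))


rng = np.random.default_rng(0)
video = np.arange(100).reshape(100, 1)  # stand-in: 100 frames with a 1-D "feature"
frames, periods = make_synthetic_repetition(video, clip_len=6, num_reps=4, rng=rng, pad=3)
periodicity = (periods > 0).astype(float)  # ground truth: 1 inside the repeats
count = count_from_periods(periods, periodicity)
print(frames.shape[0], round(count, 6))  # 30 4.0
```

In this sketch the generator keeps per-frame period labels rather than only the final count, so a model could be supervised frame by frame and the count then follows from the period predictions.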

Authors: Debidatta Dwibedi, Yusuf Aytar, Jonathan Tompson, Pierre Sermanet, Andrew Zisserman
