#ai #tech #science

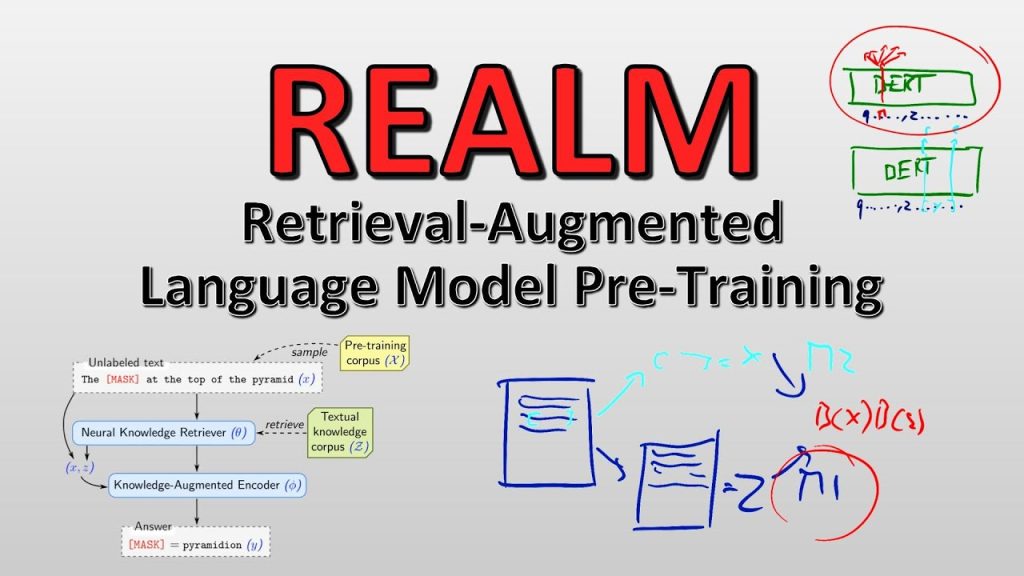

Open-domain question answering is one of the most challenging tasks in NLP. To answer a question, the model can retrieve arbitrary documents from an indexed corpus to gather more information. REALM shows how Masked Language Modeling (MLM) pretraining can be used to train a retriever for relevant documents end-to-end, and it improves over the previous state of the art by a significant margin.
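To make the retrieve-then-predict idea a bit more concrete, here is a minimal NumPy sketch. It is not the paper's implementation. It assumes that queries and documents are already embedded as vectors, that relevance is their inner product, and that a separate reader has already produced a probability of the correct answer for each document. The names `retrieve_and_marginalize`, `query_vec`, `doc_vecs` and `answer_probs` are hypothetical, and the brute-force top-k below stands in for a real MIPS index.

```python
import numpy as np


def softmax(scores):
    """Numerically stable softmax over a 1-D array of scores."""
    shifted = scores - np.max(scores)
    exp = np.exp(shifted)
    return exp / exp.sum()


def retrieve_and_marginalize(query_vec, doc_vecs, answer_probs, k=2):
    """Toy retrieve-then-predict step (illustrative assumption, not REALM's code).

    query_vec:    (d,) embedding of the question x
    doc_vecs:     (n, d) embeddings of the candidate documents z
    answer_probs: (n,) stand-in for the reader's p(y | z, x) per document
    """
    # Relevance score of each document: inner product with the query.
    scores = doc_vecs @ query_vec
    # Brute-force top-k; a real system would use a MIPS index here.
    top_k = np.argsort(-scores)[:k]
    # Retrieval distribution p(z | x), restricted to the top-k documents.
    p_z = softmax(scores[top_k])
    # Marginalize over retrieved documents: p(y | x) = sum_z p(y | z, x) p(z | x).
    p_y = float(np.sum(p_z * answer_probs[top_k]))
    return top_k, p_z, p_y


if __name__ == "__main__":
    query_vec = np.array([1.0, 0.0])
    doc_vecs = np.array([[2.0, 0.0], [0.0, 1.0], [1.0, 0.0]])
    answer_probs = np.array([0.9, 0.1, 0.5])
    top_k, p_z, p_y = retrieve_and_marginalize(query_vec, doc_vecs, answer_probs)
    print(top_k, p_z, p_y)
```

Because the answer probability is a weighted sum over retrieved documents, the gradient of its logarithm also flows into the relevance scores. This is what lets an MLM-style objective act as a training signal for the retriever.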

OUTLINE:

0:00 – Introduction & Overview

4:30 – World Knowledge in Language Models

8:15 – Masked Language Modeling for Latent Document Retrieval

14:50 – Problem Formulation

17:30 – Knowledge Retriever Model using MIPS

23:50 – Question Answering Model

27:50 – Architecture Recap

29:55 – Analysis of the Loss Gradient

34:15 – Initialization using the Inverse Cloze Task

41:40 – Prohibiting Trivial Retrievals

44:05 – Null Document

45:00 – Salient Span Masking

50:15 – My Idea on Salient Span Masking

51:50 – Experimental Results and Ablations

57:30 – Concrete Example from the Model

Paper:

Code:

My Video on GPT-3:

My Video on BERT:

My Video on Word2Vec:

Abstract:

Language model pre-training has been shown to capture a surprising amount of world knowledge, crucial for NLP tasks such as question answering. However, this knowledge is stored implicitly in the parameters of a neural network, requiring ever-larger networks to cover more facts.

To capture knowledge in a more modular and interpretable way, we augment language model pre-training with a latent knowledge retriever, which allows the model to retrieve and attend over documents from a large corpus such as Wikipedia, used during pre-training, fine-tuning and inference. For the first time, we show how to pre-train such a knowledge retriever in an unsupervised manner, using masked language modeling as the learning signal and backpropagating through a retrieval step that considers millions of documents.

We demonstrate the effectiveness of Retrieval-Augmented Language Model pre-training (REALM) by fine-tuning on the challenging task of Open-domain Question Answering (Open-QA). We compare against state-of-the-art models for both explicit and implicit knowledge storage on three popular Open-QA benchmarks, and find that we outperform all previous methods by a significant margin (4-16% absolute accuracy), while also providing qualitative benefits such as interpretability and modularity.

Authors: Kelvin Guu, Kenton Lee, Zora Tung, Panupong Pasupat, Ming-Wei Chang

Links:

YouTube:

Twitter:

Discord:

BitChute:

Minds:

Parler:

LinkedIn:

If you want to support me, the best thing to do is to share the content 🙂

If you want to support me financially (completely optional and voluntary, but a lot of people have asked for this):

SubscribeStar:

Patreon:

Bitcoin (BTC): bc1q49lsw3q325tr58ygf8sudx2dqfguclvngvy2cq

Ethereum (ETH): 0x7ad3513E3B8f66799f507Aa7874b1B0eBC7F85e2

Litecoin (LTC): LQW2TRyKYetVC8WjFkhpPhtpbDM4Vw7r9m

Monero (XMR): 4ACL8AGrEo5hAir8A9CeVrW8pEauWvnp1WnSDZxW7tziCDLhZAGsgzhRQABDnFy8yuM9fWJDviJPHKRjV4FWt19CJZN9D4n
