Our Futures Program, launched in 2023, aims to guide humanity towards the beneficial outcomes made possible by transformative technologies. This year, as part of that program, we are opening two new funding opportunities to support research into the ways that artificial intelligence can be harnessed safely to make the world a better place.

The first request for proposals (RFP) calls for papers evaluating and predicting the impact of AI on the achievement of the UN Sustainable Development Goals (SDGs) relating to poverty, healthcare, energy and climate change. The second RFP calls for designs of trustworthy global mechanisms or institutions to govern advanced AI in the near future.

Selected proposals in either category will receive a one-time grant of $15,000, to be used at the researcher’s discretion. We intend to make several grants in each track.

Applications for both tracks are now open and will remain so until April 1st, 2024.

Request 1: The Impact of AI on Achieving SDGs in Poverty, Health, Energy and Climate

There has been extensive academic research and, more recently, public discourse on the current harms and emerging risks of AI. In contrast, the discussion around the benefits of AI has been quite ambiguous.

The prospect of enormous benefits down the road from AI – that it will “eliminate poverty,” “cure diseases” or “solve climate change” – helps to drive a corporate race to build ever more powerful systems. But the type of AI capabilities necessary to realize those benefits is unclear. As that race brings increasing levels of risk, we need a concrete and evidence-based understanding of the benefits in order to develop, deploy and regulate this technology in a way that brings genuine benefits to everyone’s lives.

One way of doing that is to see how AI is affecting the achievement of a broadly supported list of global priorities. To that effect, we are looking for researchers to select a target from one of the four UN Sustainable Development Goals (SDGs) we have chosen to focus on: goals 1 (Poverty), 3 (Health), 7 (Energy), and 13 (Climate). Researchers should then analyse the (direct or indirect) impact of AI on the realisation of that target up to the present, and project how AI could accelerate, inhibit, or prove irrelevant to the achievement of that goal by 2030.

We hope that the resulting papers will enrich the vital discussion of whether AI can in fact solve these crucial challenges, and, if so, how it can be made or directed to do so.

Read more and apply

Request 2: Designs for global institutions governing advanced AI

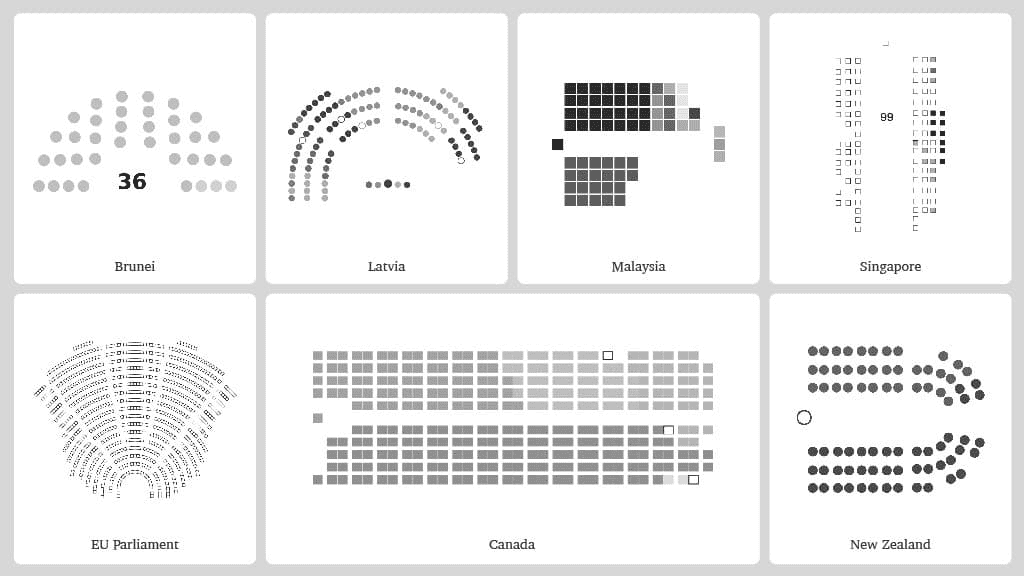

Reaching a stable future world may require restricting AI development such that the world has (a) no AGI projects, (b) a single, global AGI project, or (c) multiple monitored AGI projects.

Here we define AGI as a system which outperforms human experts in non-physical tasks across a wide range of domains, including metacognitive abilities like learning new skills. A stable state would be a scenario that evolves at the cautious timescale determined by thorough risk assessments rather than corporate competition.

The success of any of these stable futures depends upon diligent new mechanisms and institutions which can account for the newly introduced risks and benefits of AI capability development. It is not yet clear what such organizations would look like, how they would command trust or evade capture, and so on.

Researchers must design trustworthy global governance mechanisms or institutions that can help stabilise a future with 0, 1, or more AGI projects – or a mechanism which aids more than one of these scenarios. Proposals should outline the specifications of their mechanism, and explain how it will minimise the risks of advanced AI and maximise the distribution of its benefits.

Without a clear articulation of how trustworthy global AGI governance could work, the default narrative is that it is impossible. This track is thus born of a sincere hope that the default narrative is wrong, a hope that AI, if we keep it under control and use it well, will empower – rather than disempower – humans the world over.

Read more and apply

This content was first published at futureoflife.org on February 14, 2024.

About the Future of Life Institute

The Future of Life Institute (FLI) is a global think tank with a team of 20+ full-time staff operating across the US and Europe. FLI has been working to steer the development of transformative technologies towards benefitting life and away from extreme large-scale risks since its founding in 2014. Find out more about our mission or explore our work.