On September 5, IT Home reported that Alibaba's Tongyi Qianwen (Qwen) team has launched its latest model, Qwen3-Max-Preview, on its official website and on OpenRouter. According to the official description, it is the most powerful language model in the Tongyi Qianwen series.

IT Home provides the following relevant links:

Official website: Qwen Chat

OpenRouter: Qwen3 Max – API, Providers, Stats

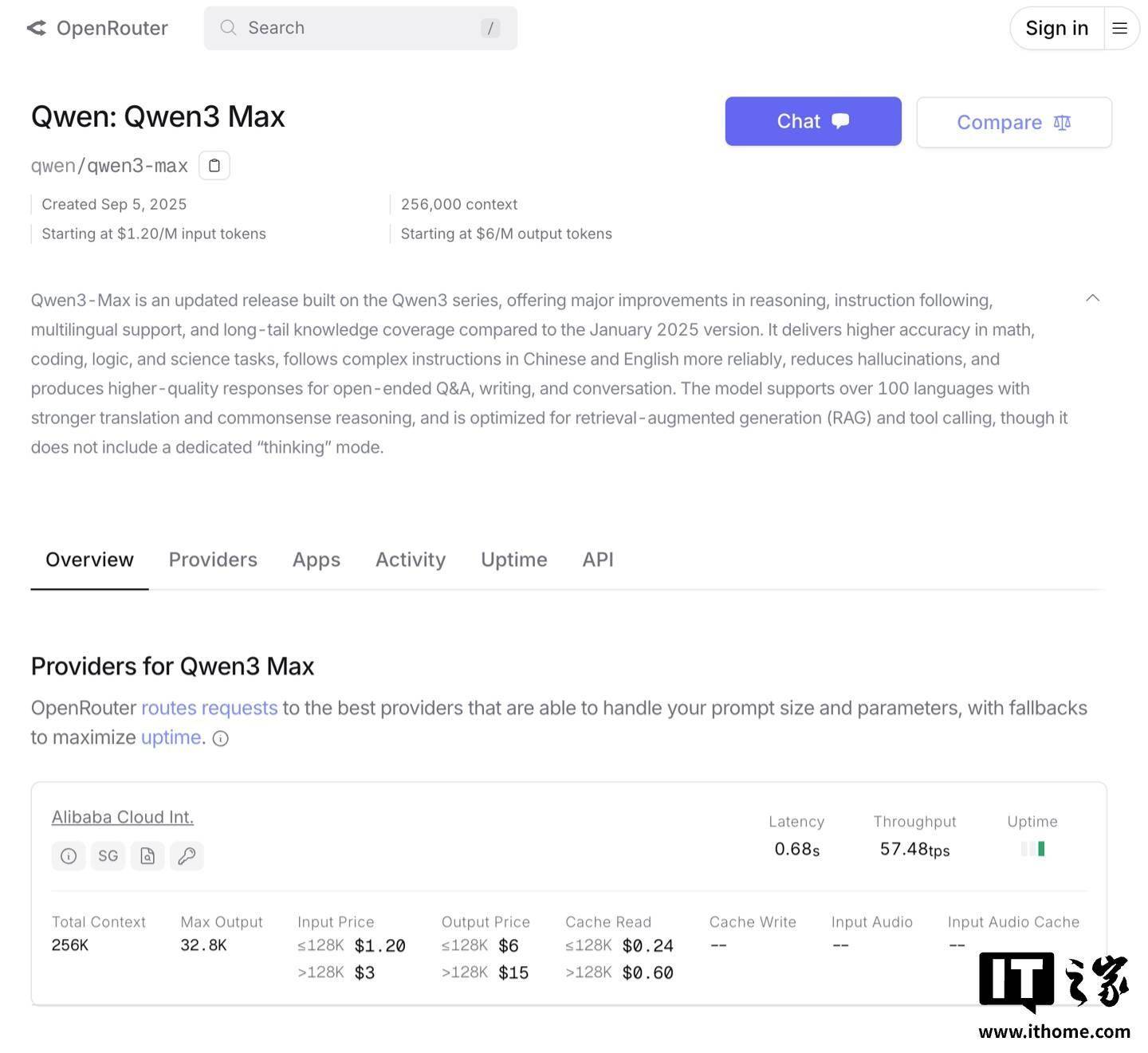

The model's OpenRouter listing is summarized as follows:

Input: $1.20 (approximately ¥8.6 at current exchange rates) per million tokens

Output: $6 (approximately ¥42.8 at current exchange rates) per million tokens
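As a rough illustration of how these per-million-token prices add up, here is a minimal Python sketch that estimates the USD cost of one request from its input and output token counts. It assumes flat pricing at the two rates listed above, with no context-length tiers, caching discounts, or provider surcharges; the function name `request_cost_usd` and the example token counts are hypothetical.

```python
from decimal import Decimal

# Per-million-token prices for Qwen3-Max as listed on OpenRouter (USD).
INPUT_PRICE_PER_M = Decimal("1.20")
OUTPUT_PRICE_PER_M = Decimal("6.00")
TOKENS_PER_M = Decimal(1_000_000)


def request_cost_usd(input_tokens: int, output_tokens: int) -> Decimal:
    """Estimate the USD cost of one request (hypothetical helper).

    Assumes flat per-token pricing: no context-length tiers,
    caching discounts, or provider-specific surcharges.
    """
    if input_tokens < 0 or output_tokens < 0:
        raise ValueError("token counts must be non-negative")
    input_cost = INPUT_PRICE_PER_M * input_tokens / TOKENS_PER_M
    output_cost = OUTPUT_PRICE_PER_M * output_tokens / TOKENS_PER_M
    return input_cost + output_cost


if __name__ == "__main__":
    # Hypothetical workload: 200,000 input tokens and 50,000 output tokens.
    print(request_cost_usd(200_000, 50_000))  # 0.54
```

For that hypothetical request the estimate is $0.24 for input plus $0.30 for output, or $0.54 in total. `Decimal` is used instead of floats so that the cent amounts come out exact.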

Qwen3-Max is an update to the Qwen3 series that, compared with the January 2025 version, brings significant improvements in reasoning, instruction following, multilingual support, and long-tail knowledge coverage. It achieves higher accuracy on mathematics, coding, logic, and science tasks, follows complex instructions in both Chinese and English more reliably, hallucinates less, and produces higher-quality responses for open-ended questions, writing, and conversation.

The model supports more than 100 languages, offers stronger translation and commonsense reasoning, and is optimized for retrieval-augmented generation (RAG) and tool calling, although it does not include a dedicated "thinking" mode.
