Retrieval Augmented Generation (RAG) applications have become increasingly popular due to their ability to enhance generative AI tasks with contextually relevant information. Implementing RAG-based applications requires careful attention to security, particularly when handling sensitive data. The protection of personally identifiable information (PII), protected health information (PHI), and confidential business data is crucial because this information flows through RAG systems. Failing to address these security considerations can lead to significant risks and potential data breaches. For healthcare organizations, financial institutions, and enterprises handling confidential information, these risks can result in regulatory compliance violations and breach of customer trust. See the OWASP Top 10 for Large Language Model Applications to learn more about the unique security risks associated with generative AI applications.

Developing a comprehensive threat model for your generative AI applications can help you identify potential vulnerabilities related to sensitive data leakage, prompt injections, unauthorized data access, and more. To assist in this effort, AWS provides a range of generative AI security strategies that you can use to create appropriate threat models.

Amazon Bedrock Knowledge Bases is a fully managed capability that simplifies the management of the entire RAG workflow, empowering organizations to give foundation models (FMs) and agents contextual information from your private data sources to deliver more relevant and accurate responses tailored to your specific needs. Additionally, with Amazon Bedrock Guardrails, you can implement safeguards in your generative AI applications that are customized to your use cases and responsible AI policies. You can redact sensitive information such as PII to protect privacy using Amazon Bedrock Guardrails.

RAG workflow: Converting data to actionable knowledge

RAG consists of two major steps:

Ingestion – Preprocessing unstructured data, which includes converting the data into text documents and splitting the documents into chunks. Document chunks are then encoded with an embedding model to convert them to document embeddings. These encoded document embeddings along with the original document chunks in the text are then stored to a vector store, such as Amazon OpenSearch Service.

Augmented retrieval – At query time, the user’s query is first encoded with the same embedding model to convert the query into a query embedding. The generated query embedding is then used to perform a similarity search on the stored document embeddings to find and retrieve semantically similar document chunks to the query. After the document chunks are retrieved, the user prompt is augmented by passing the retrieved chunks as additional context, so that the text generation model can answer the user query using the retrieved context. If sensitive data isn’t sanitized before ingestion, this might lead to retrieving sensitive data from the vector store and inadvertently leak the sensitive data to unauthorized users as part of the model response.

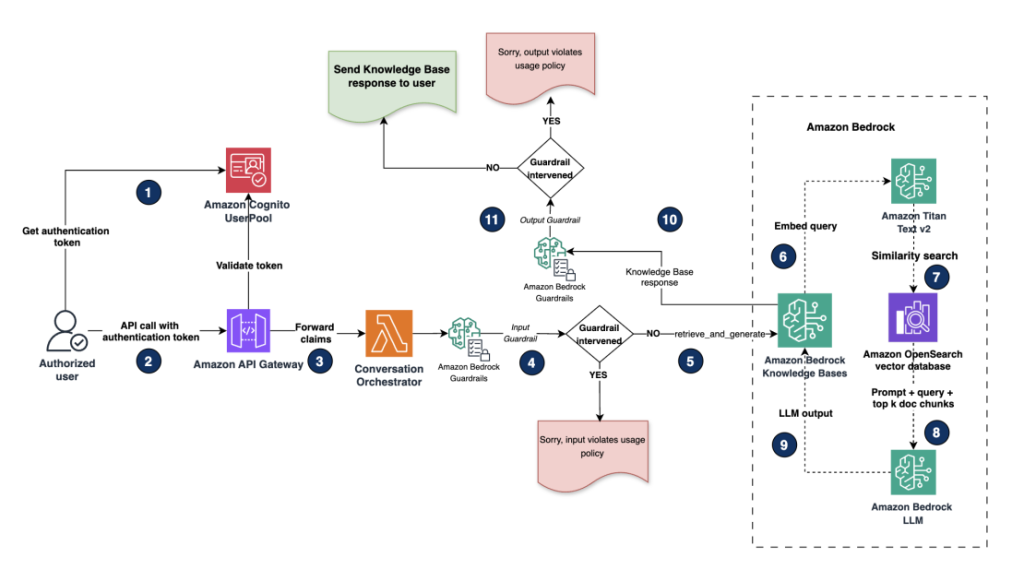

The following diagram shows the architectural workflow of a RAG system, illustrating how a user’s query is processed through multiple stages to generate an informed response

Solution overview

In this post we present two architecture patterns: data redaction at storage level and role-based access, for protecting sensitive data when building RAG-based applications using Amazon Bedrock Knowledge Bases.

Data redaction at storage level – Identifying and redacting (or masking) sensitive data before storing them to the vector store (ingestion) using Amazon Bedrock Knowledge Bases. This zero-trust approach to data sensitivity reduces the risk of sensitive information being inadvertently disclosed to unauthorized users.

Role-based access to sensitive data – Controlling selective access to sensitive information based on user roles and permissions during retrieval. This approach is best in situations where sensitive data needs to be stored in the vector store, such as in healthcare settings with distinct user roles like administrators (doctors) and non-administrators (nurses or support personnel).

For all data stored in Amazon Bedrock, the AWS shared responsibility model applies.

Let’s dive in to understand how to implement the data redaction at storage level and role-based access architecture patterns effectively.

Scenario 1: Identify and redact sensitive data before ingesting into the vector store

The ingestion flow implements a four-step process to help protect sensitive data when building RAG applications with Amazon Bedrock:

Source document processing – An AWS Lambda function monitors the incoming text documents landing to a source Amazon Simple Storage Service (Amazon S3) bucket and triggers an Amazon Comprehend PII redaction job to identify and redact (or mask) sensitive data in the documents. An Amazon EventBridge rule triggers the Lambda function every 5 minutes. The document processing pipeline described here only processes text documents. To handle documents containing embedded images, you should implement additional preprocessing steps to extract and analyze images separately before ingestion.

PII identification and redaction – The Amazon Comprehend PII redaction job analyzes the text content to identify and redact PII entities. For example, the job identifies and redacts sensitive data entities like name, email, address, and other financial PII entities.

Deep security scanning – After redaction, documents move to another folder where Amazon Macie verifies redaction effectiveness and identifies any remaining sensitive data objects. Documents flagged by Macie go to a quarantine bucket for manual review, while cleared documents move to a redacted bucket ready for ingestion. For more details on data ingestion, see Sync your data with your Amazon Bedrock knowledge base.

Secure knowledge base integration – Redacted documents are ingested into the knowledge base through a data ingestion job. In case of multi-modal content, for enhanced security, consider implementing:

A dedicated image extraction and processing pipeline.

Image analysis to detect and redact sensitive visual information.

Amazon Bedrock Guardrails to filter inappropriate image content during retrieval.

This multi-layered approach focuses on securing text content while highlighting the importance of implementing additional safeguards for image processing. Organizations should evaluate their multi-modal document requirements and extend the security framework accordingly.

Ingestion flow

The following illustration demonstrates a secure document processing pipeline for handling sensitive data before ingestion into Amazon Bedrock Knowledge Bases.

The high-level steps are as follows:

The document ingestion flow begins when documents containing sensitive data are uploaded to a monitored inputs folder in the source bucket. An EventBridge rule triggers a Lambda function (ComprehendLambda).

The ComprehendLambda function monitors for new files in the inputs folder of the source bucket and moves landed files to a processing folder. It then launches an asynchronous Amazon Comprehend PII redaction analysis job and records the job ID and status in an Amazon DynamoDB JobTracking table for monitoring job completion. The Amazon Comprehend PII redaction job automatically redacts and masks sensitive elements such as names, addresses, phone numbers, Social Security numbers, driver’s license IDs, and banking information with the entity type. The job replaces these identified PII entities with placeholder tokens, such as [NAME], [SSN] etc. The entities to mask can be configured using RedactionConfig. For more information, see Redacting PII entities with asynchronous jobs (API). The MaskMode in RedactionConfig is set to REPLACE_WITH_PII_ENTITY_TYPE instead of MASK; redacting with a MaskCharacter would affect the quality of retrieved documents because many documents could contain the same MaskCharacter, thereby affecting the retrieval quality. After completion, the redacted files move to the for_macie_scan folder for secondary scanning.

The secondary verification phase employs Macie for additional sensitive data detection on the redacted files. Another Lambda function (MacieLambda) monitors the completion of the Amazon Comprehend PII redaction job. When the job is complete, the function triggers a Macie one-time sensitive data detection job with files in the for_macie_scan folder.

The final stage integrates with the Amazon Bedrock knowledge base. The findings from Macie determine the next steps: files with high severity ratings (3 or higher) are moved to a quarantine folder for human review by authorized personnel with appropriate permissions and access controls, whereas files with low severity ratings are moved to a designated redacted bucket, which then triggers a data ingestion job to the Amazon Bedrock knowledge base.

This process helps prevent sensitive details from being exposed when the model generates responses based on retrieved data.

Augmented retrieval flow

The augmented retrieval flow diagram shows how user queries are processed securely. It illustrates the complete workflow from user authentication through Amazon Cognito to response generation with Amazon Bedrock, including guardrail interventions that help prevent policy violations in both inputs and outputs.

The high-level steps are as follows:

For our demo, we use a web application UI built using Streamlit. The web application launches with a login form with user name and password fields.

The user enters the credentials and logs in. User credentials are authenticated using Amazon Cognito user pools. Amazon Cognito acts as our OpenID connect (OIDC) identity provider (IdP) to provide authentication and authorization services for this application. After authentication, Amazon Cognito generates and returns identity, access and refresh tokens in JSON web token (JWT) format back to the web application. Refer to Understanding user pool JSON web tokens (JWTs) for more information.

After the user is authenticated, they are logged in to the web application, where an AI assistant UI is presented to the user. The user enters their query (prompt) in the assistant’s text box. The query is then forwarded using a REST API call to an Amazon API Gateway endpoint along with the access tokens in the header.

API Gateway forwards the payload along with the claims included in the header to a conversation orchestrator Lambda function.

The conversation orchestrator Lambda function processes the user prompt and model parameters received from the UI and calls the RetrieveAndGenerate API to the Amazon Bedrock knowledge base. Input guardrails are first applied to this request to perform input validation on the user query.

The guardrail evaluates and applies predefined responsible AI policies using content filters, denied topic filters and word filters on user input. For more information on creating guardrail filters, see Create a guardrail.

If the predefined input guardrail policies are triggered on the user input, the guardrails intervene and return a preconfigured message like, “Sorry, your query violates our usage policy.”

Requests that don’t trigger a guardrail policy will retrieve the documents from the knowledge base and generate a response using the RetrieveAndGenerate. Optionally, if users choose to run Retrieve separately, guardrails can also be applied at this stage. Guardrails during document retrieval can help block sensitive data returned from the vector store.

During retrieval, Amazon Bedrock Knowledge Bases encodes the user query using the Amazon Titan Text v2 embeddings model to generate a query embedding.

Amazon Bedrock Knowledge Bases performs a similarity search with the query embedding against the document embeddings in the OpenSearch Service vector store and retrieves top-k chunks. Optionally, post-retrieval, you can incorporate a reranking model to improve the retrieved results quality from the OpenSearch vector store. Refer to Improve the relevance of query responses with a reranker model in Amazon Bedrock for more details.

Finally, the user prompt is augmented with the retrieved document chunks from the vector store as context and the final prompt is sent to an Amazon Bedrock foundation model (FM) for inference. Output guardrail policies are again applied post-response generation. If the predefined output guardrail policies are triggered, the model generates a predefined response like “Sorry, your query violates our usage policy.” If no policies are triggered, then the large language model (LLM) generated response is sent to the user.

To deploy Scenario 1, find the instructions here on Github

Scenario 2: Implement role-based access to PII data during retrieval

In this scenario, we demonstrate a comprehensive security approach that combines role-based access control (RBAC) with intelligent PII guardrails for RAG applications. It integrates Amazon Bedrock with AWS identity services to automatically enforce security through different guardrail configurations for admin and non-admin users.

The solution uses the metadata filtering capabilities of Amazon Bedrock Knowledge Bases to dynamically filter documents during similarity searches using metadata attributes assigned before ingestion. For example, admin and non-admin metadata attributes are created and attached to relevant documents before the ingestion process. During retrieval, the system returns only the documents with metadata matching the user’s security role and permissions and applies the relevant guardrail policies to either mask or block sensitive data detected on the LLM output.

This metadata-driven approach, combined with features like custom guardrails, real-time PII detection, masking, and comprehensive access logging creates a robust framework that maintains the security and utility of the RAG application while enforcing RBAC.

The following diagram illustrates how RBAC works with metadata filtering in the vector database.

For a detailed understanding of how metadata filtering works, see Amazon Bedrock Knowledge Bases now supports metadata filtering to improve retrieval accuracy.

Augmented retrieval flow

The augmented retrieval flow diagram shows how user queries are processed securely based on role-based access.

The workflow consists of the following steps:

The user is authenticated using an Amazon Cognito user pool. It generates a validation token after successful authentication.

The user query is sent using an API call along with the authentication token through Amazon API Gateway.

Amazon API Gateway forwards the payload and claims to an integration Lambda function.

The Lambda function extracts the claims from the header and checks for user role and determines whether to use an admin guardrail or a non-admin guardrail based on the access level.

Next, the Amazon Bedrock Knowledge Bases RetrieveAndGenerate API is invoked along with the guardrail applied on the user input.

Amazon Bedrock Knowledge Bases embeds the query using the Amazon Titan Text v2 embeddings model.

Amazon Bedrock Knowledge Bases performs similarity searches on the OpenSearch Service vector database and retrieves relevant chunks (optionally, you can improve the relevance of query responses using a reranker model in the knowledge base).

The user prompt is augmented with the retrieved context from the previous step and sent to the Amazon Bedrock FM for inference.

Based on the user role, the LLM output is evaluated against defined Responsible AI policies using either admin or non-admin guardrails.

Based on guardrail evaluation, the system either returns a “Sorry! Cannot Respond” message if the guardrail intervenes, or delivers an appropriate response with no masking on the output for admin users or sensitive data masked for non-admin users.

To deploy Scenario 2, find the instructions here on Github

This security architecture combines Amazon Bedrock guardrails with granular access controls to automatically manage sensitive information exposure based on user permissions. The multi-layered approach makes sure organizations maintain security compliance while fully utilizing their knowledge base, proving security and functionality can coexist.

Customizing the solution

The solution offers several customization points to enhance its flexibility and adaptability:

Integration with external APIs – You can integrate existing PII detection and redaction solutions with this system. The Lambda function can be modified to use custom APIs for PHI or PII handling before calling the Amazon Bedrock Knowledge Bases API.

Multi-modal processing – Although the current solution focuses on text, it can be extended to handle images containing PII by incorporating image-to-text conversion and caption generation. For more information about using Amazon Bedrock for processing multi-modal content during ingestion, see Parsing options for your data source.

Custom guardrails – Organizations can implement additional specialized security measures tailored to their specific use cases.

Structured data handling – For queries involving structured data, the solution can be customized to include Amazon Redshift as a structured data store as opposed to OpenSearch Service. Data masking and redaction on Amazon Redshift can be achieved by applying dynamic data masking (DDM) policies, including fine-grained DDM policies like role-based access control and column-level policies using conditional dynamic data masking.

Agentic workflow integration – When incorporating an Amazon Bedrock knowledge base with an agentic workflow, additional safeguards can be implemented to protect sensitive data from external sources, such as API calls, tool use, agent action groups, session state, and long-term agentic memory.

Response streaming support – The current solution uses a REST API Gateway endpoint that doesn’t support streaming. For streaming capabilities, consider WebSocket APIs in API Gateway, Application Load Balancer (ALB), or custom solutions with chunked responses using client-side reassembly or long-polling techniques.

With these customization options, you can tailor the solution to your specific needs, providing a robust and flexible security framework for your RAG applications. This approach not only protects sensitive data but also maintains the utility and efficiency of the knowledge base, allowing users to interact with the system while automatically enforcing role-appropriate information access and PII handling.

Shared security responsibility: The customer’s role

At AWS, security is our top priority and security in the cloud is a shared responsibility between AWS and our customers. With AWS, you control your data by using AWS services and tools to determine where your data is stored, how it is secured, and who has access to it. Services such as AWS Identity and Access Management (IAM) provide robust mechanisms for securely controlling access to AWS services and resources.

To enhance your security posture further, services like AWS CloudTrail and Amazon Macie offer advanced compliance, detection, and auditing capabilities. When it comes to encryption, AWS CloudHSM and AWS Key Management Service (KMS) enable you to generate and manage encryption keys with confidence.

For organizations seeking to establish governance and maintain data residency controls, AWS Control Tower offers a comprehensive solution. For more information on Data protection and Privacy, refer to Data Protection and Privacy at AWS.

While our solution demonstrates the use of PII detection and redaction techniques, it does not provide an exhaustive list of all PII types or detection methods. As a customer, you bear the responsibility for implementing the appropriate PII detection types and redaction methods using AWS services, including Amazon Bedrock Guardrails and other open-source libraries. The regular expressions configured in Bedrock Guardrails within this solution serve as a reference example only and do not cover all possible variations for detecting PII types. For instance, date of birth (DOB) formats can vary widely. Therefore, it falls on you to configure Bedrock Guardrails and policies to accurately detect the PII types relevant to your use case. Amazon Bedrock maintains strict data privacy standards. The service does not store or log your prompts and completions, nor does it use them to train AWS models or share them with third parties. We implement this through our Model Deployment Account architecture – each AWS Region where Amazon Bedrock is available has a dedicated deployment account per model provider, managed exclusively by the Amazon Bedrock service team. Model providers have no access to these accounts. When a model is delivered to AWS, Amazon Bedrock performs a deep copy of the provider’s inference and training software into these controlled accounts for deployment, making sure that model providers cannot access Amazon Bedrock logs or customer prompts and completions.

Ultimately, while we provide the tools and infrastructure, the responsibility for securing your data using AWS services rests with you, the customer. This shared responsibility model makes sure that you have the flexibility and control to implement security measures that align with your unique requirements and compliance needs, while we maintain the security of the underlying cloud infrastructure. For comprehensive information about Amazon Bedrock security, please refer to the Amazon Bedrock Security documentation.

Conclusion

In this post, we explored two approaches for securing sensitive data in RAG applications using Amazon Bedrock. The first approach focused on identifying and redacting sensitive data before ingestion into an Amazon Bedrock knowledge base, and the second demonstrated a fine-grained RBAC pattern for managing access to sensitive information during retrieval. These solutions represent just two possible approaches among many for securing sensitive data in generative AI applications.

Security is a multi-layered concern that requires careful consideration across all aspects of your application architecture. Looking ahead, we plan to dive deeper into RBAC for sensitive data within structured data stores when used with Amazon Bedrock Knowledge Bases. This can provide additional granularity and control over data access patterns while maintaining security and compliance requirements. Securing sensitive data in RAG applications requires ongoing attention to evolving security best practices, regular auditing of access patterns, and continuous refinement of your security controls as your applications and requirements grow.

To enhance your understanding of Amazon Bedrock security implementation, explore these additional resources:

The complete source code and deployment instructions for these solutions are available in our GitHub repository.

We encourage you to explore the repository for detailed implementation guidance and customize the solutions based on your specific requirements using the customization points discussed earlier.

About the authors

Praveen Chamarthi brings exceptional expertise to his role as a Senior AI/ML Specialist at Amazon Web Services, with over two decades in the industry. His passion for Machine Learning and Generative AI, coupled with his specialization in ML inference on Amazon SageMaker and Amazon Bedrock, enables him to empower organizations across the Americas to scale and optimize their ML operations. When he’s not advancing ML workloads, Praveen can be found immersed in books or enjoying science fiction films. Connect with him on LinkedIn to follow his insights.

Praveen Chamarthi brings exceptional expertise to his role as a Senior AI/ML Specialist at Amazon Web Services, with over two decades in the industry. His passion for Machine Learning and Generative AI, coupled with his specialization in ML inference on Amazon SageMaker and Amazon Bedrock, enables him to empower organizations across the Americas to scale and optimize their ML operations. When he’s not advancing ML workloads, Praveen can be found immersed in books or enjoying science fiction films. Connect with him on LinkedIn to follow his insights.

Srikanth Reddy is a Senior AI/ML Specialist with Amazon Web Services. He is responsible for providing deep, domain-specific expertise to enterprise customers, helping them use AWS AI and ML capabilities to their fullest potential. You can find him on LinkedIn.

Srikanth Reddy is a Senior AI/ML Specialist with Amazon Web Services. He is responsible for providing deep, domain-specific expertise to enterprise customers, helping them use AWS AI and ML capabilities to their fullest potential. You can find him on LinkedIn.

Dhawal Patel is a Principal Machine Learning Architect at AWS. He has worked with organizations ranging from large enterprises to mid-sized startups on problems related to distributed computing and artificial intelligence. He focuses on deep learning, including NLP and computer vision domains. He helps customers achieve high-performance model inference on Amazon SageMaker.

Dhawal Patel is a Principal Machine Learning Architect at AWS. He has worked with organizations ranging from large enterprises to mid-sized startups on problems related to distributed computing and artificial intelligence. He focuses on deep learning, including NLP and computer vision domains. He helps customers achieve high-performance model inference on Amazon SageMaker.

Vivek Bhadauria is a Principal Engineer at Amazon Bedrock with almost a decade of experience in building AI/ML services. He now focuses on building generative AI services such as Amazon Bedrock Agents and Amazon Bedrock Guardrails. In his free time, he enjoys biking and hiking.

Vivek Bhadauria is a Principal Engineer at Amazon Bedrock with almost a decade of experience in building AI/ML services. He now focuses on building generative AI services such as Amazon Bedrock Agents and Amazon Bedrock Guardrails. In his free time, he enjoys biking and hiking.

Brandon Rooks Sr. is a Cloud Security Professional with 20+ years of experience in the IT and Cybersecurity field. Brandon joined AWS in 2019, where he dedicates himself to helping customers proactively enhance the security of their cloud applications and workloads. Brandon is a lifelong learner, and holds the CISSP, AWS Security Specialty, and AWS Solutions Architect Professional certifications. Outside of work, he cherishes moments with his family, engaging in various activities such as sports, gaming, music, volunteering, and traveling.

Brandon Rooks Sr. is a Cloud Security Professional with 20+ years of experience in the IT and Cybersecurity field. Brandon joined AWS in 2019, where he dedicates himself to helping customers proactively enhance the security of their cloud applications and workloads. Brandon is a lifelong learner, and holds the CISSP, AWS Security Specialty, and AWS Solutions Architect Professional certifications. Outside of work, he cherishes moments with his family, engaging in various activities such as sports, gaming, music, volunteering, and traveling.

Vikash Garg is a Principal Engineer at Amazon Bedrock with almost 4 years of experience in building AI/ML services. He has a decade of experience in building large-scale systems. He now focuses on building the generative AI service AWS Bedrock Guardrails. In his free time, he enjoys hiking and traveling.

Vikash Garg is a Principal Engineer at Amazon Bedrock with almost 4 years of experience in building AI/ML services. He has a decade of experience in building large-scale systems. He now focuses on building the generative AI service AWS Bedrock Guardrails. In his free time, he enjoys hiking and traveling.