With support from the Accelerating Foundation Models Research (AFMR) grant program, a team of researchers from Microsoft and collaborating institutions has developed an approach to evaluating AI models that predicts how they will perform on unfamiliar tasks and explains why, something current benchmarks struggle to do.

In the paper, “General Scales Unlock AI Evaluation with Explanatory and Predictive Power,” they introduce a methodology that goes beyond measuring overall accuracy. It assesses the knowledge and cognitive abilities a task requires and evaluates them against the model’s capabilities.

ADeLe: An ability-based approach to task evaluation

The framework uses ADeLe (annotated-demand-levels), a technique that assesses how demanding a task is for an AI model by applying measurement scales for 18 types of cognitive and knowledge-based abilities. This difficulty rating is based on a detailed rubric, originally developed for human tasks and shown to work reliably when applied by AI models.

By comparing what a task requires with what a model can do, ADeLe generates an ability profile that not only predicts performance but also explains why a model is likely to succeed or fail—linking outcomes to specific strengths or limitations.

The 18 scales reflect core cognitive abilities (e.g., attention, reasoning), knowledge areas (e.g., natural or social sciences), and other task-related factors (e.g., prevalence of the task on the internet). Each task is rated from 0 to 5 based on how much it draws on a given ability. For example, a simple math question might score 1 on formal knowledge, while one requiring advanced expertise could score 5. Figure 1 illustrates how the full process works—from rating task requirements to generating ability profiles.
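
As a rough illustration of the rating scheme, the sketch below stores a task's 0 to 5 demand levels and compares them with a model's ability scores. The scale names, the `TaskDemands` class, and the `unmet_demands` helper are hypothetical names chosen for this example, and the simple "demand exceeds ability" comparison is an assumption, not the paper's method.

```python
from dataclasses import dataclass

# Hypothetical subset of scale names. The post describes 18 scales;
# only three are named here for illustration.
SCALES = ("attention", "reasoning", "formal_knowledge")


@dataclass(frozen=True)
class TaskDemands:
    """Demand levels (0 to 5) for one task, keyed by scale name."""
    levels: dict

    def __post_init__(self):
        # Validate every rating against the known scales and the 0..5 range.
        for scale, level in self.levels.items():
            if scale not in SCALES:
                raise ValueError(f"unknown scale: {scale}")
            if not 0 <= level <= 5:
                raise ValueError(f"level for {scale} must be in 0..5, got {level}")


def unmet_demands(task, ability_profile):
    """Return {scale: (demand, ability)} where demand exceeds the model's ability.

    Assumption: a scale missing from the ability profile counts as ability 0.
    """
    return {
        scale: (demand, ability_profile.get(scale, 0.0))
        for scale, demand in task.levels.items()
        if demand > ability_profile.get(scale, 0.0)
    }


# Invented example values, mirroring the "simple math question" case above.
simple_math = TaskDemands({"formal_knowledge": 1})
expert_math = TaskDemands({"formal_knowledge": 5, "reasoning": 3})
model_profile = {"formal_knowledge": 3.2, "reasoning": 4.0}

print(unmet_demands(simple_math, model_profile))
print(unmet_demands(expert_math, model_profile))
```

With these invented numbers, the simple question has no unmet demands, while the expert-level question is flagged on formal knowledge only, which is the kind of explanation an ability profile is meant to support.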

To develop this system, the team analyzed 16,000 examples spanning 63 tasks drawn from 20 AI benchmarks, creating a unified measurement approach that works across a wide range of tasks. The paper details how ratings across 18 general scales explain model success or failure and predict performance on new tasks in both familiar and unfamiliar settings.

Evaluation results

Using ADeLe, the team evaluated 20 popular AI benchmarks and uncovered three key findings: 1) Current AI benchmarks have measurement limitations; 2) AI models show distinct patterns of strengths and weaknesses across different capabilities; and 3) ADeLe provides accurate predictions of whether AI systems will succeed or fail on a new task.

1. Revealing hidden flaws in AI testing methods

Many popular AI tests either don’t measure what they claim or only cover a limited range of difficulty levels. For example, the Civil Service Examination benchmark is meant to test logical reasoning, but it also requires other abilities, like specialized knowledge and metacognition. Similarly, TimeQA, designed to test temporal reasoning, only includes medium-difficulty questions—missing both simple and complex challenges.
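
A coverage gap like the one described for TimeQA can be checked by counting how many benchmark items fall at each demand level. The sketch below is a minimal version of that check; the function names and the sample ratings are invented for illustration and are not taken from the paper.

```python
from collections import Counter


def level_coverage(demand_levels, max_level=5):
    """Count items at each demand level from 0 to max_level."""
    counts = Counter(demand_levels)
    return [counts.get(level, 0) for level in range(max_level + 1)]


def missing_levels(demand_levels, max_level=5):
    """List the demand levels that no item in the benchmark covers."""
    coverage = level_coverage(demand_levels, max_level)
    return [level for level, count in enumerate(coverage) if count == 0]


# Invented ratings for a benchmark that only contains medium-difficulty items.
ratings = [2, 3, 3, 2, 3]
print(level_coverage(ratings))
print(missing_levels(ratings))
```

Running the same check once per scale would show which abilities a benchmark exercises and at which difficulty levels it is silent.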

2. Creating detailed AI ability profiles

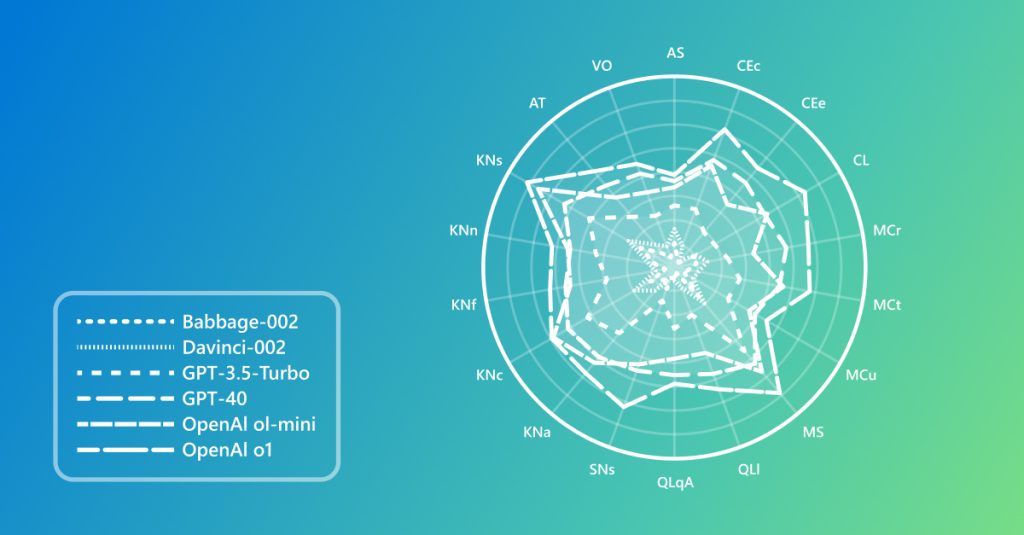

Using the 0–5 rating for each ability, the team created comprehensive ability profiles of 15 LLMs. For each of the 18 abilities measured, they plotted “subject characteristic curves” to show how a model’s success rate changes with task difficulty.

They then calculated a score for each ability—the difficulty level at which a model has a 50% chance of success—and used these results to generate radial plots showing each model’s strengths and weaknesses across the different scales and levels, illustrated in Figure 2.
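
To make the 50% point concrete, the sketch below computes a success rate per demand level and then linearly interpolates the level at which that rate falls to 0.5. Linear interpolation is an assumption made here for simplicity; the post does not say how the curves were fitted, and the function names are hypothetical.

```python
def success_rate_by_level(results):
    """Aggregate (demand_level, succeeded) pairs into {level: success rate}."""
    totals, wins = {}, {}
    for level, succeeded in results:
        totals[level] = totals.get(level, 0) + 1
        wins[level] = wins.get(level, 0) + (1 if succeeded else 0)
    return {level: wins[level] / totals[level] for level in sorted(totals)}


def ability_score(rates, threshold=0.5):
    """Interpolate the demand level where the success rate falls to threshold.

    Returns None when the curve never crosses the threshold from above.
    """
    points = sorted(rates.items())
    for (l0, r0), (l1, r1) in zip(points, points[1:]):
        # Look for a segment that starts at or above the threshold
        # and ends at or below it.
        if r0 >= threshold >= r1 and r0 > r1:
            return l0 + (r0 - threshold) / (r0 - r1) * (l1 - l0)
    return None


# Invented success rates for one model on one ability scale.
curve = {0: 1.0, 1: 1.0, 2: 0.9, 3: 0.7, 4: 0.3, 5: 0.1}
print(ability_score(curve))
```

For the invented curve above, the success rate falls from 0.7 at level 3 to 0.3 at level 4, so the interpolated score lands halfway between them. Repeating this for each scale gives the values that a radial plot would display.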

This analysis revealed the following:

When measured against human performance, AI systems show different strengths and weaknesses across the 18 ability scales.

Newer LLMs generally outperform older ones, though not consistently across all abilities.

Knowledge-related performance depends heavily on model size and training methods.

Reasoning models show clear gains over non-reasoning models in logical thinking, learning and abstraction, and social capabilities, such as inferring the mental states of their users.

Increasing the size of general-purpose models beyond a certain threshold yields only small performance gains.

3. Predicting AI success and failure

In addition to evaluation, the team created a practical prediction system based on demand-level measurements that forecasts whether a model will succeed on specific tasks, even unfamiliar ones.

The system achieved approximately 88% accuracy in predicting the performance of popular models like GPT-4o and LLaMA-3.1-405B, outperforming traditional methods. This makes it possible to anticipate potential failures before deployment, adding the important step of reliability assessment for AI models.
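
The general idea of predicting success from demand levels can be sketched with a small classifier trained on past results. The example below uses a plain logistic regression fitted by gradient descent as a stand-in; the post does not specify the predictor's model type, so this choice, the function names, and the toy data are all assumptions for illustration.

```python
import math


def _sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_logistic(X, y, lr=0.1, epochs=2000):
    """Fit weights and bias by batch gradient descent on the log loss."""
    n_samples, n_features = len(X), len(X[0])
    w = [0.0] * n_features
    b = 0.0
    for _ in range(epochs):
        grad_w = [0.0] * n_features
        grad_b = 0.0
        for xi, yi in zip(X, y):
            err = _sigmoid(b + sum(wj * xj for wj, xj in zip(w, xi))) - yi
            for j in range(n_features):
                grad_w[j] += err * xi[j]
            grad_b += err
        w = [wj - lr * g / n_samples for wj, g in zip(w, grad_w)]
        b -= lr * grad_b / n_samples
    return w, b


def predict_success(w, b, demands):
    """Estimated probability that the model succeeds on a task."""
    return _sigmoid(b + sum(wj * xj for wj, xj in zip(w, demands)))


# Invented training data: each row is a task's demand levels on two
# hypothetical scales, and each label records whether the model succeeded.
X = [[1, 0], [1, 1], [2, 1], [2, 2], [4, 3], [4, 4], [5, 4], [5, 5]]
y = [1, 1, 1, 1, 0, 0, 0, 0]

w, b = train_logistic(X, y)
print(predict_success(w, b, [1, 1]) > 0.5)  # low-demand task
print(predict_success(w, b, [5, 5]) > 0.5)  # high-demand task
```

A predictor like this needs only a new task's demand ratings, not the model's output on it, which is what allows likely failures to be flagged before deployment.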

Looking ahead

ADeLe can be extended to multimodal and embodied AI systems, and it has the potential to serve as a standardized framework for AI research, policymaking, and security auditing.

This technology marks a major step toward a science of AI evaluation, one that offers both clear explanations of system behavior and reliable predictions about performance. It aligns with the vision laid out in a previous Microsoft position paper on the promise of applying psychometrics to AI evaluation and a recent Societal AI white paper emphasizing the importance of AI evaluation.

As general-purpose AI advances faster than traditional evaluation methods, this work lays a timely foundation for making AI assessments more rigorous, transparent, and ready for real-world deployment. The research team is working toward building a collaborative community to strengthen and expand this emerging field.