Large Video Models (LVMs) built upon Large Language Models (LLMs) have shown

promise in video understanding but often suffer from misalignment with human

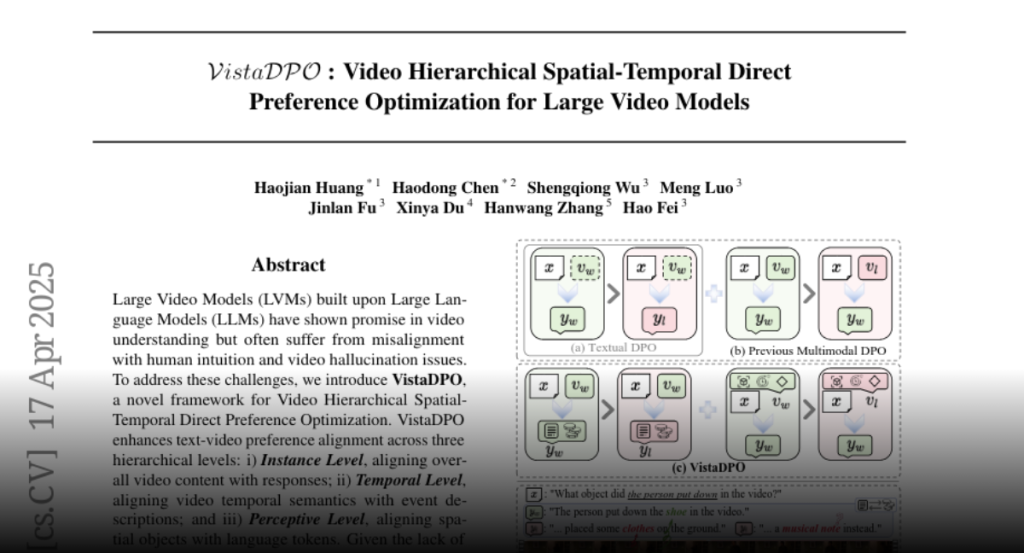

intuition and video hallucination issues. To address these challenges, we

introduce VistaDPO, a novel framework for Video Hierarchical Spatial-Temporal

Direct Preference Optimization. VistaDPO enhances text-video preference

alignment across three hierarchical levels: i) Instance Level, aligning overall

video content with responses; ii) Temporal Level, aligning video temporal

semantics with event descriptions; and iii) Perceptive Level, aligning spatial

objects with language tokens. Given the lack of datasets for fine-grained

video-language preference alignment, we construct VistaDPO-7k, a dataset of

7.2K QA pairs annotated with chosen and rejected responses, along with

spatial-temporal grounding information such as timestamps, keyframes, and

bounding boxes. Extensive experiments on benchmarks such as Video

Hallucination, Video QA, and Captioning performance tasks demonstrate that

VistaDPO significantly improves the performance of existing LVMs, effectively

mitigating video-language misalignment and hallucination. The code and data are

available at https://github.com/HaroldChen19/VistaDPO.
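For readers unfamiliar with the Direct Preference Optimization objective that VistaDPO builds on, the following is a minimal sketch of the standard, single-level DPO loss for one chosen/rejected response pair. It is not the hierarchical VistaDPO objective, which this abstract does not specify. The function name `dpo_loss`, its argument names, and the default `beta=0.1` are illustrative assumptions.

```python
import math


def dpo_loss(policy_chosen_logp, policy_rejected_logp,
             ref_chosen_logp, ref_rejected_logp, beta=0.1):
    """Standard DPO loss for a single preference pair.

    Each argument is the summed log-probability of a full response
    (chosen or rejected) under the policy or the frozen reference model.
    This is the generic DPO formulation, not the VistaDPO variant.
    """
    # Implicit rewards: how far the policy has moved from the reference.
    chosen_margin = policy_chosen_logp - ref_chosen_logp
    rejected_margin = policy_rejected_logp - ref_rejected_logp
    logits = beta * (chosen_margin - rejected_margin)

    # loss = -log(sigmoid(logits)), evaluated in a numerically stable way.
    if logits >= 0:
        return math.log1p(math.exp(-logits))
    return -logits + math.log1p(math.exp(logits))
```

When the policy matches the reference, the loss equals log 2; it falls below that value as the policy assigns relatively more probability to the chosen response than the reference does, and rises above it in the opposite case.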