A survey proposes a systematic taxonomy for evaluating large audio-language models across dimensions including auditory awareness, knowledge reasoning, dialogue ability, and fairness, to address fragmented benchmarks in the field.

With advancements in large audio-language models (LALMs), which enhance large

language models (LLMs) with auditory capabilities, these models are expected to

demonstrate universal proficiency across various auditory tasks. While numerous

benchmarks have emerged to assess LALMs’ performance, they remain fragmented

and lack a structured taxonomy. To bridge this gap, we conduct a comprehensive

survey and propose a systematic taxonomy for LALM evaluations, categorizing

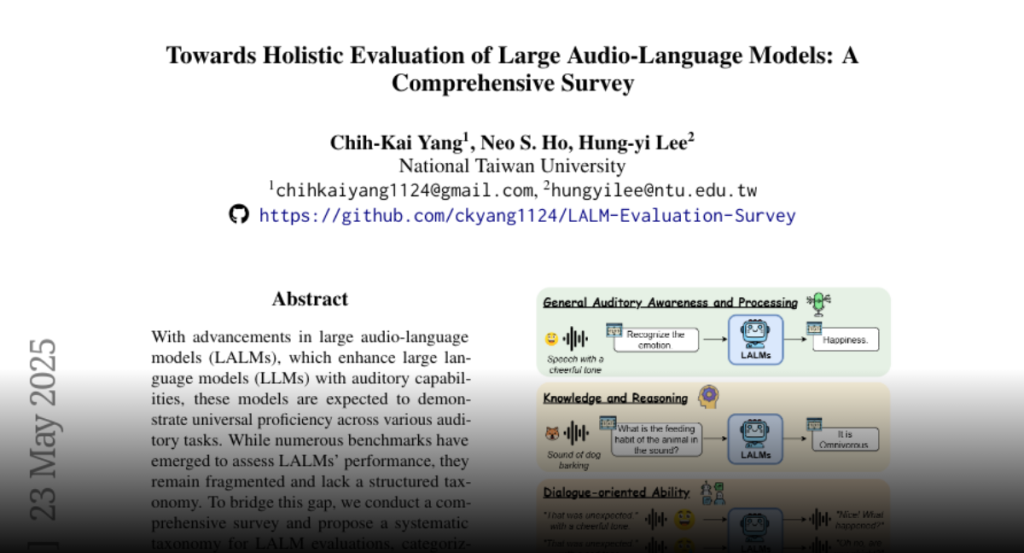

them into four dimensions based on their objectives: (1) General Auditory

Awareness and Processing, (2) Knowledge and Reasoning, (3) Dialogue-oriented

Ability, and (4) Fairness, Safety, and Trustworthiness. We provide detailed

overviews within each category and highlight challenges in this field, offering

insights into promising future directions. To the best of our knowledge, this

is the first survey specifically focused on the evaluations of LALMs, providing

clear guidelines for the community. We will release the collection of the

surveyed papers and actively maintain it to support ongoing advancements in the

field.