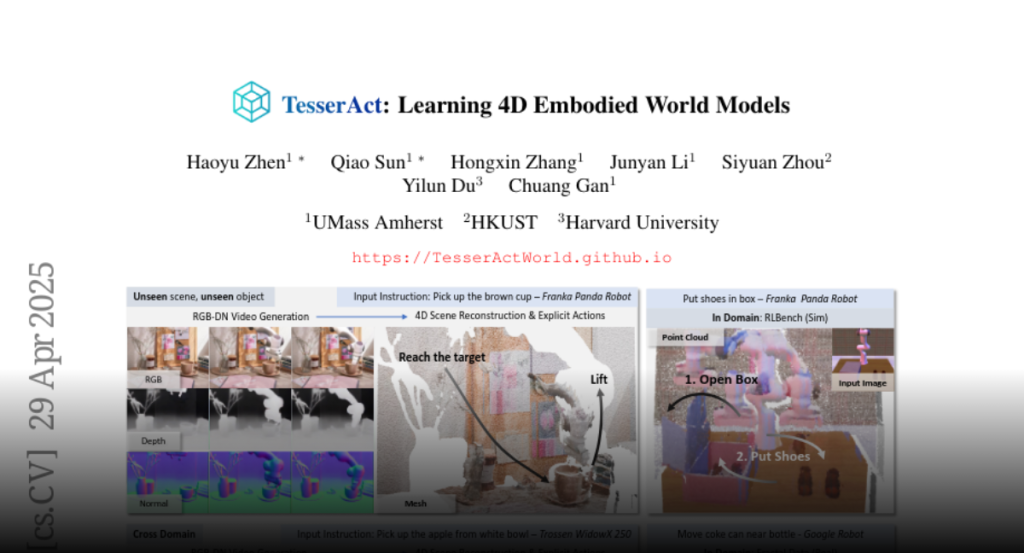

This paper presents an effective approach for learning novel 4D embodied world models, which predict the dynamic evolution of 3D scenes over time in response to an embodied agent's actions while maintaining both spatial and temporal consistency. We propose to learn a 4D world model by training on RGB-DN (RGB, Depth, and Normal) videos. This goes beyond traditional 2D models by incorporating detailed shape, configuration, and temporal changes into the model's predictions, and it also allows us to effectively learn accurate inverse dynamics models for an embodied agent. Specifically, we first extend existing robotic manipulation video datasets with depth and normal annotations produced by off-the-shelf models. Next, we fine-tune a video generation model on this annotated dataset so that it jointly predicts RGB, depth, and normal maps for each frame. We then present an algorithm that directly converts the generated RGB-DN videos into a high-quality 4D scene of the world. Our method ensures temporal and spatial coherence in 4D scene predictions for embodied scenarios, enables novel view synthesis of embodied environments, and facilitates learning policies that significantly outperform those derived from prior video-based world models.
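The abstract does not spell out how generated RGB-DN frames become a 4D scene. As a rough illustration of the geometric starting point, the sketch below uses plain pinhole back-projection to lift each predicted depth map to a camera-space point cloud, derives surface normals from neighbouring points, and measures how well they agree with predicted normals. This is a generic technique, not the paper's conversion algorithm. It assumes known intrinsics (`fx`, `fy`, `cx`, `cy`), metric depth, and a static camera. All function names (`backproject`, `normals_from_points`, `normal_consistency`, `lift_video`) are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def backproject(depth: Sequence[Sequence[float]], fx: float, fy: float,
                cx: float, cy: float) -> List[List[Vec3]]:
    """Lift an HxW depth map to camera-space 3D points (pinhole model, assumed intrinsics)."""
    points = []
    for v, row in enumerate(depth):
        points.append([((u - cx) * d / fx, (v - cy) * d / fy, d)
                       for u, d in enumerate(row)])
    return points


def _sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def _normalize(a: Vec3) -> Vec3:
    n = math.sqrt(a[0] ** 2 + a[1] ** 2 + a[2] ** 2)
    return (a[0] / n, a[1] / n, a[2] / n) if n > 0 else (0.0, 0.0, 0.0)


def normals_from_points(points: List[List[Vec3]]) -> List[List[Vec3]]:
    """Finite-difference normals, oriented toward the camera (-Z); output is (H-1)x(W-1)."""
    h, w = len(points), len(points[0])
    normals = []
    for v in range(h - 1):
        row = []
        for u in range(w - 1):
            du = _sub(points[v][u + 1], points[v][u])  # step along image x
            dv = _sub(points[v + 1][u], points[v][u])  # step along image y
            row.append(_normalize(_cross(dv, du)))
        normals.append(row)
    return normals


def normal_consistency(pred: List[List[Vec3]], derived: List[List[Vec3]]) -> float:
    """Mean cosine similarity between predicted and depth-derived unit normals."""
    total, count = 0.0, 0
    for prow, drow in zip(pred, derived):
        for p, d in zip(prow, drow):
            total += p[0] * d[0] + p[1] * d[1] + p[2] * d[2]
            count += 1
    return total / count if count else 0.0


def lift_video(depth_frames, fx: float, fy: float, cx: float, cy: float):
    """Naive 4D scene: one camera-space point cloud per timestep (no fusion across frames)."""
    return [backproject(d, fx, fy, cx, cy) for d in depth_frames]
```

For example, a flat 3x3 depth map at 2.0 with `fx = fy = cx = cy = 1.0` back-projects to points whose derived normals are all `(0, 0, -1)`, so comparing them against predicted normals of `(0, 0, -1)` gives a consistency of 1.0. A real pipeline would also have to handle camera motion, fuse frames into a shared world frame, and use the predicted normals to refine noisy depth. This sketch does none of that.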