TeEFusion enhances text-to-image synthesis by efficiently incorporating classifier-free guidance into text embeddings, reducing inference costs without sacrificing image quality.

Recent advances in text-to-image synthesis largely benefit from sophisticated

sampling strategies and classifier-free guidance (CFG) to ensure high-quality

generation. However, CFG’s reliance on two forward passes, especially when

combined with intricate sampling algorithms, results in prohibitively high

inference costs. To address this, we introduce TeEFusion (Text

Embeddings Fusion), a novel and efficient distillation method

that directly incorporates the guidance magnitude into the text embeddings and

distills the teacher model’s complex sampling strategy. By simply fusing

conditional and unconditional text embeddings using linear operations,

TeEFusion reconstructs the desired guidance without adding extra parameters,

simultaneously enabling the student model to learn from the teacher’s output

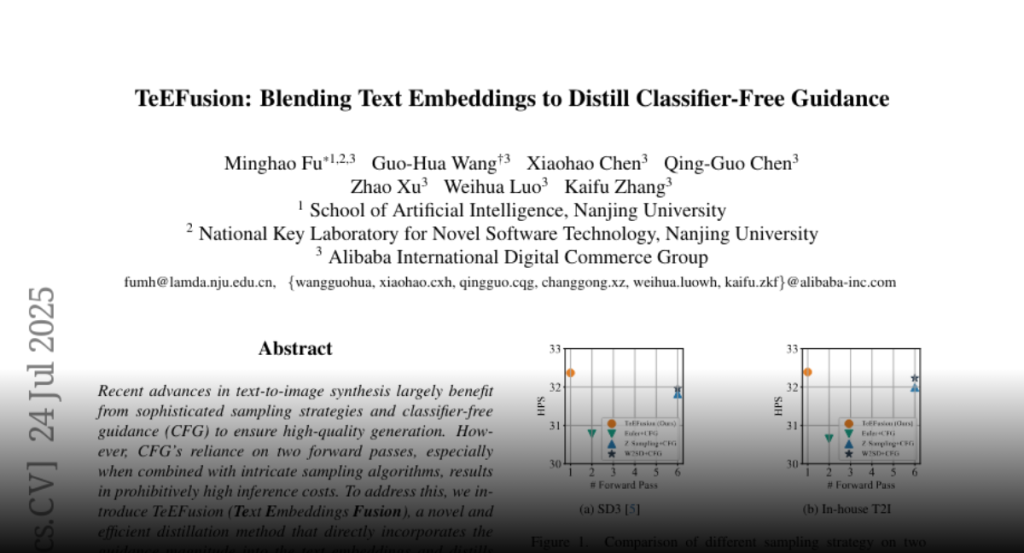

produced via its sophisticated sampling approach. Extensive experiments on

state-of-the-art models such as SD3 demonstrate that our method allows the

student to closely mimic the teacher’s performance with a far simpler and more

efficient sampling strategy. Consequently, the student model achieves inference

speeds up to 6times faster than the teacher model, while maintaining image

quality at levels comparable to those obtained through the teacher’s complex

sampling approach. The code is publicly available at

https://github.com/AIDC-AI/TeEFusion{github.com/AIDC-AI/TeEFusion}.
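To make the idea of fusing conditional and unconditional text embeddings with linear operations more concrete, here is a minimal sketch in plain Python. It is an illustration under stated assumptions, not the authors' implementation: the abstract does not give the exact fusion formula, so `fuse_embeddings` simply reuses the standard CFG extrapolation in embedding space, and `toy_denoiser` is a hypothetical affine stand-in for a real diffusion model. All function names are invented for this example.

```python
from typing import List

Vector = List[float]


def cfg_combine(out_cond: Vector, out_uncond: Vector, w: float) -> Vector:
    """Standard CFG: extrapolate from the unconditional output toward the
    conditional one. Needs two model outputs, hence two forward passes."""
    return [u + w * (c - u) for c, u in zip(out_cond, out_uncond)]


def fuse_embeddings(emb_cond: Vector, emb_uncond: Vector, w: float) -> Vector:
    """ASSUMED fusion rule: the same linear extrapolation, applied to the
    text embeddings instead of the model outputs. No extra parameters."""
    return [u + w * (c - u) for c, u in zip(emb_cond, emb_uncond)]


def toy_denoiser(emb: Vector) -> Vector:
    """Hypothetical affine 'model' used only to show the equivalence below.
    A real diffusion model is nonlinear in its text conditioning."""
    return [2.0 * e + 1.0 for e in emb]


emb_cond = [1.0, -0.5, 2.0]    # embedding of the prompt
emb_uncond = [0.0, 0.25, 1.0]  # embedding of the empty prompt
w = 5.0                        # guidance scale

# Teacher-style guidance: two forward passes, combined afterwards.
two_pass = cfg_combine(toy_denoiser(emb_cond), toy_denoiser(emb_uncond), w)

# Fusion-style guidance: combine the embeddings first, then one forward pass.
one_pass = toy_denoiser(fuse_embeddings(emb_cond, emb_uncond, w))

print(two_pass)
print(one_pass)
```

For this affine toy model the two results coincide exactly, because the extrapolation weights sum to one. A real network is nonlinear in its conditioning, so the match would not hold automatically, which is consistent with the abstract's description of distilling the student against the teacher's output.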