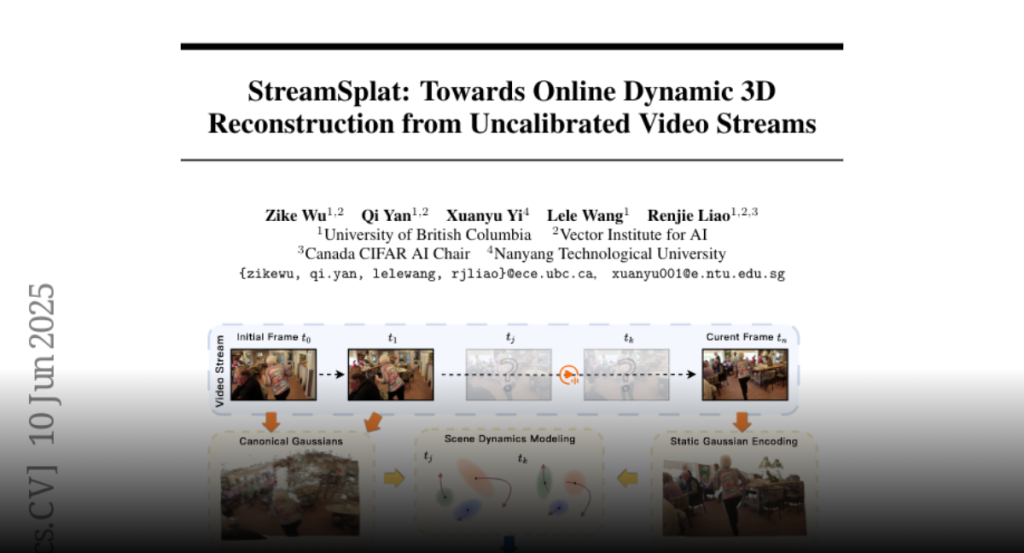

StreamSplat, a fully feed-forward framework, addresses real-time 3D scene reconstruction from uncalibrated video with accurate dynamics and long-term stability.

Real-time reconstruction of dynamic 3D scenes from uncalibrated video streams is crucial for numerous real-world applications. However, existing methods struggle to jointly address three key challenges: 1) processing uncalibrated inputs in real time, 2) accurately modeling dynamic scene evolution, and 3) maintaining long-term stability and computational efficiency. To this end, we introduce StreamSplat, the first fully feed-forward framework that transforms uncalibrated video streams of arbitrary length into dynamic 3D Gaussian Splatting (3DGS) representations in an online manner, capable of recovering scene dynamics from temporally local observations. We propose two key technical innovations: a probabilistic sampling mechanism in the static encoder for 3DGS position prediction, and a bidirectional deformation field in the dynamic decoder that enables robust and efficient dynamic modeling. Extensive experiments on static and dynamic benchmarks demonstrate that StreamSplat consistently outperforms prior works in both reconstruction quality and dynamic scene modeling, while uniquely supporting online reconstruction of arbitrarily long video streams. Code and models are available at https://github.com/nickwzk/StreamSplat.
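The abstract names a probabilistic sampling mechanism for 3DGS position prediction but does not describe how it works. The following is a minimal, generic sketch of one way such a mechanism can be built, assuming the encoder outputs per-pixel logits over a fixed set of depth bins. The helper names (`softmax`, `sample_gaussian_center`), the depth-bin parameterization, and returning the sampled probability (for example, to weight a Gaussian's opacity) are hypothetical choices for illustration, not StreamSplat's actual implementation.

```python
import math
import random


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def sample_gaussian_center(ray_origin, ray_dir, depth_bins, logits, rng):
    """Hypothetical sketch: place one 3D Gaussian center on a pixel ray.

    Assumes the encoder predicts `logits` over candidate depths `depth_bins`.
    A depth is sampled from the resulting categorical distribution instead of
    being regressed directly, and the center is origin + depth * direction.
    """
    probs = softmax(logits)
    # Draw one bin index according to the predicted probabilities.
    idx = rng.choices(range(len(depth_bins)), weights=probs, k=1)[0]
    depth = depth_bins[idx]
    center = tuple(o + depth * d for o, d in zip(ray_origin, ray_dir))
    # Return the sampled bin's probability as well; a model could, for
    # example, use it as an opacity weight (an assumption, not from the paper).
    return center, probs[idx]


if __name__ == "__main__":
    rng = random.Random(0)
    center, p = sample_gaussian_center(
        (0.0, 0.0, 0.0), (0.0, 0.0, 1.0), [1.0, 2.0, 3.0], [0.0, 100.0, 0.0], rng
    )
    print(center, p)
```

Sampling from a distribution over depths, rather than regressing a single value, keeps multiple depth hypotheses alive when a pixel's depth is ambiguous; how StreamSplat parameterizes and trains its sampler is described in the full paper, not in this sketch.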