SonicMaster is a unified generative model that improves music audio quality, correcting a wide range of artifacts through text-based control and a flow-matching generative training paradigm.

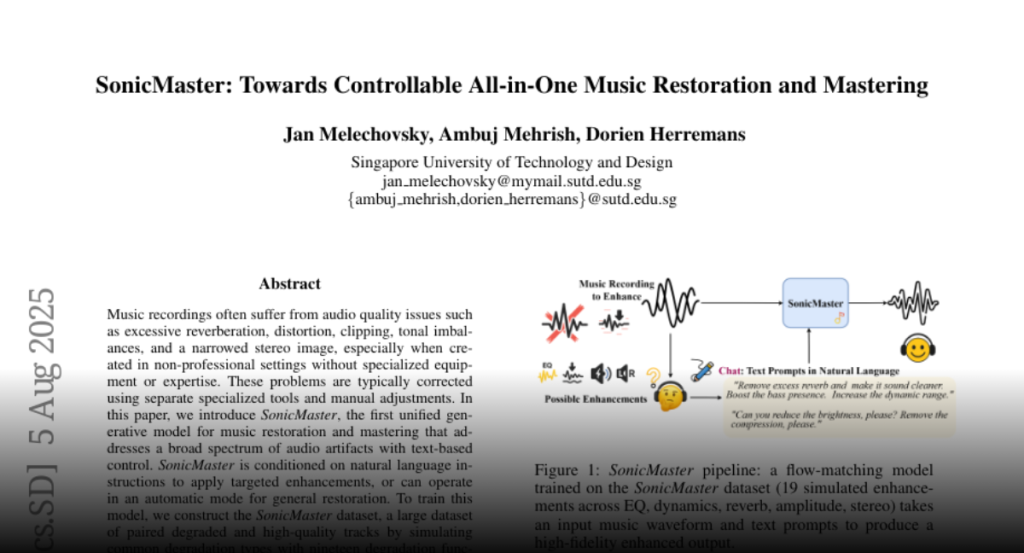

Music recordings often suffer from audio quality issues such as excessive reverberation, distortion, clipping, tonal imbalances, and a narrowed stereo image, especially when created in non-professional settings without specialized equipment or expertise. These problems are typically corrected using separate specialized tools and manual adjustments. In this paper, we introduce SonicMaster, the first unified generative model for music restoration and mastering that addresses a broad spectrum of audio artifacts with text-based control. SonicMaster is conditioned on natural language instructions to apply targeted enhancements, or can operate in an automatic mode for general restoration. To train this model, we construct the SonicMaster dataset, a large dataset of paired degraded and high-quality tracks created by simulating common degradation types with nineteen degradation functions belonging to five enhancement groups: equalization, dynamics, reverb, amplitude, and stereo. Our approach leverages a flow-matching generative training paradigm to learn an audio transformation that maps degraded inputs to their cleaned, mastered versions, guided by text prompts. Objective audio quality metrics demonstrate that SonicMaster significantly improves sound quality across all artifact categories. Furthermore, subjective listening tests confirm that listeners prefer SonicMaster’s enhanced outputs over the original degraded audio, highlighting the effectiveness of our unified approach.
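The abstract names two ingredients, paired training data built from degradation functions and a flow-matching objective, without giving implementation details. The sketch below is a minimal, hypothetical illustration of both on plain Python lists. It assumes a rectified-flow style linear path from the degraded signal `x0` to the clean signal `x1`, with target velocity `x1 - x0`; SonicMaster may instead start from noise and condition on the degraded audio, and its neural network, latent representation, and text conditioning are all omitted here. Hard clipping stands in for just one of the nineteen degradation functions, and the names `hard_clip`, `flow_matching_pair`, `mse`, and `euler_sample` are illustrative, not taken from the paper.

```python
import math


def hard_clip(signal, threshold=0.5):
    """Hypothetical degradation: limit every sample to [-threshold, threshold]."""
    return [max(-threshold, min(threshold, s)) for s in signal]


def flow_matching_pair(x0, x1, t):
    """Return the point on the assumed linear path and its target velocity.

    x_t = (1 - t) * x0 + t * x1,   target velocity v = x1 - x0
    """
    xt = [(1.0 - t) * a + t * b for a, b in zip(x0, x1)]
    v = [b - a for a, b in zip(x0, x1)]
    return xt, v


def mse(pred, target):
    """Mean squared error, the usual flow-matching regression loss."""
    return sum((p - q) ** 2 for p, q in zip(pred, target)) / len(target)


def euler_sample(x0, velocity_fn, steps=10):
    """Integrate dx/dt = velocity_fn(x, t) from t = 0 to t = 1 with Euler steps."""
    x = list(x0)
    dt = 1.0 / steps
    for i in range(steps):
        t = i * dt
        v = velocity_fn(x, t)
        x = [xi + dt * vi for xi, vi in zip(x, v)]
    return x


# Toy usage: a clipped sine wave plays the degraded input, the clean sine the target.
clean = [math.sin(2 * math.pi * k / 16) for k in range(16)]
degraded = hard_clip(clean, threshold=0.5)
xt, v_target = flow_matching_pair(degraded, clean, t=0.3)
# Loss of a trivial "model" that always predicts zero velocity.
loss_zero_model = mse([0.0] * len(v_target), v_target)
```

In training, a network would be regressed onto `v_target` at randomly sampled `t` (and, in SonicMaster's case, a text prompt). At inference, `euler_sample` integrates the learned velocity field; with an oracle velocity of `clean - degraded`, it recovers the clean signal up to floating-point error.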