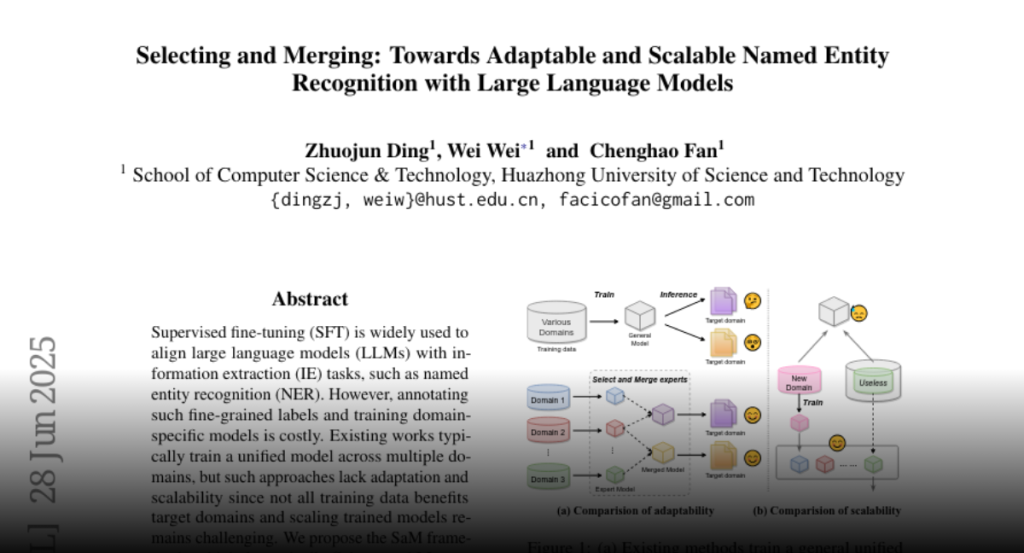

A framework that dynamically selects and merges pre-trained domain-specific models for efficient and scalable information extraction tasks.

Supervised fine-tuning (SFT) is widely used to align large language models (LLMs) with information extraction (IE) tasks, such as named entity recognition (NER). However, annotating such fine-grained labels and training domain-specific models is costly. Existing works typically train a unified model across multiple domains, but such approaches lack adaptability and scalability: not all training data benefits the target domains, and scaling trained models remains challenging. We propose the SaM framework, which dynamically Selects and Merges expert models at inference time. Specifically, for a target domain, we select domain-specific experts pre-trained on existing domains based on (i) their domain similarity to the target domain and (ii) their performance on sampled instances. The selected experts are then merged to create task-specific models optimized for the target domain. By dynamically merging the experts that benefit the target domain, we improve generalization across various domains without extra training. Additionally, experts can be added or removed conveniently, which makes the framework highly scalable. Extensive experiments on multiple benchmarks demonstrate the framework's effectiveness: it outperforms the unified model by an average of 10%. We further provide insights into potential improvements, practical experience, and extensions of our framework.
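The two selection criteria can be sketched as follows. This is a minimal illustration under stated assumptions, not SaM's actual implementation. It assumes each expert's training domain is summarized by an embedding vector, that "domain similarity" is cosine similarity between embeddings, that "performance on sampled instances" is a score returned by a caller-supplied `evaluate` function (for example, F1 on a few labeled target examples), and that the two criteria are combined by taking the union of their top-k lists. All names (`select_experts`, `k_sim`, `k_perf`, the toy domains, embeddings, and scores) are hypothetical.

```python
# Hypothetical sketch of two-criterion expert selection; not the SaM authors' code.
# Assumptions: domains are summarized by embedding vectors, similarity is cosine,
# and the two criteria are combined by union of their top-k lists.
import math
from typing import Callable, Dict, List, Sequence


def cosine(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine similarity between two equal-length vectors (0.0 if either is zero)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def select_by_similarity(target_emb: Sequence[float],
                         expert_embs: Dict[str, Sequence[float]], k: int) -> List[str]:
    """Criterion (i): the k experts whose domain embedding is closest to the target's."""
    ranked = sorted(expert_embs, key=lambda name: cosine(target_emb, expert_embs[name]),
                    reverse=True)
    return ranked[:k]


def select_by_performance(experts: List[str], evaluate: Callable[[str], float],
                          k: int) -> List[str]:
    """Criterion (ii): the k experts scoring best on sampled target instances."""
    return sorted(experts, key=evaluate, reverse=True)[:k]


def select_experts(target_emb: Sequence[float], expert_embs: Dict[str, Sequence[float]],
                   evaluate: Callable[[str], float], k_sim: int = 2,
                   k_perf: int = 2) -> List[str]:
    """Union of both criteria (assumed), order-preserving and without duplicates."""
    by_sim = select_by_similarity(target_emb, expert_embs, k_sim)
    by_perf = select_by_performance(list(expert_embs), evaluate, k_perf)
    return list(dict.fromkeys(by_sim + by_perf))


# Toy usage: made-up embeddings and sampled-instance scores, for illustration only.
expert_embs = {
    "news": [1.0, 0.0, 0.0],
    "biomedical": [0.0, 1.0, 0.0],
    "music": [0.0, 0.0, 1.0],
    "science": [0.1, 0.9, 0.2],
}
target = [0.0, 1.0, 0.1]
sampled_f1 = {"news": 0.62, "biomedical": 0.55, "music": 0.30, "science": 0.58}

selected = select_experts(target, expert_embs, sampled_f1.get, k_sim=2, k_perf=1)
print(selected)  # ['biomedical', 'science', 'news']
```

Here "biomedical" and "science" are chosen for their similarity to the target domain, while "news" enters only because it scores best on the sampled instances, which is the kind of complementary signal that motivates using two criteria.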
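The merging step can be sketched with weighted parameter averaging, a simple stand-in chosen here because the abstract does not say which merging method SaM uses. The sketch assumes all experts share one architecture (identical parameter names and shapes) and represents each parameter as a flat list of floats rather than a real tensor. `merge_experts`, the `Params` alias, and the toy parameter names are hypothetical.

```python
# Hypothetical sketch: weighted parameter averaging as a stand-in merging method.
# SaM's actual merging technique is not specified in the abstract.
from typing import Dict, List, Optional

Params = Dict[str, List[float]]  # parameter name -> flattened values (toy stand-in for tensors)


def merge_experts(experts: List[Params], weights: Optional[List[float]] = None) -> Params:
    """Weighted average of expert parameters that share one architecture."""
    if not experts:
        raise ValueError("need at least one expert to merge")
    if weights is None:
        weights = [1.0] * len(experts)  # default: uniform averaging
    if len(weights) != len(experts):
        raise ValueError("one weight per expert is required")
    total = sum(weights)
    if total <= 0:
        raise ValueError("weights must sum to a positive value")
    norm = [w / total for w in weights]  # normalize so the weights sum to 1

    reference = experts[0]
    merged: Params = {}
    for name, values in reference.items():
        # Every expert must expose the same parameter names and shapes.
        if any(name not in e or len(e[name]) != len(values) for e in experts):
            raise ValueError(f"parameter {name!r} differs across experts")
        columns = zip(*(e[name] for e in experts))  # i-th entry of every expert
        merged[name] = [sum(w * v for w, v in zip(norm, col)) for col in columns]
    return merged


# Toy usage: two experts with made-up values; the second gets 3x the weight.
news = {"ner_head.w": [1.0, 2.0], "ner_head.b": [0.0]}
bio = {"ner_head.w": [3.0, 6.0], "ner_head.b": [1.0]}
merged = merge_experts([news, bio], weights=[1.0, 3.0])
print(merged)  # {'ner_head.w': [2.5, 5.0], 'ner_head.b': [0.75]}
```

Because merging here operates only on whichever experts are passed in, adding or removing an expert amounts to changing the input list, with no retraining. How the weights would be set in practice (for example, from the domain-similarity scores used during selection) is an assumption of this sketch.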