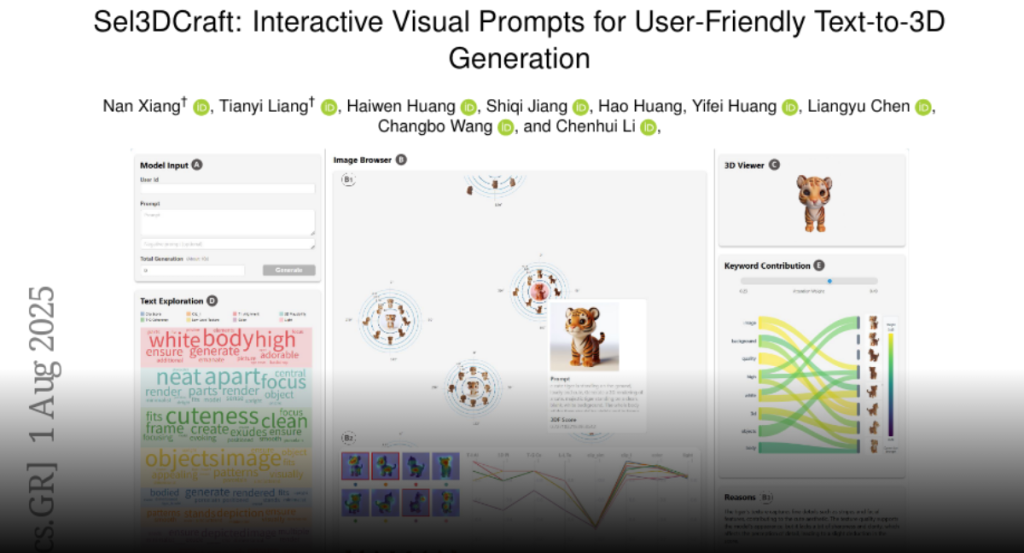

Sel3DCraft enhances text-to-3D generation by combining a dual-branch retrieval and generation system, multi-view hybrid scoring with MLLMs, and prompt-driven visual analytics to better support designer creativity.

Text-to-3D (T23D) generation has transformed digital content creation, yet remains bottlenecked by blind trial-and-error prompting processes that yield unpredictable results. While visual prompt engineering has advanced in text-to-image domains, its application to 3D generation presents unique challenges requiring multi-view consistency evaluation and spatial understanding. We present Sel3DCraft, a visual prompt engineering system for T23D that transforms unstructured exploration into a guided visual process. Our approach introduces three key innovations: a dual-branch structure combining retrieval and generation for diverse candidate exploration; a multi-view hybrid scoring approach that leverages MLLMs with innovative high-level metrics to assess 3D models with human-expert consistency; and a prompt-driven visual analytics suite that enables intuitive defect identification and refinement. Extensive testing and user studies demonstrate that Sel3DCraft surpasses other T23D systems in supporting creativity for designers.