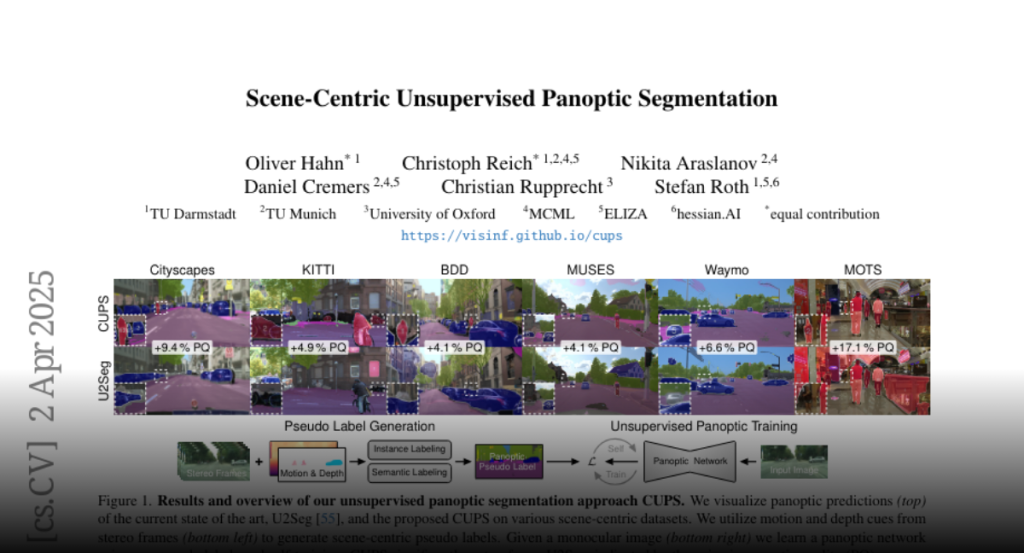

Unsupervised panoptic segmentation aims to partition an image into
semantically meaningful regions and distinct object instances without training
on manually annotated data. In contrast to prior work on unsupervised panoptic
scene understanding, we eliminate the need for object-centric training data,
enabling the unsupervised understanding of complex scenes. To that end, we
present the first unsupervised panoptic method that trains directly on
scene-centric imagery. In particular, we propose an approach to obtain
high-resolution panoptic pseudo labels on complex scene-centric data by
combining visual representations, depth, and motion cues. Combining pseudo-label
training with a panoptic self-training strategy yields a novel approach that
accurately predicts the panoptic segmentation of complex scenes without
requiring any human annotations. Our approach significantly improves panoptic
quality (PQ), e.g., surpassing the recent state of the art in unsupervised
panoptic segmentation on Cityscapes by 9.4 percentage points in PQ.
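
For readers unfamiliar with the metric, PQ is commonly defined per class as the sum of IoUs over matched (true-positive) segment pairs divided by |TP| + 0.5|FP| + 0.5|FN|, where a predicted and a ground-truth segment match if their IoU exceeds 0.5. The following is a minimal sketch of that standard definition, not code from this work; the function name `panoptic_quality`, its arguments, and the sample values are hypothetical and chosen only for illustration.

```python
from typing import Sequence


def panoptic_quality(matched_ious: Sequence[float], num_fp: int, num_fn: int) -> float:
    """Per-class PQ from already-matched segments (hypothetical helper).

    matched_ious: IoU of each true-positive pair (assumed to be > 0.5).
    num_fp: number of predicted segments without a match.
    num_fn: number of ground-truth segments without a match.
    """
    num_tp = len(matched_ious)
    denominator = num_tp + 0.5 * num_fp + 0.5 * num_fn
    if denominator == 0:
        # No segments of this class in prediction or ground truth.
        return 0.0
    return sum(matched_ious) / denominator


# Toy values (made up): two matches, one false positive, one false negative.
pq = panoptic_quality([0.8, 0.6], num_fp=1, num_fn=1)
print(f"PQ = {100 * pq:.1f}%")
```

Because PQ is itself reported as a percentage, a gain is stated in percentage points, i.e., as the absolute difference between two PQ values rather than a relative change.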