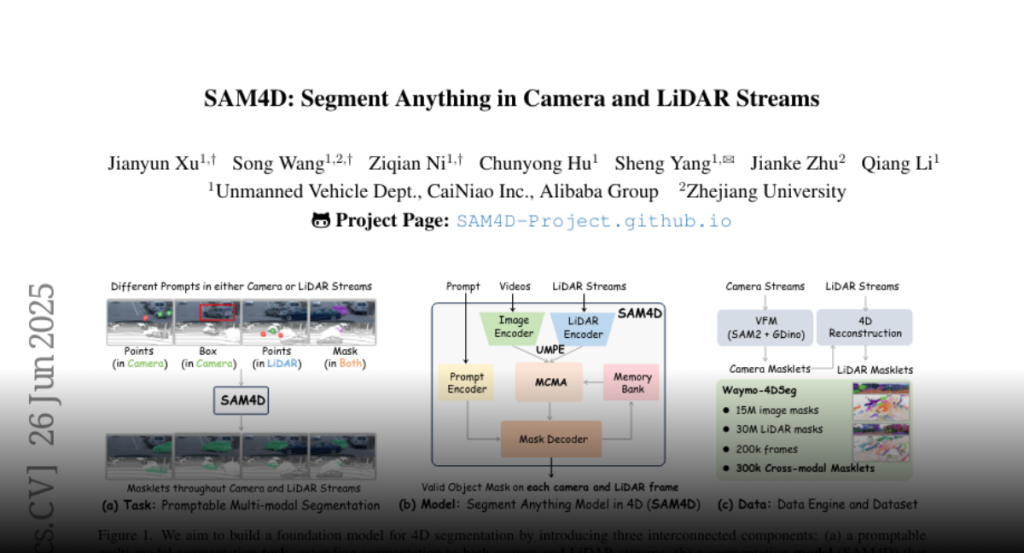

SAM4D is a multi-modal and temporal foundation model for segmentation in autonomous driving. It uses Unified Multi-modal Positional Encoding and Motion-aware Cross-modal Memory Attention, and is accompanied by a multi-modal automated data engine that generates pseudo-labels.

We present SAM4D, a multi-modal and temporal foundation model designed for promptable segmentation across camera and LiDAR streams. Unified Multi-modal Positional Encoding (UMPE) is introduced to align camera and LiDAR features in a shared 3D space, enabling seamless cross-modal prompting and interaction. Additionally, we propose Motion-aware Cross-modal Memory Attention (MCMA), which leverages ego-motion compensation to enhance temporal consistency and long-horizon feature retrieval, ensuring robust segmentation across dynamically changing autonomous driving scenes. To avoid annotation bottlenecks, we develop a multi-modal automated data engine that synergizes VFM-driven video masklets, spatiotemporal 4D reconstruction, and cross-modal masklet fusion. This framework generates camera-LiDAR aligned pseudo-labels orders of magnitude faster than human annotation while preserving VFM-derived semantic fidelity in point cloud representations. We conduct extensive experiments on the constructed Waymo-4DSeg, which demonstrate the strong cross-modal segmentation ability of the proposed SAM4D and its great potential for data annotation.
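The abstract does not specify how MCMA performs ego-motion compensation. As a rough illustration of the general idea only, the sketch below re-expresses 3D points observed in a previous ego frame in the current ego frame, given the two vehicle poses as 4x4 rigid transforms. All names here (`pose_from_yaw`, `invert_rigid`, `compensate_ego_motion`) are hypothetical and are not taken from SAM4D; the planar, yaw-only pose is a simplifying assumption for the example.

```python
import math


def mat_mul(a, b):
    """Multiply two 4x4 matrices stored as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]


def invert_rigid(T):
    """Invert a rigid transform [R | t] as [R^T | -R^T t]."""
    R_t = [[T[j][i] for j in range(3)] for i in range(3)]
    t = [T[i][3] for i in range(3)]
    t_inv = [-sum(R_t[i][k] * t[k] for k in range(3)) for i in range(3)]
    return [R_t[i] + [t_inv[i]] for i in range(3)] + [[0.0, 0.0, 0.0, 1.0]]


def pose_from_yaw(x, y, z, yaw):
    """Hypothetical helper: world-from-ego pose for a yaw-only rotation."""
    c, s = math.cos(yaw), math.sin(yaw)
    return [[c, -s, 0.0, x],
            [s, c, 0.0, y],
            [0.0, 0.0, 1.0, z],
            [0.0, 0.0, 0.0, 1.0]]


def compensate_ego_motion(points_prev, world_from_prev, world_from_cur):
    """Map points from the previous ego frame into the current ego frame.

    Assumes the points belong to static scene content, so only the
    vehicle's own motion has to be undone.
    """
    cur_from_prev = mat_mul(invert_rigid(world_from_cur), world_from_prev)
    out = []
    for x, y, z in points_prev:
        p = (x, y, z, 1.0)
        out.append(tuple(sum(cur_from_prev[i][k] * p[k] for k in range(4))
                         for i in range(3)))
    return out


# The vehicle drives 2 m forward along x between the two frames.
prev_pose = pose_from_yaw(0.0, 0.0, 0.0, 0.0)
cur_pose = pose_from_yaw(2.0, 0.0, 0.0, 0.0)
moved = compensate_ego_motion([(10.0, 0.0, 0.0)], prev_pose, cur_pose)
print(moved)  # the static point is now 8 m ahead of the vehicle
```

In a memory-attention setting, a transform of this kind would let positions stored with past-frame features be compared against current-frame positions in one consistent coordinate system; how SAM4D actually wires this into attention is not described in the abstract.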