RedOne, a domain-specific LLM, enhances performance across multiple SNS tasks through a three-stage training strategy, improving generalization and reducing harmful content exposure.

As a primary medium for modern information dissemination, social networking

services (SNS) have experienced rapid growth, which has proposed significant

challenges for platform content management and interaction quality improvement.

Recently, the development of large language models (LLMs) has offered potential

solutions but existing studies focus on isolated tasks, which not only

encounter diminishing benefit from the data scaling within individual scenarios

but also fail to flexibly adapt to diverse real-world context. To address these

challenges, we introduce RedOne, a domain-specific LLM designed to break the

performance bottleneck of single-task baselines and establish a comprehensive

foundation for the SNS. RedOne was developed through a three-stage training

strategy consisting of continue pretraining, supervised fine-tuning, and

preference optimization, using a large-scale real-world dataset. Through

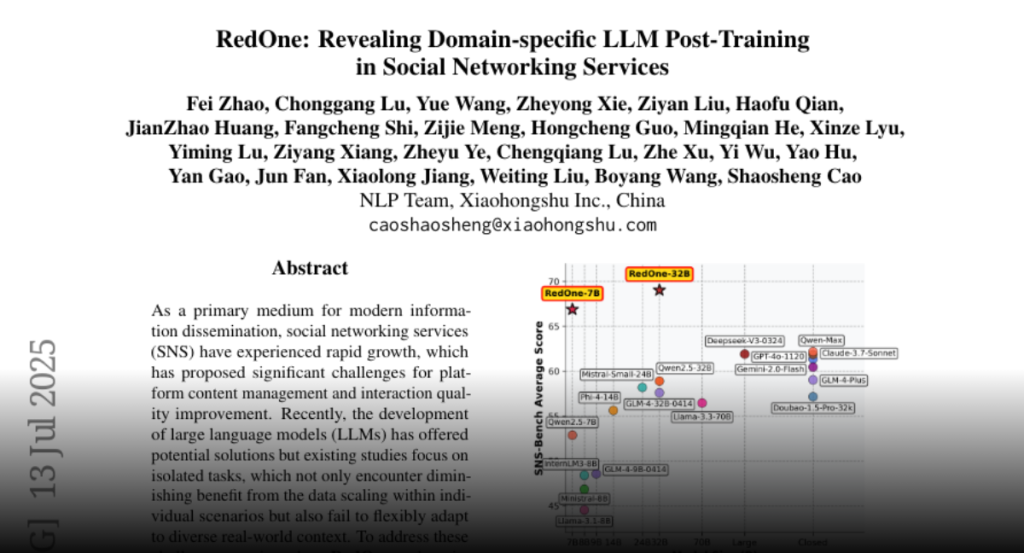

extensive experiments, RedOne maintains strong general capabilities, and

achieves an average improvement up to 14.02% across 8 major SNS tasks and 7.56%

in SNS bilingual evaluation benchmark, compared with base models. Furthermore,

through online testing, RedOne reduced the exposure rate in harmful content

detection by 11.23% and improved the click page rate in post-view search by

14.95% compared with single-tasks finetuned baseline models. These results

establish RedOne as a robust domain-specific LLM for SNS, demonstrating

excellent generalization across various tasks and promising applicability in

real-world scenarios.