A multimodal agent transforms documents into narrated presentation videos and is evaluated with a framework built on vision-language models.

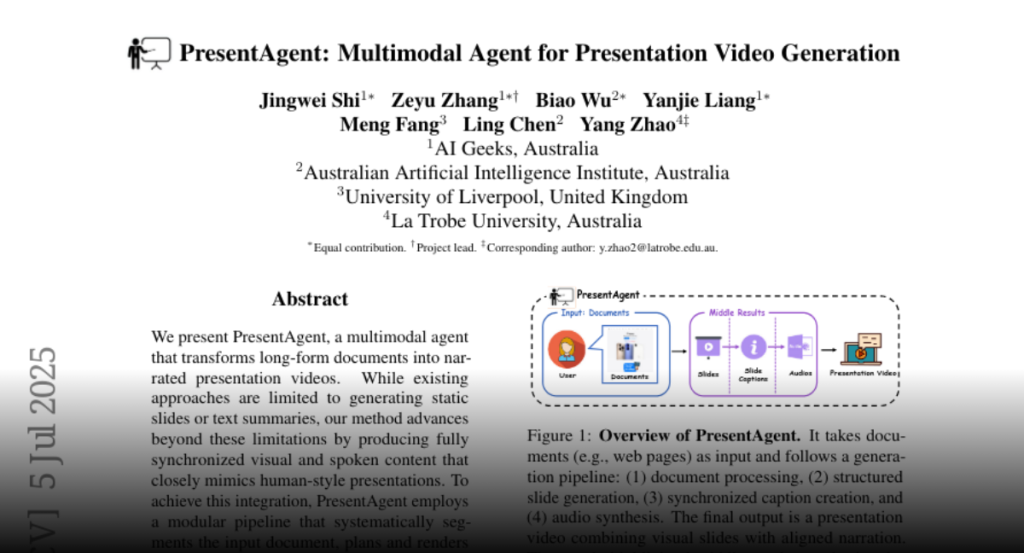

We present PresentAgent, a multimodal agent that transforms long-form documents into narrated presentation videos. Existing approaches are limited to generating static slides or text summaries; our method instead produces synchronized visual and spoken content that closely mimics human-style presentations. To achieve this, PresentAgent employs a modular pipeline that segments the input document, plans and renders slide-style visual frames, generates contextual spoken narration with large language models and Text-to-Speech models, and composes the final video with precise audio-visual alignment. Because such multimodal outputs are difficult to evaluate, we introduce PresentEval, a unified assessment framework powered by Vision-Language Models that uses prompt-based evaluation to score videos along three dimensions: content fidelity, visual clarity, and audience comprehension. Experiments on a curated dataset of 30 document-presentation pairs show that PresentAgent approaches human-level quality across all evaluation metrics. These results highlight the potential of controllable multimodal agents for transforming static textual materials into dynamic, effective, and accessible presentation formats. Code will be available at https://github.com/AIGeeksGroup/PresentAgent.
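
The modular pipeline described above (segment, plan, narrate, align) can be illustrated with a minimal sketch. This is not PresentAgent's implementation: the names `Slide`, `segment`, `plan_slide`, `estimate_duration`, and `build_timeline` are hypothetical, the planner is a trivial stand-in for an LLM call, and the narration length is estimated from an assumed speaking rate of 150 words per minute rather than measured from synthesized audio.

```python
from dataclasses import dataclass


@dataclass
class Slide:
    title: str
    narration: str
    start: float = 0.0  # seconds from the start of the video
    end: float = 0.0


def segment(document: str) -> list[str]:
    """Split on blank lines (a stand-in for real document segmentation)."""
    return [p.strip() for p in document.split("\n\n") if p.strip()]


def plan_slide(segment_text: str) -> Slide:
    """Hypothetical planner: the first sentence becomes the title and the
    full segment becomes the narration. A real system would call an LLM."""
    title = segment_text.split(".")[0].strip()
    return Slide(title=title, narration=segment_text)


def estimate_duration(narration: str, words_per_minute: float = 150.0) -> float:
    """Assumed speaking rate; a real system would use the TTS audio length."""
    return len(narration.split()) / words_per_minute * 60.0


def build_timeline(document: str) -> list[Slide]:
    """Assign each slide a display window matching its narration length,
    so each visual frame stays on screen while its audio plays."""
    slides = [plan_slide(s) for s in segment(document)]
    t = 0.0
    for slide in slides:
        slide.start = t
        t += estimate_duration(slide.narration)
        slide.end = t
    return slides
```

Calling `build_timeline` on a document with two blank-line-separated paragraphs yields two slides whose time windows are contiguous. In this sketch, alignment reduces to giving every slide the duration of its own narration; a video composer would then render each frame for exactly that window.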