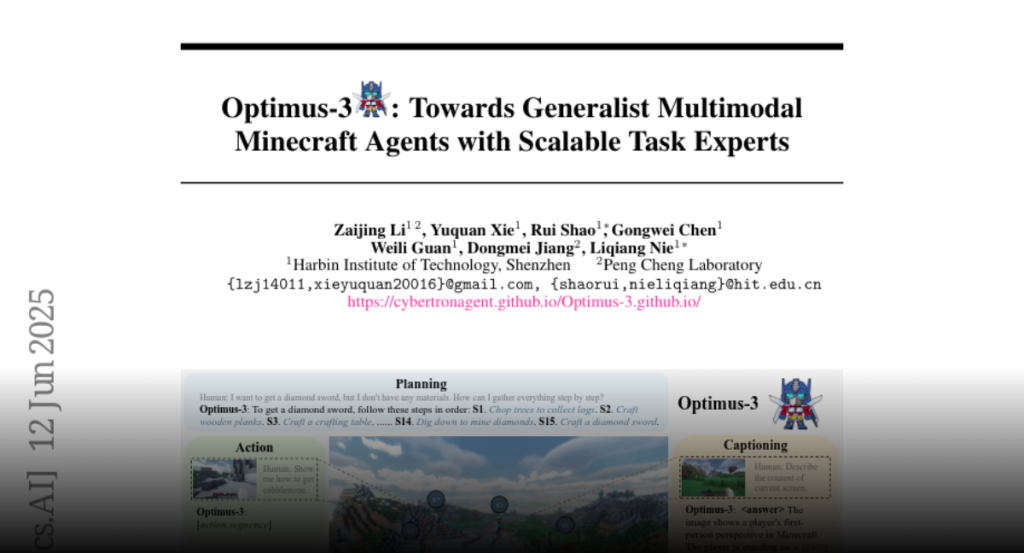

Optimus-3, a multimodal large language model agent, uses knowledge-enhanced data generation, a Mixture-of-Experts architecture, and multimodal reasoning-augmented reinforcement learning to achieve superior performance across various tasks in Minecraft.

Recently, agents based on multimodal large language models (MLLMs) have achieved remarkable progress across various domains. However, building a generalist agent with capabilities such as perception, planning, action, grounding, and reflection in open-world environments like Minecraft remains difficult because of three challenges: insufficient domain-specific data, interference among heterogeneous tasks, and visual diversity in open-world settings. In this paper, we address these challenges through three key contributions. 1) We propose a knowledge-enhanced data generation pipeline to provide scalable and high-quality training data for agent development. 2) To mitigate interference among heterogeneous tasks, we introduce a Mixture-of-Experts (MoE) architecture with task-level routing. 3) We develop a Multimodal Reasoning-Augmented Reinforcement Learning approach to enhance the agent’s reasoning ability for visual diversity in Minecraft. Built upon these innovations, we present Optimus-3, a general-purpose agent for Minecraft. Extensive experimental results demonstrate that Optimus-3 surpasses both generalist multimodal large language models and existing state-of-the-art agents across a wide range of tasks in the Minecraft environment. Project page: https://cybertronagent.github.io/Optimus-3.github.io/
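
To make the idea of task-level routing concrete, the following is a minimal sketch and not the Optimus-3 implementation. It assumes that each query carries a known task label and that each task maps to exactly one expert; the class `TaskRoutedMoE`, the helper `make_scaling_expert`, and the toy scaling experts are hypothetical names introduced only for illustration. The point it shows is that the routing decision is made once per query, so every token of a planning query goes through the planning expert, in contrast to token-level routing where each token may pick a different expert.

```python
from typing import Callable, Dict, List

Vector = List[float]
Expert = Callable[[Vector], Vector]


def make_scaling_expert(scale: float) -> Expert:
    """Toy stand-in for an expert feed-forward network: scales each feature."""
    def expert(hidden: Vector) -> Vector:
        return [scale * h for h in hidden]
    return expert


class TaskRoutedMoE:
    """Hypothetical layer that sends all tokens of a query to one task expert."""

    def __init__(self, experts: Dict[str, Expert]) -> None:
        if not experts:
            raise ValueError("at least one expert is required")
        self.experts = experts

    def forward(self, tokens: List[Vector], task: str) -> List[Vector]:
        if task not in self.experts:
            raise KeyError(f"no expert registered for task {task!r}")
        # One routing decision per query (task level), not per token.
        expert = self.experts[task]
        return [expert(tok) for tok in tokens]


# Task names follow the capabilities listed in the abstract; the mapping of
# one expert per task is an assumption made for this sketch.
TASKS = ["perception", "planning", "action", "grounding", "reflection"]
moe = TaskRoutedMoE(
    {task: make_scaling_expert(float(i + 1)) for i, task in enumerate(TASKS)}
)

tokens = [[1.0, 2.0], [0.5, -1.0]]
planning_out = moe.forward(tokens, "planning")  # all tokens use the planning expert
action_out = moe.forward(tokens, "action")      # all tokens use the action expert
```

Because the experts for different tasks share no parameters in this sketch, an update to the planning expert cannot change the output for an action query, which is the intuition behind using task-level routing to reduce interference among heterogeneous tasks.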