This work introduces MOVE, a large-scale dataset, and DMA, a baseline method, for motion-guided few-shot video object segmentation.

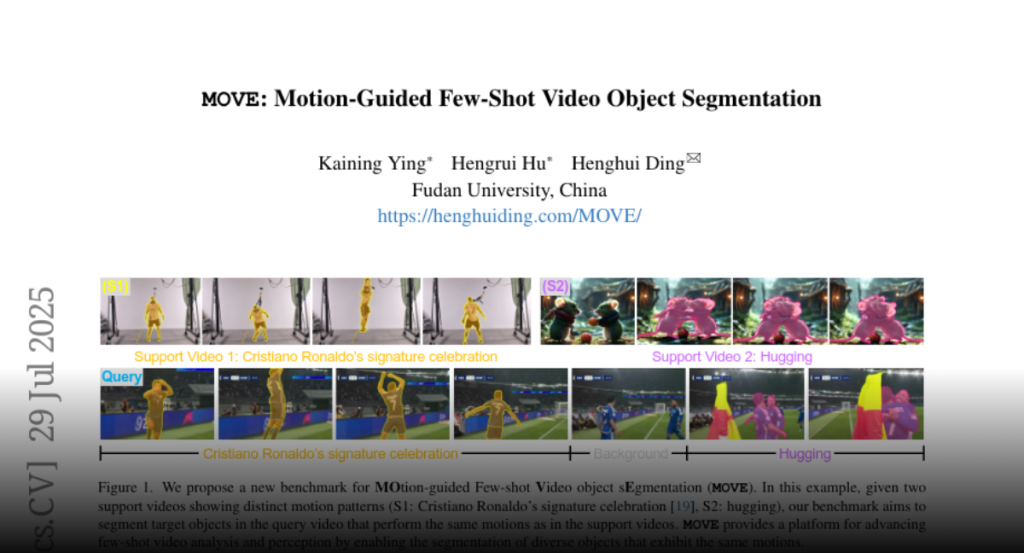

This work addresses motion-guided few-shot video object segmentation (FSVOS), which aims to segment dynamic objects in videos based on a few annotated examples with the same motion patterns. Existing FSVOS datasets and methods typically focus on object categories, which are static attributes that ignore the rich temporal dynamics in videos, limiting their application in scenarios requiring motion understanding. To fill this gap, we introduce MOVE, a large-scale dataset specifically designed for motion-guided FSVOS. Based on MOVE, we comprehensively evaluate six state-of-the-art methods from three related tasks across two experimental settings. Our results reveal that current methods struggle to address motion-guided FSVOS, prompting us to analyze the associated challenges and propose a baseline method, the Decoupled Motion Appearance Network (DMA). Experiments demonstrate that our approach achieves superior performance in few-shot motion understanding, establishing a solid foundation for future research in this direction.