The integration of long-context capabilities with visual understanding

unlocks unprecedented potential for Vision Language Models (VLMs). However, the

quadratic attention complexity during the pre-filling phase remains a

significant obstacle to real-world deployment. To overcome this limitation, we

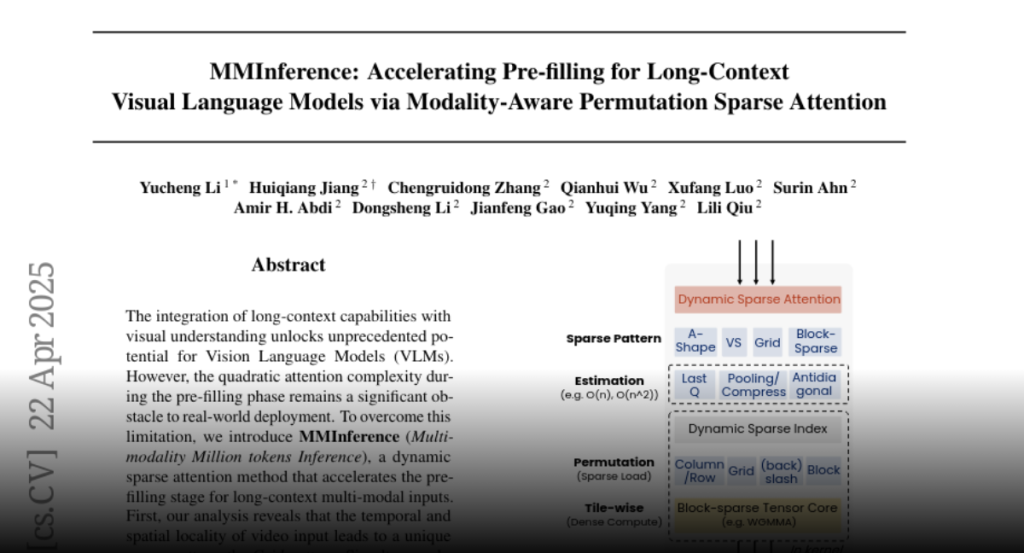

introduce MMInference (Multimodality Million tokens Inference), a dynamic

sparse attention method that accelerates the pre-filling stage for long-context

multi-modal inputs. First, our analysis reveals that the temporal and spatial

locality of video input leads to a unique sparse pattern, the Grid pattern.

Simultaneously, VLMs exhibit markedly different sparse distributions across

different modalities. We introduce a permutation-based method to leverage the

unique Grid pattern and handle modality boundary issues. By searching offline for the

optimal sparse pattern of each head, MMInference constructs the sparse

distribution dynamically based on the input. We also provide optimized GPU

kernels for efficient sparse computations. Notably, MMInference integrates

seamlessly into existing VLM pipelines without any model modifications or

fine-tuning. Experiments on multi-modal benchmarks, including Video QA,

Captioning, VisionNIAH, and Mixed-Modality NIAH, with state-of-the-art

long-context VLMs (LongVila, LlavaVideo, VideoChat-Flash, Qwen2.5-VL) show that

MMInference accelerates the pre-filling stage by up to 8.3x at 1M tokens while

maintaining accuracy. Our code is available at https://aka.ms/MMInference.
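
To make the permutation idea concrete, the following is a minimal NumPy sketch, not the MMInference kernels themselves. It assumes a simplified, non-causal toy "grid" in which every query attends only to keys whose index is a multiple of a fixed `stride`. The helper names (`grid_permutation`, `permuted_grid_attention`) and the toy mask are hypothetical and chosen for illustration only. The sketch shows that reordering keys by `index % stride` turns the strided columns into one contiguous block, so a small dense computation reproduces the masked result.

```python
import numpy as np


def softmax(x, axis=-1):
    # Numerically stable softmax; masked entries (-inf) become exactly 0.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def dense_masked_attention(q, k, v, mask):
    # Reference: full n x n score matrix, with inactive entries masked out.
    scores = q @ k.T / np.sqrt(q.shape[-1])
    scores = np.where(mask, scores, -np.inf)
    return softmax(scores) @ v


def grid_permutation(n, stride):
    # Hypothetical helper: a stable sort by (index mod stride) places all
    # keys that share a phase next to each other, phase 0 first.
    return np.argsort(np.arange(n) % stride, kind="stable")


def permuted_grid_attention(q, k, v, stride):
    # After permutation, the active keys (index % stride == 0) form one
    # contiguous leading block, so only that block is ever computed.
    n = k.shape[0]
    perm = grid_permutation(n, stride)
    n_active = (n + stride - 1) // stride  # number of indices with phase 0
    idx = perm[:n_active]
    scores = q @ k[idx].T / np.sqrt(q.shape[-1])
    return softmax(scores) @ v[idx]


rng = np.random.default_rng(0)
n, d, stride = 16, 8, 4
q, k, v = (rng.standard_normal((n, d)) for _ in range(3))

# Toy grid mask (an assumption of this sketch): vertical lines every `stride` keys.
mask = np.broadcast_to(np.arange(n) % stride == 0, (n, n))

ref = dense_masked_attention(q, k, v, mask)
out = permuted_grid_attention(q, k, v, stride)
print(np.allclose(ref, out))
```

In this toy setting the permuted version computes an n x (n / stride) score matrix instead of n x n. An actual implementation would additionally need to handle causal masking, horizontal grid lines, and modality boundaries, none of which are modeled here.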