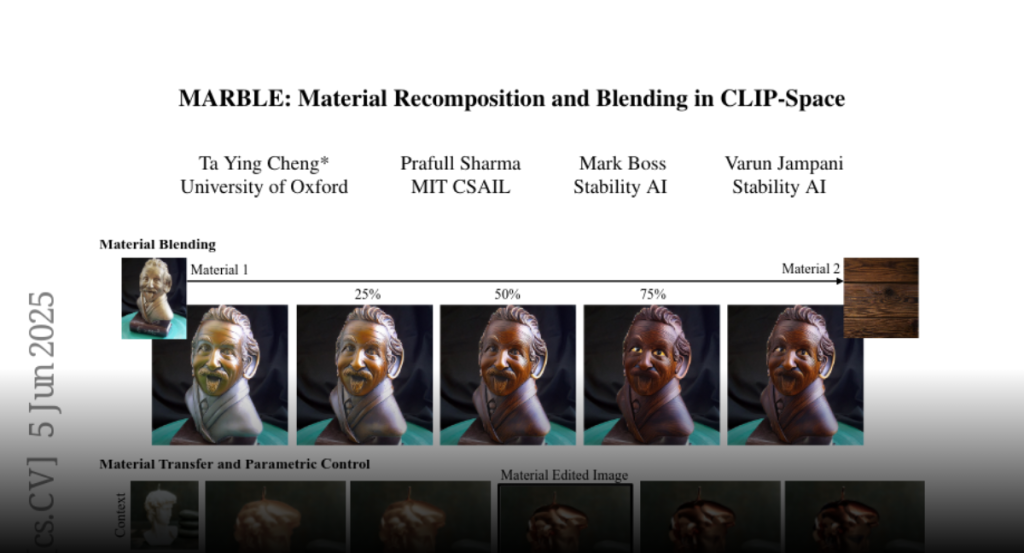

MARBLE uses material embeddings in CLIP-space to control pre-trained text-to-image models, enabling material blending and recomposition of material properties in images with parametric control over attributes.

Editing the materials of objects in images based on exemplar images is an active area of research in computer vision and graphics. We propose MARBLE, a method for blending materials and recomposing fine-grained material properties by finding material embeddings in CLIP-space and using them to control pre-trained text-to-image models. We improve exemplar-based material editing by identifying a block in the denoising UNet that is responsible for material attribution. Given two material exemplar images, we find directions in CLIP-space for blending the materials. Further, we achieve parametric control over fine-grained material attributes such as roughness, metallic, transparency, and glow by using a shallow network to predict the direction for the desired attribute change. We perform qualitative and quantitative analyses to demonstrate the efficacy of the proposed method. We also show that our method can perform multiple edits in a single forward pass, and we demonstrate its applicability to painting.
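The two embedding-space operations described above, blending along a direction between two exemplar embeddings and shifting an embedding along a direction predicted by a shallow network, can be illustrated with a minimal NumPy sketch. This is not the authors' implementation. Several details are assumptions made for illustration: the embedding size (`EMBED_DIM`), the linear form of the blend, the two-layer ReLU architecture of the hypothetical `AttributeDirectionMLP` (left untrained here), and the names `blend_materials` and `edit_attribute`. Extracting CLIP embeddings and injecting the result into the material-attribution block of the UNet are not shown.

```python
import numpy as np

EMBED_DIM = 768  # hypothetical CLIP embedding size, chosen for illustration


def blend_materials(e_a: np.ndarray, e_b: np.ndarray, alpha: float) -> np.ndarray:
    """Move from material embedding e_a toward e_b along the direction (e_b - e_a).

    Assumed linear blend: alpha = 0 returns e_a and alpha = 1 returns e_b.
    """
    direction = e_b - e_a
    return e_a + alpha * direction


class AttributeDirectionMLP:
    """Hypothetical shallow network mapping an embedding to an edit direction
    for one attribute (e.g. roughness). Weights are random and untrained here;
    a usable model would have to be trained."""

    def __init__(self, dim: int, hidden: int = 256, seed: int = 0):
        rng = np.random.default_rng(seed)
        self.w1 = rng.normal(0.0, 0.02, size=(dim, hidden))
        self.b1 = np.zeros(hidden)
        self.w2 = rng.normal(0.0, 0.02, size=(hidden, dim))
        self.b2 = np.zeros(dim)

    def __call__(self, e: np.ndarray) -> np.ndarray:
        h = np.maximum(0.0, e @ self.w1 + self.b1)  # ReLU hidden layer
        return h @ self.w2 + self.b2  # predicted direction, same size as e


def edit_attribute(e: np.ndarray, mlp: AttributeDirectionMLP, strength: float) -> np.ndarray:
    """Shift e along the predicted direction; strength is the parametric control."""
    return e + strength * mlp(e)


# Usage with random stand-ins for two exemplar material embeddings.
rng = np.random.default_rng(1)
e_a = rng.normal(size=EMBED_DIM)
e_b = rng.normal(size=EMBED_DIM)
mid = blend_materials(e_a, e_b, 0.5)  # halfway between the two materials
rougher = edit_attribute(mid, AttributeDirectionMLP(EMBED_DIM), strength=0.8)
```

Treating `strength` as a scalar multiplier on a predicted direction is one simple way to get a continuous control knob. With `strength = 0` the embedding is left unchanged, and larger values push it further along the predicted attribute direction.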

Project Page: https://marblecontrol.github.io/