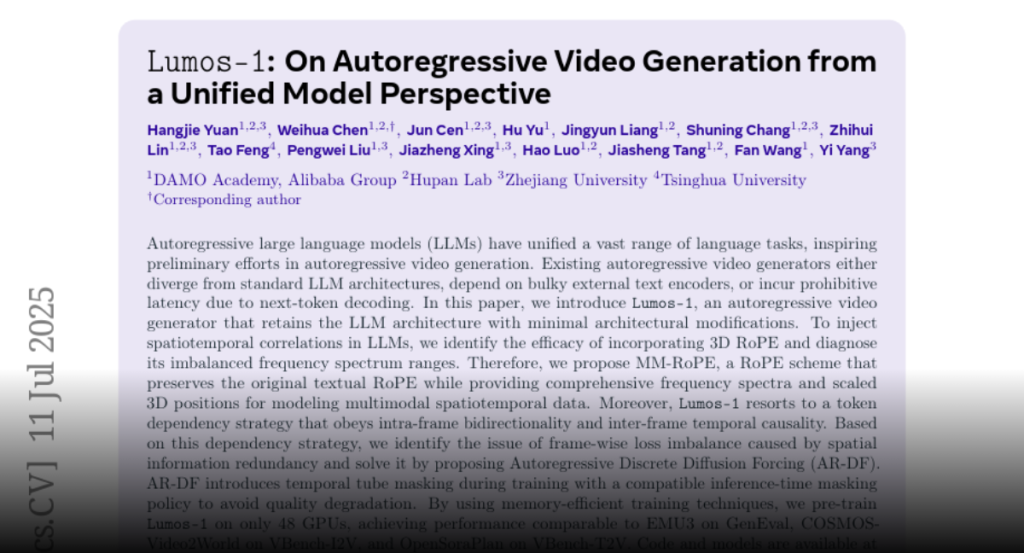

Lumos-1 is an autoregressive video generator that keeps the LLM architecture nearly unchanged, adding MM-RoPE to model spatiotemporal correlation and AR-DF to counter frame-wise loss imbalance; it reaches competitive performance after pre-training on only 48 GPUs.

Autoregressive large language models (LLMs) have unified a vast range of language tasks, inspiring preliminary efforts in autoregressive video generation. Existing autoregressive video generators either diverge from standard LLM architectures, depend on bulky external text encoders, or incur prohibitive latency due to next-token decoding. In this paper, we introduce Lumos-1, an autoregressive video generator that retains the LLM architecture with minimal architectural modifications. To inject spatiotemporal correlations into LLMs, we identify the efficacy of incorporating 3D RoPE and diagnose its imbalanced frequency spectrum ranges. Therefore, we propose MM-RoPE, a RoPE scheme that preserves the original textual RoPE while providing comprehensive frequency spectra and scaled 3D positions for modeling multimodal spatiotemporal data. Moreover, Lumos-1 adopts a token dependency strategy that obeys intra-frame bidirectionality and inter-frame temporal causality. Based on this dependency strategy, we identify the issue of frame-wise loss imbalance caused by spatial information redundancy and solve it by proposing Autoregressive Discrete Diffusion Forcing (AR-DF). AR-DF introduces temporal tube masking during training with a compatible inference-time masking policy to avoid quality degradation. By using memory-efficient training techniques, we pre-train Lumos-1 on only 48 GPUs, achieving performance comparable to EMU3 on GenEval, COSMOS-Video2World on VBench-I2V, and OpenSoraPlan on VBench-T2V. Code and models are available at https://github.com/alibaba-damo-academy/Lumos.
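To make the token dependency strategy and temporal tube masking more concrete, here is a minimal Python sketch. It is an illustration under stated assumptions, not the released Lumos-1 code: the function names `frame_causal_mask` and `temporal_tube_mask`, the uniform random choice of masked positions, and the omission of text tokens are all hypothetical simplifications, and the abstract does not specify the mask ratio or the inference-time policy.

```python
import random


def frame_causal_mask(num_frames, tokens_per_frame):
    """Return mask[q][k], True when query token q may attend to key token k.

    Assumption: tokens are laid out frame by frame. A token sees every
    token in its own frame (bidirectional) and in all earlier frames
    (temporally causal), but nothing from later frames.
    """
    n = num_frames * tokens_per_frame
    frame_of = [i // tokens_per_frame for i in range(n)]
    return [[frame_of[k] <= frame_of[q] for k in range(n)] for q in range(n)]


def temporal_tube_mask(num_frames, height, width, mask_ratio, rng):
    """Return mask[t][r][c], True where a visual token is masked.

    Assumption: one spatial pattern is sampled uniformly at random and
    repeated across every frame, so each masked position forms a "tube"
    along the time axis.
    """
    num_positions = height * width
    num_masked = round(mask_ratio * num_positions)
    masked = set(rng.sample(range(num_positions), num_masked))
    spatial = [[(r * width + c) in masked for c in range(width)]
               for r in range(height)]
    # Copy the same spatial pattern for each frame.
    return [[row[:] for row in spatial] for _ in range(num_frames)]


# Example: 2 frames of 2 tokens each, and a 3-frame 2x2 tube mask.
attn = frame_causal_mask(num_frames=2, tokens_per_frame=2)
tube = temporal_tube_mask(num_frames=3, height=2, width=2,
                          mask_ratio=0.5, rng=random.Random(0))
```

One way to read the tube pattern: because the same spatial positions are hidden in every frame, a masked token cannot be recovered by copying the identical location from a neighboring frame, which is the kind of spatial redundancy the abstract associates with frame-wise loss imbalance.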