Scientific equation discovery is a fundamental task in the history of

scientific progress, enabling the derivation of laws governing natural

phenomena. Recently, Large Language Models (LLMs) have gained interest for this

task due to their potential to leverage embedded scientific knowledge for

hypothesis generation. However, evaluating the true discovery capabilities of

these methods remains challenging, as existing benchmarks often rely on common

equations that are susceptible to memorization by LLMs, leading to inflated

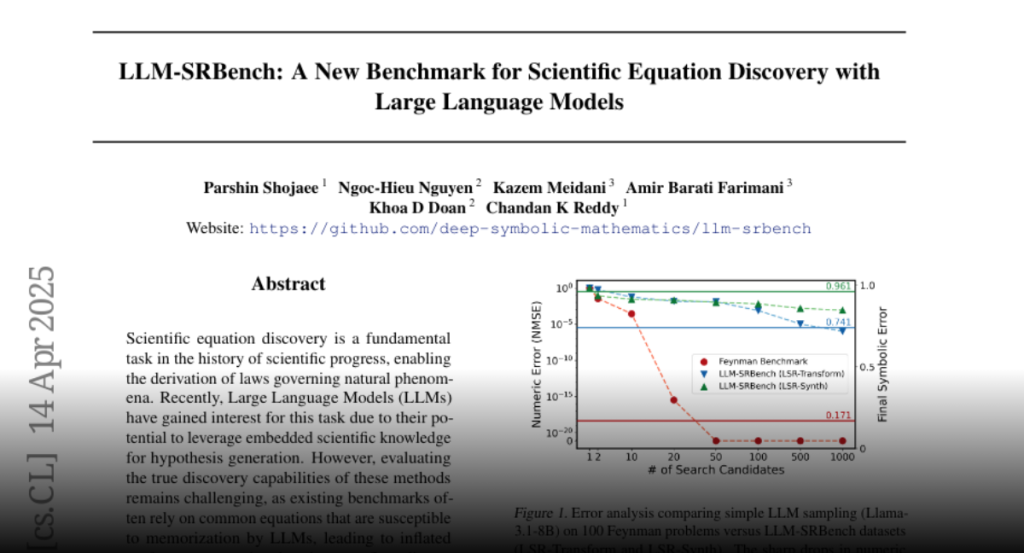

performance metrics that do not reflect discovery. In this paper, we introduce

LLM-SRBench, a comprehensive benchmark with 239 challenging problems across

four scientific domains specifically designed to evaluate LLM-based scientific

equation discovery methods while preventing trivial memorization. Our benchmark

comprises two main categories: LSR-Transform, which transforms common physical

models into less common mathematical representations to test reasoning beyond

memorized forms, and LSR-Synth, which introduces synthetic, discovery-driven

problems requiring data-driven reasoning. Through extensive evaluation of

several state-of-the-art methods, using both open and closed LLMs, we find that

the best-performing system so far achieves only 31.5% symbolic accuracy. These

findings highlight the challenges of scientific equation discovery, positioning

LLM-SRBench as a valuable resource for future research.
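To illustrate the general idea behind LSR-Transform, the sketch below uses SymPy to re-express a familiar law so that a different variable becomes the quantity to be discovered. It is not the benchmark's actual pipeline. The helper `solve_for` is hypothetical, and the relativistic-mass relation was chosen only as an example of a commonly memorized form.

```python
import sympy as sp

def solve_for(lhs, rhs, target):
    """Hypothetical helper: rewrite lhs = rhs with `target` isolated.

    Returns every candidate expression SymPy finds for `target`
    (there may be more than one root, e.g. of opposite sign).
    """
    return sp.solve(sp.Eq(lhs, rhs), target)

# Symbols for a familiar, easily memorized law: relativistic mass.
m, m0, v, c = sp.symbols("m m0 v c", positive=True)
original_rhs = m0 / sp.sqrt(1 - v**2 / c**2)  # m = m0 / sqrt(1 - v^2/c^2)

# Illustrative transform: treat the speed v, not the mass m, as the target.
candidates = solve_for(m, original_rhs, v)
for expr in candidates:
    print(sp.Eq(v, expr))
```

Each printed equation expresses `v` in terms of `m`, `m0`, and `c`. This describes the same physical relation in a less commonly written form, so a model cannot simply recall the textbook expression for `m`.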