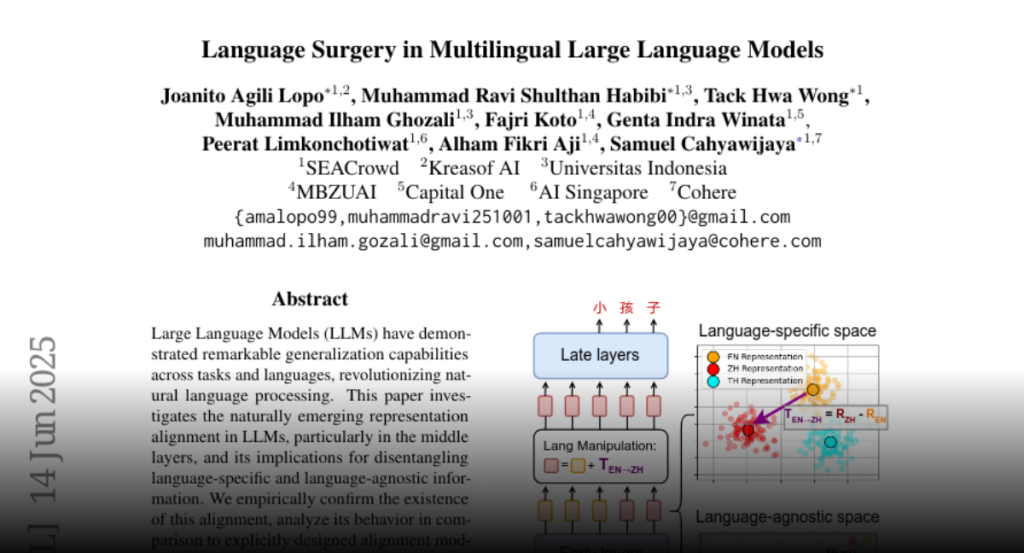

We’re excited to share our latest work, “Language Surgery in Multilingual Large Language Models”. We propose Inference-Time Language Control (ITLC), a method that improves cross-lingual language control and mitigates language confusion in Large Language Models (LLMs). ITLC uses latent injection to manipulate language-specific information during inference while preserving semantic integrity. It exploits the representation alignment that emerges in LLMs’ middle layers, which we confirm through cosine similarity analysis. With this, ITLC achieves zero-shot cross-lingual generation (10.70 average BLEU) and mitigates language confusion (2.7x better LCPR, 4x better LPR) without compromising meaning. The result is a practical solution for cross-lingual tasks that need output in a consistent target language.
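To give a feel for what latent injection means, here is a toy sketch in plain Python. It is not the ITLC implementation (see the paper and repository for that). It assumes, purely for illustration, that language information can be approximated by the mean hidden state per language, and that injection is a simple shift from the source-language mean to the target-language mean. The helper names (`cosine`, `mean_vector`, `inject_language`), the `alpha` scaling factor, and the 4-dimensional vectors are all hypothetical.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def mean_vector(vectors):
    """Element-wise mean of a list of equal-length vectors."""
    n = len(vectors)
    return [sum(col) / n for col in zip(*vectors)]


def inject_language(hidden, src_mean, tgt_mean, alpha=1.0):
    """Shift a hidden state from the source-language mean toward the target one.

    Assumption for this sketch only: a mean-difference shift stands in for
    the latent injection step; `alpha` is a hypothetical scaling factor.
    """
    return [h + alpha * (t - s) for h, s, t in zip(hidden, src_mean, tgt_mean)]


# Toy "hidden states": the first two dimensions play the role of meaning,
# the last two play the role of language identity (made-up numbers).
en_states = [[1.0, 0.0, 1.0, 0.0], [0.0, 1.0, 1.0, 0.0]]
id_states = [[1.0, 0.0, 0.0, 1.0], [0.0, 1.0, 0.0, 1.0]]

en_mean = mean_vector(en_states)
id_mean = mean_vector(id_states)

# Move the first English state toward Indonesian while keeping its meaning dims.
shifted = inject_language(en_states[0], en_mean, id_mean)

before = cosine(en_states[0], id_states[0])
after = cosine(shifted, id_states[0])
print(f"similarity before: {before:.2f}, after: {after:.2f}")
```

In this toy setup the shift changes only the "language" dimensions, so the shifted vector lines up with its Indonesian counterpart while the "meaning" dimensions stay untouched. Real hidden states do not separate this cleanly, which is why the actual method needs a more careful way to isolate language-specific information.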

📖 Paper: http://arxiv.org/abs/2506.12450

💻 Code: https://github.com/SEACrowd/itlc