IGL-Nav uses an incremental 3D Gaussian representation for efficient and accurate image-goal navigation in 3D space; it outperforms existing methods and can be deployed in real-world settings.

Visual navigation with an image as goal is a fundamental and challenging

problem. Conventional methods either rely on end-to-end RL learning or

modular-based policy with topological graph or BEV map as memory, which cannot

fully model the geometric relationship between the explored 3D environment and

the goal image. In order to efficiently and accurately localize the goal image

in 3D space, we build our navigation system upon the renderable 3D gaussian

(3DGS) representation. However, due to the computational intensity of 3DGS

optimization and the large search space of 6-DoF camera pose, directly

leveraging 3DGS for image localization during agent exploration process is

prohibitively inefficient. To this end, we propose IGL-Nav, an Incremental 3D

Gaussian Localization framework for efficient and 3D-aware image-goal

navigation. Specifically, we incrementally update the scene representation as

new images arrive with feed-forward monocular prediction. Then we coarsely

localize the goal by leveraging the geometric information for discrete space

matching, which can be equivalent to efficient 3D convolution. When the agent

is close to the goal, we finally solve the fine target pose with optimization

via differentiable rendering. The proposed IGL-Nav outperforms existing

state-of-the-art methods by a large margin across diverse experimental

configurations. It can also handle the more challenging free-view image-goal

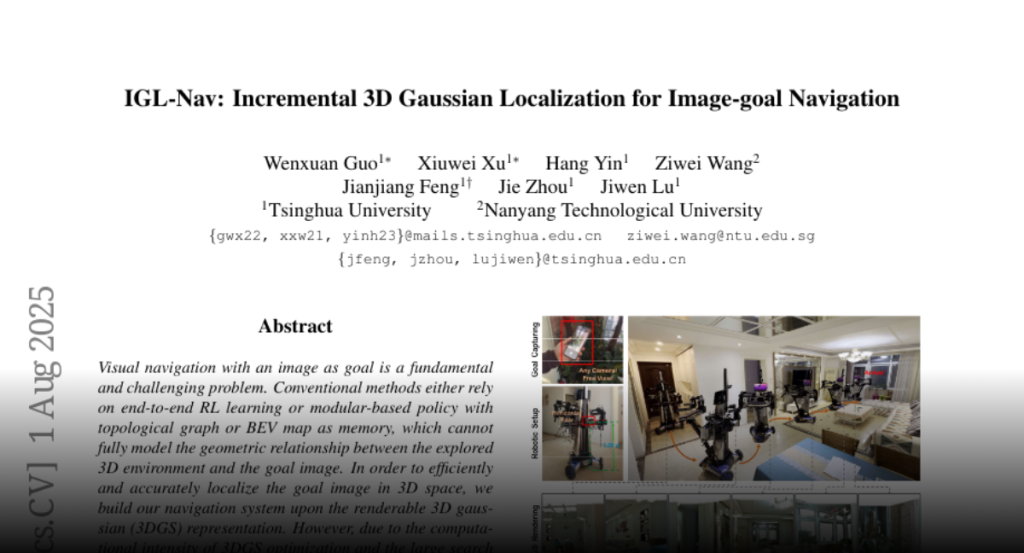

setting and be deployed on real-world robotic platform using a cellphone to

capture goal image at arbitrary pose. Project page:

https://gwxuan.github.io/IGL-Nav/.
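The abstract states that coarse goal localization over a discretized space can be computed as an efficient 3D convolution. The following is a minimal NumPy sketch of that idea. It is not the authors' implementation and rests on assumptions of our own: the explored scene and the goal view are both voxelized into dense 3D grids (`scene`, `template`), candidate poses are restricted to voxel offsets plus yaw in 90-degree steps, and the match score is a raw dot product. The names `correlate3d` and `coarse_localize` are hypothetical.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def correlate3d(scene: np.ndarray, template: np.ndarray) -> np.ndarray:
    """Valid-mode 3D cross-correlation (the 'convolution' of deep-learning libraries).

    Entry (i, j, k) scores the template placed with its corner at voxel (i, j, k).
    """
    windows = sliding_window_view(scene, template.shape)  # (X', Y', Z', tx, ty, tz)
    return np.einsum("ijkabc,abc->ijk", windows, template)


def coarse_localize(scene: np.ndarray, template: np.ndarray, n_quarter_turns: int = 4):
    """Exhaustive discrete search over voxel offsets and yaw in 90-degree steps.

    Returns (best_score, best_offset, best_yaw_degrees). Hypothetical helper.
    """
    best = (-np.inf, None, None)
    for k in range(n_quarter_turns):
        # Rotate the template about the vertical (z) axis; np.rot90 turns axis 0 toward axis 1.
        rotated = np.rot90(template, k=k, axes=(0, 1))
        if any(r > s for r, s in zip(rotated.shape, scene.shape)):
            continue  # this orientation does not fit inside the scene grid
        scores = correlate3d(scene, rotated)  # one correlation per discrete rotation
        idx = np.unravel_index(np.argmax(scores), scores.shape)
        if scores[idx] > best[0]:
            best = (float(scores[idx]), tuple(int(v) for v in idx), 90 * k)
    return best


if __name__ == "__main__":
    # Toy check: hide a rotated copy of the template in an otherwise empty scene.
    template = np.arange(1, 13, dtype=float).reshape(3, 2, 2)
    scene = np.zeros((10, 10, 4))
    scene[5:7, 3:6, 1:3] = np.rot90(template, k=1, axes=(0, 1))
    score, offset, yaw = coarse_localize(scene, template)
    print(offset, yaw)  # (5, 3, 1) 90
```

Because every candidate offset is scored by the same sliding-window dot product, the translation search collapses into one 3D cross-correlation per discrete rotation, which is what makes it convolution-shaped and easy to run on a GPU. A practical system would likely normalize the scores so that densely occupied regions are not favored, and would pass the best coarse pose on to a finer, continuous refinement such as the differentiable-rendering step described above.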