Single-image human reconstruction is vital for digital human modeling

applications but remains an extremely challenging task. Current approaches rely

on generative models to synthesize multi-view images for subsequent 3D

reconstruction and animation. However, directly generating multiple views from

a single human image suffers from geometric inconsistencies, resulting in

issues like fragmented or blurred limbs in the reconstructed models. To tackle

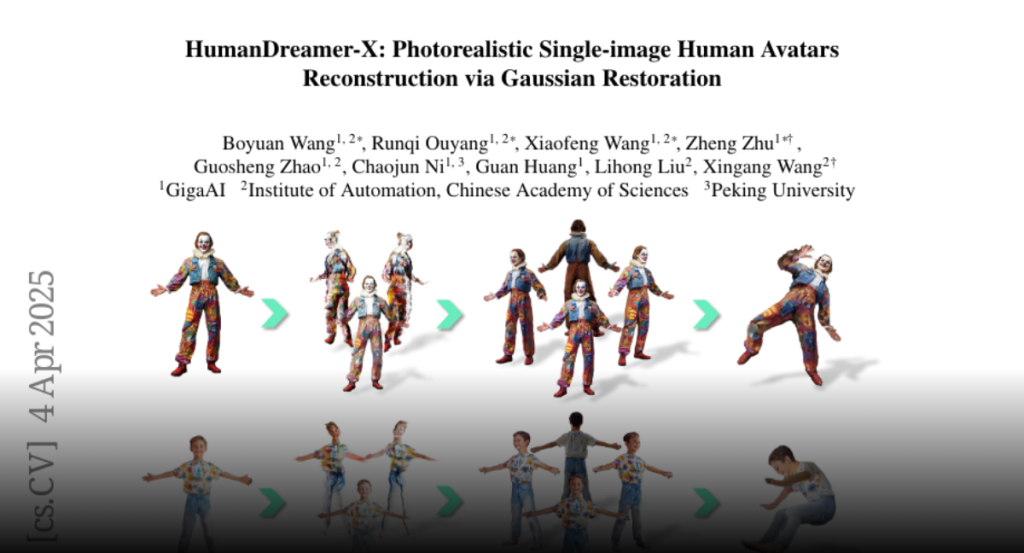

these limitations, we introduce HumanDreamer-X, a novel framework that

integrates multi-view human generation and reconstruction into a unified

pipeline, which significantly enhances the geometric consistency and visual

fidelity of the reconstructed 3D models. In this framework, 3D Gaussian

Splatting (3DGS) serves as an explicit 3D representation to provide initial geometry

and appearance priors. Building upon this foundation, HumanFixer is

trained to restore 3DGS renderings, which guarantees photorealistic results.

Furthermore, we delve into the inherent challenges associated with attention

mechanisms in multi-view human generation, and propose an attention modulation

strategy that effectively enhances geometric details and identity consistency

across views. Experimental results demonstrate that our approach markedly

improves generation and reconstruction PSNR by 16.45% and

12.65%, respectively, achieving a PSNR of up to 25.62 dB, while also showing

generalization capabilities on in-the-wild data and applicability to various

human reconstruction backbone models.
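
For readers unfamiliar with the metric, the following is a minimal sketch of how PSNR (in dB) and a relative improvement percentage are commonly computed. It uses the standard PSNR definition and is not taken from the HumanDreamer-X evaluation code; the function names `psnr` and `relative_improvement`, the `max_value` parameter, and the assumption that images are normalized to [0, 1] are all illustrative.

```python
import numpy as np


def psnr(reference, rendered, max_value=1.0):
    """Peak signal-to-noise ratio in dB between two images of equal shape.

    Assumes pixel values lie in [0, max_value] (hypothetical convention).
    """
    reference = np.asarray(reference, dtype=np.float64)
    rendered = np.asarray(rendered, dtype=np.float64)
    mse = np.mean((reference - rendered) ** 2)
    if mse == 0.0:
        # Identical images: PSNR is unbounded.
        return float("inf")
    return 10.0 * np.log10(max_value ** 2 / mse)


def relative_improvement(baseline, ours):
    """Relative gain of `ours` over `baseline`, in percent."""
    return 100.0 * (ours - baseline) / baseline
```

Under this definition, a reported percentage gain is relative to the baseline PSNR value, so the same percentage corresponds to a larger absolute dB gain when the baseline is higher.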