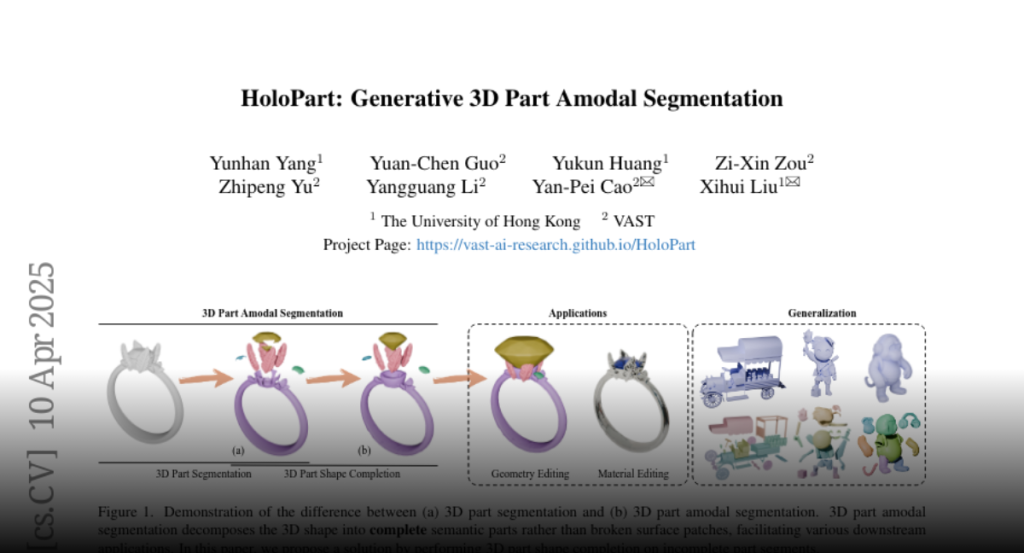

3D part amodal segmentation, which decomposes a 3D shape into complete, semantically meaningful parts even when they are occluded, is a challenging but crucial task for 3D content creation and understanding. Existing 3D part segmentation methods only identify visible surface patches, limiting their utility. Inspired by 2D amodal segmentation, we introduce this novel task to the 3D domain and propose a practical two-stage approach that addresses the key challenges of inferring occluded 3D geometry, maintaining global shape consistency, and handling diverse shapes with limited training data. First, we leverage existing 3D part segmentation to obtain initial, incomplete part segments. Second, we introduce HoloPart, a novel diffusion-based model, to complete these segments into full 3D parts. HoloPart utilizes a specialized architecture with local attention to capture fine-grained part geometry and global shape context attention to ensure overall shape consistency. We introduce new benchmarks based on the ABO and PartObjaverse-Tiny datasets and demonstrate that HoloPart significantly outperforms state-of-the-art shape completion methods. By combining HoloPart with existing segmentation techniques, we achieve promising results on 3D part amodal segmentation, opening new avenues for applications in geometry editing, animation, and material assignment.