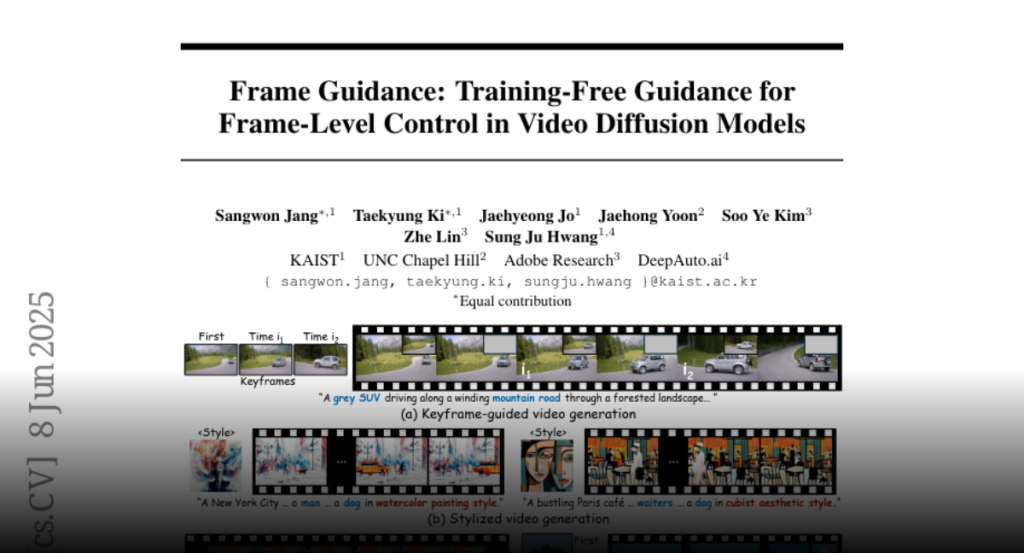

Frame Guidance offers a training-free method for controlling video generation using frame-level signals, reducing memory usage and improving the global coherence of the generated video.

Advancements in diffusion models have significantly improved video quality, directing attention to fine-grained controllability. However, many existing methods depend on fine-tuning large-scale video models for specific tasks, which becomes increasingly impractical as model sizes continue to grow. In this work, we present Frame Guidance, a training-free guidance method for controllable video generation based on frame-level signals, such as keyframes, style reference images, sketches, or depth maps. To make training-free guidance practical, we propose a simple latent processing method that dramatically reduces memory usage, and apply a novel latent optimization strategy designed for globally coherent video generation. Frame Guidance enables effective control across diverse tasks, including keyframe guidance, stylization, and looping, without any training, and is compatible with any video model. Experimental results show that Frame Guidance can produce high-quality controlled videos for a wide range of tasks and input signals.