Despite significant advances in video generation, synthesizing physically

plausible human actions remains a persistent challenge, particularly in

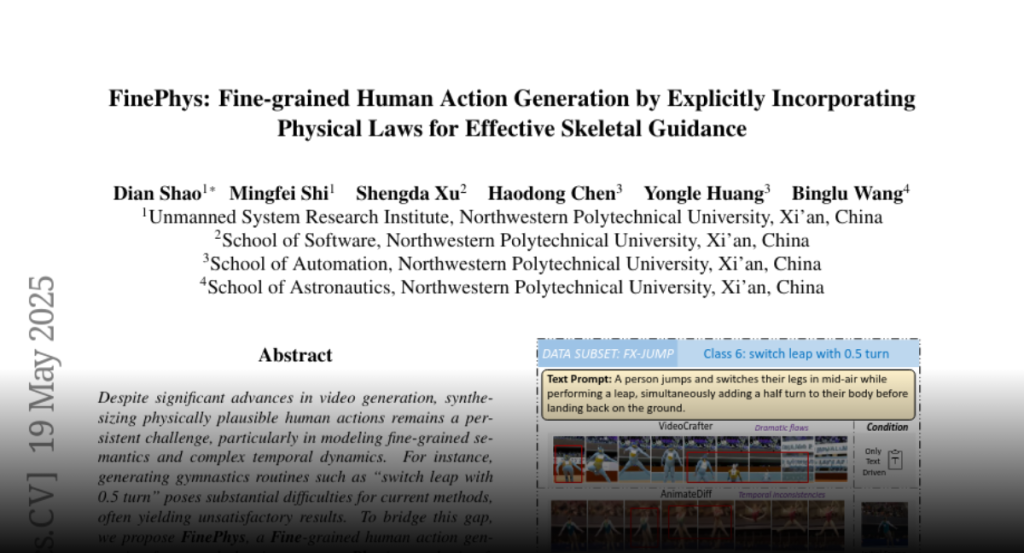

modeling fine-grained semantics and complex temporal dynamics. For instance,

generating gymnastics routines such as “switch leap with 0.5 turn” poses

substantial difficulties for current methods, often yielding unsatisfactory

results. To bridge this gap, we propose FinePhys, a Fine-grained human action

generation framework that incorporates Physics to obtain effective skeletal

guidance. Specifically, FinePhys first estimates 2D poses in an online manner

and then performs 2D-to-3D dimension lifting via in-context learning. To

mitigate the instability and limited interpretability of purely data-driven 3D

poses, we further introduce a physics-based motion re-estimation module

governed by Euler-Lagrange equations, calculating joint accelerations via

bidirectional temporal updating. The physically predicted 3D poses are then

fused with data-driven ones, offering multi-scale 2D heatmap guidance for the

diffusion process. Evaluated on three fine-grained action subsets from FineGym

(FX-JUMP, FX-TURN, and FX-SALTO), FinePhys significantly outperforms

competitive baselines. Comprehensive qualitative results further demonstrate

FinePhys’s ability to generate more natural and plausible fine-grained human

actions.
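For readers unfamiliar with how Euler-Lagrange equations yield joint accelerations, the textbook rigid-body derivation is sketched below. It assumes generalized coordinates $q$ (for example, joint angles), a kinetic energy quadratic in velocity with mass matrix $M(q)$, a potential $V(q)$, and generalized forces $\tau$; $C(q,\dot q)\,\dot q$ collects the Coriolis and centrifugal terms. The last three lines are only an illustrative assumption of what a bidirectional temporal update could look like (forward and backward second-order Taylor predictions, averaged); the abstract does not specify FinePhys's actual parameterization or update rule.

```latex
\begin{aligned}
\mathcal{L}(q,\dot q) &= T(q,\dot q) - V(q), \qquad T(q,\dot q) = \tfrac{1}{2}\,\dot q^{\top} M(q)\,\dot q \\
\frac{d}{dt}\frac{\partial \mathcal{L}}{\partial \dot q} - \frac{\partial \mathcal{L}}{\partial q} &= \tau
\;\;\Longrightarrow\;\; M(q)\,\ddot q + C(q,\dot q)\,\dot q + g(q) = \tau, \qquad g(q) = \frac{\partial V}{\partial q} \\
\ddot q &= M(q)^{-1}\bigl(\tau - C(q,\dot q)\,\dot q - g(q)\bigr) \\
q_{t}^{\rightarrow} &= q_{t-1} + \Delta t\,\dot q_{t-1} + \tfrac{1}{2}\,\Delta t^{2}\,\ddot q_{t-1} \\
q_{t}^{\leftarrow} &= q_{t+1} - \Delta t\,\dot q_{t+1} + \tfrac{1}{2}\,\Delta t^{2}\,\ddot q_{t+1} \\
\hat q_{t} &= \tfrac{1}{2}\bigl(q_{t}^{\rightarrow} + q_{t}^{\leftarrow}\bigr)
\end{aligned}
```

Averaging the two one-sided predictions is one simple way to let both neighboring frames constrain frame $t$; a learned or confidence-based weighting would be an equally plausible alternative.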
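The final stage, turning fused 3D poses into multi-scale 2D heatmaps that can condition a diffusion model, might look roughly like the sketch below. Everything here is an assumption for illustration rather than FinePhys's implementation: the fixed scalar blending weight `alpha`, the orthographic projection that simply drops depth, the normalized `[0, 1]` image coordinates, the isotropic Gaussian heatmaps, the resolutions `(64, 32, 16)`, and the hypothetical helper names `fuse_poses`, `project_orthographic`, `render_heatmaps`, and `multiscale_heatmaps`.

```python
import numpy as np


def fuse_poses(pose_phys: np.ndarray, pose_data: np.ndarray, alpha: float = 0.5) -> np.ndarray:
    """Blend physics-predicted and data-driven 3D poses, both shaped (T, J, 3).

    alpha is a hypothetical fixed weight on the physics branch (assumption).
    """
    if pose_phys.shape != pose_data.shape:
        raise ValueError("pose arrays must have the same shape")
    return alpha * pose_phys + (1.0 - alpha) * pose_data


def project_orthographic(pose_3d: np.ndarray) -> np.ndarray:
    """Assumed orthographic camera: keep (x, y), drop depth z. Returns (T, J, 2)."""
    return pose_3d[..., :2]


def render_heatmaps(joints_2d: np.ndarray, size: int, sigma: float) -> np.ndarray:
    """Render one Gaussian heatmap per joint.

    joints_2d: (T, J, 2) coordinates (x, y) normalized to [0, 1].
    Returns an array shaped (T, J, size, size) whose value is 1 at each joint's pixel.
    """
    coords = np.arange(size, dtype=np.float64)
    ys, xs = np.meshgrid(coords, coords, indexing="ij")  # ys[i, j] = i (row), xs[i, j] = j (col)
    px = joints_2d[..., 0] * (size - 1)  # (T, J) pixel x
    py = joints_2d[..., 1] * (size - 1)  # (T, J) pixel y
    # Broadcast (1, 1, size, size) against (T, J, 1, 1) -> (T, J, size, size)
    dx = xs[None, None] - px[..., None, None]
    dy = ys[None, None] - py[..., None, None]
    return np.exp(-(dx**2 + dy**2) / (2.0 * sigma**2))


def multiscale_heatmaps(joints_2d: np.ndarray, sizes=(64, 32, 16), base_sigma: float = 2.0):
    """One heatmap stack per resolution; sigma shrinks with resolution so blobs keep their relative size."""
    return [render_heatmaps(joints_2d, s, base_sigma * s / sizes[0]) for s in sizes]


if __name__ == "__main__":
    T, J = 4, 17
    rng = np.random.default_rng(0)
    pose_data = rng.uniform(0.2, 0.8, size=(T, J, 3))  # stand-in for lifted 3D poses
    pose_phys = pose_data + rng.normal(0.0, 0.01, size=(T, J, 3))  # stand-in for physics re-estimates
    fused = fuse_poses(pose_phys, pose_data, alpha=0.5)
    maps = multiscale_heatmaps(project_orthographic(fused))
    print([m.shape for m in maps])  # [(4, 17, 64, 64), (4, 17, 32, 32), (4, 17, 16, 16)]
```

Producing one heatmap stack per resolution makes it straightforward to inject guidance at several feature-map scales of a diffusion backbone; how FinePhys actually injects its heatmaps is not described in the abstract.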