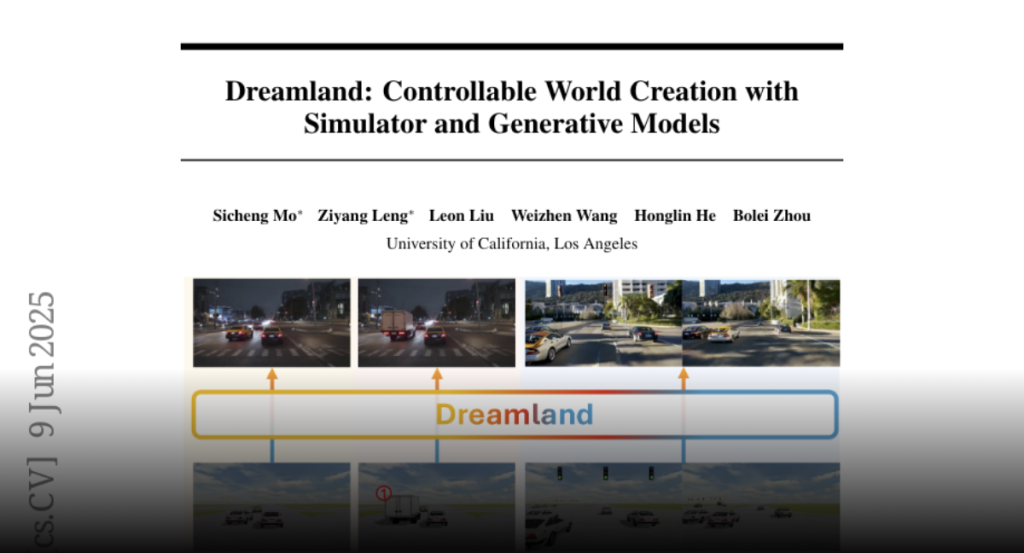

Dreamland, a hybrid framework, combines physics-based simulators and generative models to improve controllability and image quality in video generation.

Large-scale video generative models can synthesize diverse and realistic visual content for dynamic world creation, but they often lack element-wise controllability, which hinders their use in editing scenes and training embodied AI agents. We propose Dreamland, a hybrid world generation framework that combines the granular control of a physics-based simulator with the photorealistic output of large-scale pretrained generative models. In particular, we design a layered world abstraction that encodes both pixel-level and object-level semantics and geometry as an intermediate representation to bridge the simulator and the generative model. This approach enhances controllability, minimizes adaptation cost through early alignment with real-world distributions, and supports off-the-shelf use of existing and future pretrained generative models. We further construct the D3Sim dataset to facilitate the training and evaluation of hybrid generation pipelines. Experiments demonstrate that Dreamland outperforms existing baselines, with 50.8% better image quality and 17.9% stronger controllability, and that it has great potential to enhance embodied agent training. Code and data will be made available.
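
To make the idea of a layered intermediate representation more concrete, the following is a minimal illustrative sketch, not Dreamland's actual implementation. It assumes a toy setting in which each object-level layer is an axis-aligned box with a class label and a single depth value, and shows how such layers could be rasterized into pixel-level semantic and depth maps of the kind a conditional generative model might consume. All names (`ObjectLayer`, `LayeredWorld`, `rasterize`) are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class ObjectLayer:
    """Hypothetical object-level entry: class label, 2D box, and depth."""
    label: str
    box: Tuple[int, int, int, int]  # (x0, y0, x1, y1), x1 and y1 exclusive
    depth: float                    # distance from the camera, smaller is closer


@dataclass
class LayeredWorld:
    """Hypothetical layered abstraction: object layers on an H x W canvas."""
    height: int
    width: int
    objects: List[ObjectLayer] = field(default_factory=list)

    def rasterize(self, background: str = "road"):
        """Flatten object layers into pixel-level semantic and depth maps.

        Where boxes overlap, the object with the smaller depth wins,
        mimicking a simple z-buffer.
        """
        semantics = [[background] * self.width for _ in range(self.height)]
        depth = [[float("inf")] * self.width for _ in range(self.height)]
        for obj in self.objects:
            x0, y0, x1, y1 = obj.box
            # Clip the box to the canvas before filling it.
            for y in range(max(0, y0), min(self.height, y1)):
                for x in range(max(0, x0), min(self.width, x1)):
                    if obj.depth < depth[y][x]:
                        semantics[y][x] = obj.label
                        depth[y][x] = obj.depth
        return semantics, depth


# Toy scene: a pedestrian standing partly in front of a car.
world = LayeredWorld(
    height=4,
    width=6,
    objects=[
        ObjectLayer("car", (1, 1, 4, 3), depth=10.0),
        ObjectLayer("pedestrian", (3, 0, 5, 2), depth=5.0),
    ],
)
semantics, depth = world.rasterize()
```

In this toy version, element-wise control amounts to editing one `ObjectLayer` (for example, moving the car's box) and rasterizing again; the pixel-level maps update while the rest of the scene is unchanged.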