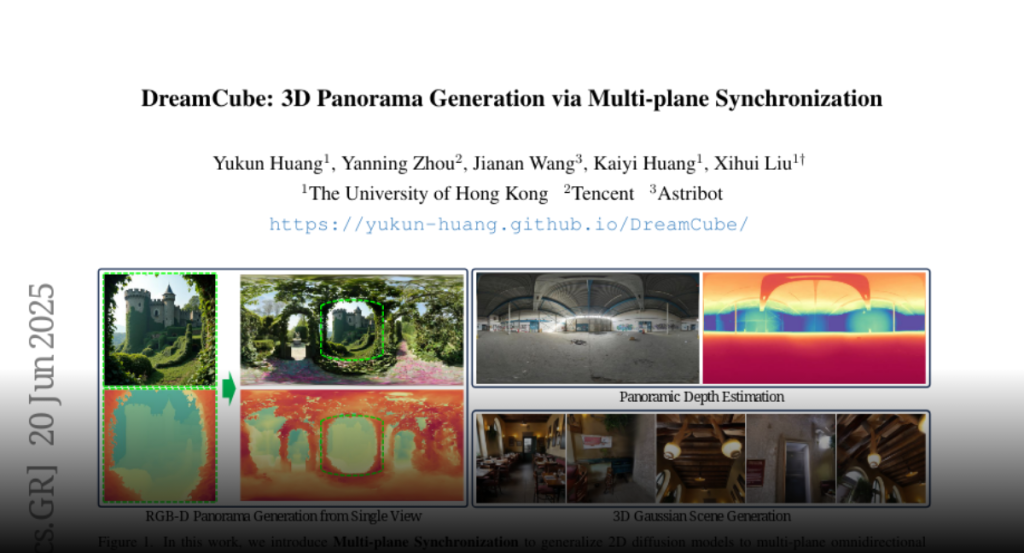

Multi-plane synchronization extends 2D foundation models to 3D panorama generation, introducing DreamCube to achieve diverse appearances and accurate geometry.

3D panorama synthesis is a promising yet challenging task that demands high-quality and diverse visual appearance and geometry of the generated omnidirectional content. Existing methods leverage rich image priors from pre-trained 2D foundation models to circumvent the scarcity of 3D panoramic data, but the incompatibility between 3D panoramas and 2D single views limits their effectiveness. In this work, we demonstrate that by applying multi-plane synchronization to the operators from 2D foundation models, their capabilities can be seamlessly extended to the omnidirectional domain. Based on this design, we further introduce DreamCube, a multi-plane RGB-D diffusion model for 3D panorama generation, which maximizes the reuse of 2D foundation model priors to achieve diverse appearances and accurate geometry while maintaining multi-view consistency. Extensive experiments demonstrate the effectiveness of our approach in panoramic image generation, panoramic depth estimation, and 3D scene generation.
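
To make the idea of synchronizing an operator across planes concrete, the following is a minimal sketch and not the DreamCube implementation. It assumes the panorama is stored as several planar faces (six here, as in a cube map, which the abstract does not state explicitly) and uses a simple normalization as a stand-in operator. The function names `normalize_per_face` and `normalize_synced` are hypothetical. Applied independently, the operator normalizes each face with its own statistics and discards brightness differences between faces; the synchronized variant shares one set of statistics across all faces, so their relative appearance is preserved.

```python
from statistics import fmean, pvariance


def normalize_per_face(faces, eps=1e-5):
    """Normalize each face with its own mean and variance (unsynchronized)."""
    out = []
    for face in faces:
        mu = fmean(face)
        var = pvariance(face, mu)
        scale = (var + eps) ** 0.5
        out.append([(v - mu) / scale for v in face])
    return out


def normalize_synced(faces, eps=1e-5):
    """Normalize all faces with one shared mean and variance (synchronized)."""
    all_values = [v for face in faces for v in face]
    mu = fmean(all_values)
    var = pvariance(all_values, mu)
    scale = (var + eps) ** 0.5
    return [[(v - mu) / scale for v in face] for face in faces]


# Hypothetical toy input: six faces of two "pixels" each; face i is brighter than face i-1.
faces = [[float(i), float(i) + 1.0] for i in range(6)]

per_face = normalize_per_face(faces)
synced = normalize_synced(faces)

# Per-face means collapse to zero; synced means keep the brightness ordering.
per_face_means = [fmean(f) for f in per_face]
synced_means = [fmean(f) for f in synced]
```

In this toy case every entry of `per_face_means` is zero up to rounding, while `synced_means` increases from the first face to the last, which is the kind of cross-face consistency that synchronization is meant to retain.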