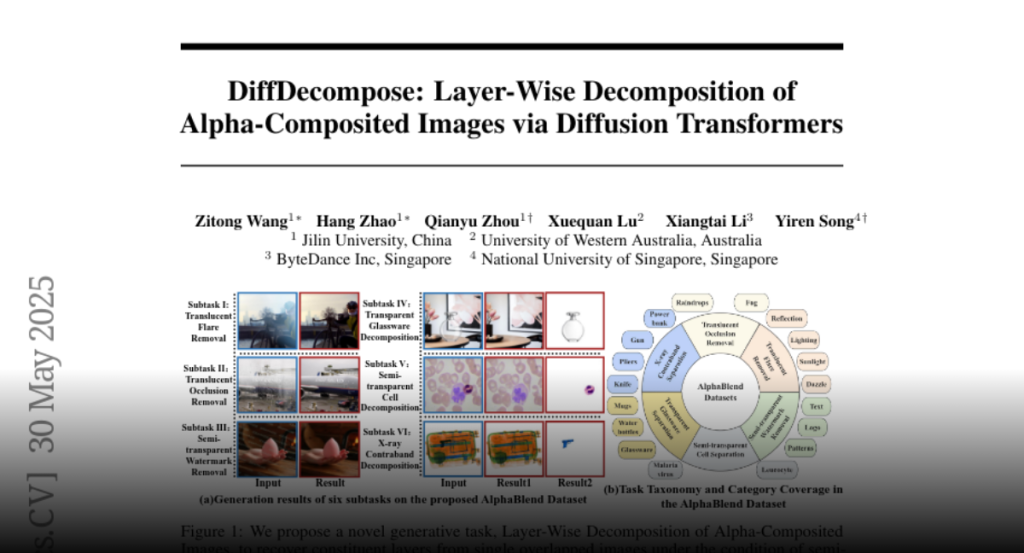

Diffusion models have recently achieved impressive performance in various generative tasks, including object removal. However, existing image decomposition methods still struggle to disentangle semi-transparent or transparent layer occlusions because they rely on mask priors, assume static objects, and lack suitable datasets. In this work, we introduce a new task, Layer-Wise Decomposition of Alpha-Composited Images, which aims to recover the constituent layers from a single image containing semi-transparent or transparent occlusions caused by nonlinear alpha blending. To address the challenges of layer ambiguity, generalization, and data scarcity, we present AlphaBlend, the first large-scale, high-quality dataset designed for transparent and semi-transparent layer decomposition. AlphaBlend supports six real-world subtasks, including translucent flare removal, semi-transparent cell decomposition, and glassware decomposition. Building on this dataset, we propose DiffDecompose, a diffusion transformer-based framework that models the posterior over possible layer decompositions conditioned on the input image, semantic prompts, and blending type. Instead of regressing alpha mattes directly, DiffDecompose adopts an In-Context Decomposition strategy, which allows the model to predict one or multiple layers without requiring per-layer supervision. It further introduces Layer Position Encoding Cloning to ensure pixel-level correspondence across layers. Extensive experiments on AlphaBlend and the public LOGO dataset demonstrate the effectiveness of DiffDecompose. Code and dataset will be released upon paper acceptance.
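To illustrate the layer ambiguity mentioned above, the following minimal sketch uses the standard "over" compositing operator, C = αF + (1 − α)B, on a single pixel. This operator is assumed here for illustration only; it is not necessarily the blending model used in AlphaBlend or DiffDecompose, and the function names `composite_over` and `solve_foreground` are hypothetical. The sketch shows that two different foreground and alpha pairs can produce the same composite over the same background, which is one reason a posterior over decompositions may be more appropriate than a single regressed answer.

```python
import math


def composite_over(foreground, background, alpha):
    """Standard 'over' compositing per channel: C = alpha * F + (1 - alpha) * B.

    Assumed blending model for illustration; not taken from the paper.
    """
    return tuple(alpha * f + (1.0 - alpha) * b
                 for f, b in zip(foreground, background))


def solve_foreground(composite, background, alpha):
    """Invert the 'over' operator for F, given C, B, and a chosen alpha > 0."""
    return tuple((c - (1.0 - alpha) * b) / alpha
                 for c, b in zip(composite, background))


def same_color(x, y, tol=1e-9):
    """Compare two RGB tuples channel by channel within a tolerance."""
    return all(math.isclose(a, b, abs_tol=tol) for a, b in zip(x, y))


# One background pixel (RGB in [0, 1]) and a first layer explanation.
background = (0.2, 0.4, 0.6)
fg_a, alpha_a = (1.0, 1.0, 1.0), 0.25
composite = composite_over(fg_a, background, alpha_a)

# A second explanation: pick a different alpha and solve for the
# foreground color that reproduces the same observed composite.
alpha_b = 0.5
fg_b = solve_foreground(composite, background, alpha_b)

# Both (foreground, alpha) pairs explain the same observed pixel.
recomposed = composite_over(fg_b, background, alpha_b)
print(same_color(composite, recomposed))
```

Even with the background known, the observed pixel does not determine the foreground and alpha uniquely; when the background is also unknown, as in single-image decomposition, the problem is further underdetermined.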