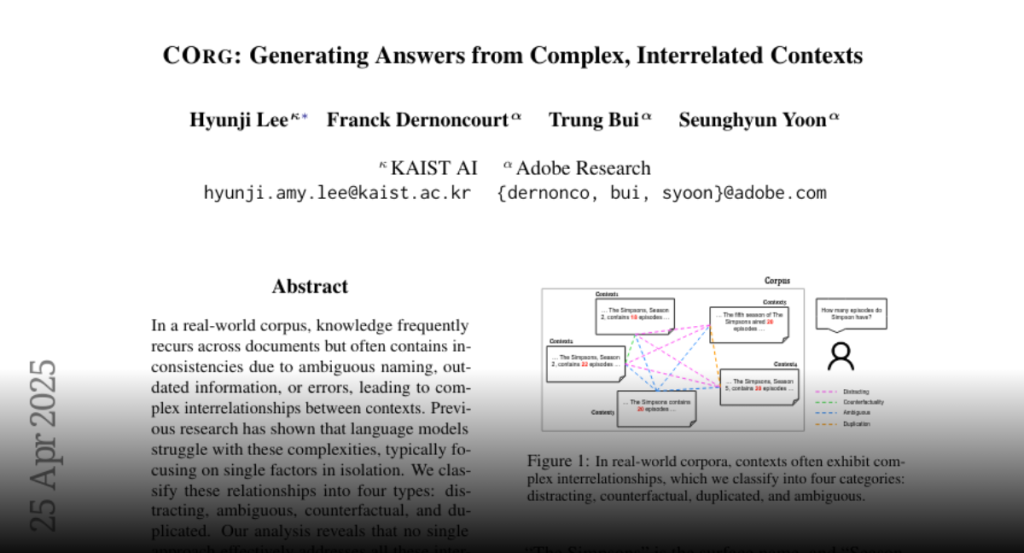

In a real-world corpus, knowledge frequently recurs across documents but often contains inconsistencies caused by ambiguous naming, outdated information, or errors, which leads to complex interrelationships between contexts. Previous research has shown that language models struggle with these complexities, but such studies typically examine each factor in isolation. We classify these relationships into four types: distracting, ambiguous, counterfactual, and duplicated. Our analysis reveals that no single approach effectively addresses all four types simultaneously. We therefore introduce Context Organizer (CORG), a framework that organizes multiple contexts into independently processed groups. This design allows the model to efficiently find all relevant answers while ensuring disambiguation. CORG consists of three key components: a graph constructor, a reranker, and an aggregator. Our results demonstrate that CORG effectively balances performance and efficiency, outperforming existing grouping methods and achieving results comparable to more computationally intensive single-context approaches.
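As a rough illustration of what "organizing contexts into independently processed groups" can mean, here is a minimal sketch. It is not CORG's graph constructor, reranker, or aggregator. It assumes the pairwise relation labels are already given, drops contexts labeled as duplicates of an earlier one, and keeps conflicting pairs (ambiguous or counterfactual) apart with greedy graph coloring, a swapped-in technique. The names `organize_contexts`, `aggregate`, and `answer_fn` are hypothetical.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical: relation labels that force two contexts into different groups.
CONFLICTING = {"ambiguous", "counterfactual"}


def organize_contexts(n: int, relations: Dict[Tuple[int, int], str]) -> List[List[int]]:
    """Group context indices 0..n-1 so that no group holds a conflicting pair.

    relations maps (i, j) with i < j to one of the four labels
    ("distracting", "ambiguous", "counterfactual", "duplicated").
    """
    # Drop the later member of every duplicated pair; keep the earlier one.
    duplicates = {j for (i, j), rel in relations.items() if rel == "duplicated"}
    # Unordered conflict edges between contexts.
    conflicts = {frozenset(pair) for pair, rel in relations.items() if rel in CONFLICTING}

    groups: List[List[int]] = []
    for idx in range(n):
        if idx in duplicates:
            continue
        # Greedy coloring: join the first group with no conflicting member.
        for group in groups:
            if all(frozenset((idx, other)) not in conflicts for other in group):
                group.append(idx)
                break
        else:
            groups.append([idx])
    return groups


def aggregate(groups: List[List[int]], answer_fn: Callable[[List[int]], str]) -> List[str]:
    """Answer each group independently and collect the distinct answers in order."""
    answers: List[str] = []
    for group in groups:
        answer = answer_fn(group)  # hypothetical per-group reader call
        if answer not in answers:
            answers.append(answer)
    return answers


if __name__ == "__main__":
    rels = {
        (0, 1): "ambiguous",
        (0, 2): "duplicated",
        (1, 3): "counterfactual",
        (2, 4): "distracting",
    }
    groups = organize_contexts(5, rels)
    print(groups)  # [[0, 3, 4], [1]]
    print(aggregate(groups, lambda g: "A" if 0 in g else "B"))  # ['A', 'B']
```

Greedy coloring is used here only because it is a simple way to guarantee that contexts in conflict never share a group while keeping the number of groups (and hence model calls) small. The trade-off between fewer groups and stricter separation is the kind of performance/efficiency balance the abstract describes.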